* 20 entries including: power grid infrastructure, a short history of wheat, gene chips, MySpace fakery, Hillary for president, ZUMWALT destroyer, global warming lawsuits, pythons in Florida, automotive black boxes, Iranian oil drought, traffic roundabouts in Canada, tracking spam, 21CN for the UK, bizarre warning labels.

* FAKING IT ON MYSPACE: The MySpace social-networking system has made a splash over the last few years, and also created controversy over a supposed rash of sexual predators infesting the site. According to an essay on TECHNOLOGYREVIEW.com by well-known computer columnist Wade Roush, the scandal over MySpace seems to be exaggerated. MySpace has over 116 million subscribers, more than the population of Mexico; the incidence of sexual solicitation on the system is low, and the company is working to give the boot to nuisances. Surveys show that there is a fair amount of peer-to-peer sexual solicitation on MySpace, but most of the time the recipients simply ignore it.

Roush claims that the real threat to MySpace is commercialization. Since 2005 it's been owned by Rupert Murdoch's News Corp, which means a focus on the bottom line. Any operation with 116 million clients is going to have marketing clout, and Google has recently inked an exclusive agreement with MySpace to provide search capability and targeted ads on the system.

That MySpace wants to make money is no real cause for complaint, but the business model is suspicious in some respects. MySpace was established as a social-networking system in which people set up profiles for themselves for communications and other interactions. The basic concept was derived from earlier social-networking systems such as Friendster, with the profiles containing a user's personal text, photos, videos, and so on, as well as a list of contacts or "friends" and feedback from said friends. MySpace differed somewhat at the outset in being friendly to independent artists like musicians, photographers, digital filmmakers, and the like, giving them a forum to promote their efforts. A band, for example, could provide profiles of its members, publicize concerts, and upload tunes; filmmakers could upload video trailers.

In the early days of the Friendster system, only an individual could set up a profile, but this was clumsy for, say, a band trying to get recognition, and so the company relented, allowing "fakester" profiles to be set up. People also liked to set up fakester profiles for fantasy characters or a pet, and users accepted it. MySpace accepted fakester profiles from day one, and has in fact taken the idea farther. Now it's easy to set up a profile for a company, a movie, a TV show, new products, and so on, with Pepsi and Burger King having their own fakester profiles -- Burger King's profile had 135,000 friends as of October 2006.

MySpace users go along with this because such brand-affiliation linkages help establish an online identity for a user, listing the cool products a particular user likes. The difficulty is that the system then starts to look less like social networking and more like a highly targeted marketing research system, in which businesses can obtain as elaborate a set of statistics as desired on the customer base, and on the other hand be able to target advertising down to the individual level.

To be sure, as most Amazon.com shoppers know, a "smart" advertising system is a nice thing to have, providing tipoffs to desirable products -- advertising ceases to be a nuisance when it actually promotes products that are interesting. The problem, as Roush points out, is that MySpace was not designed at the outset to be simply an advertising medium; it was designed to get people together. In turning into something not so different from a TV shopping channel, MySpace may be sowing the seeds of its own ultimate irrelevance.

* THE HILLARY QUESTION: When Bill Clinton left the office of president of the United States in 2001, even many of those who had voted for him breathed a sigh of relief, being glad to be done with the personal scandals he had brought down on himself. Those who had hated him all along were downright gleeful to see his backside, and they were at least as glad to see the last of his wife Hillary -- seen as pushy, ambitious, frosty, calculating, elitist, and too much the embodiment of loose-cannon liberalism. Goodbye, Bill and Hillary.

Now New York Senator Hillary Clinton has declared she's in the running for president: Hello, Hillary and Bill? As reported by THE ECONOMIST's American columnist Lexington ("Hating Hillary", 27 January 2007), to absolutely no surprise the Hillary haters are now coming back out of the woodwork, selling Hillary-bashing mugs and T-shirts and writing books accusing her of everything from fascism to leaving the toilet seat up. Such was exactly predictable; the question is: what of it?

The track record of Senator Clinton has demonstrated a person with her eye focused on the prize. Like Hillary Clinton or not, nobody ever sensibly claimed she was stupid or lazy, and she has carefully, methodically trimmed her sails to the centrist line. There is the tale of a bank robber who was asked why he robbed banks, to reply: "Because that's where the money is." A smart politician similarly knows that the center is, effectively by definition, where the votes are.

Senator Clinton has been carefully establishing links with conservative colleagues on issues of common concern, treading carefully on abortion, denouncing flag-burning -- and has been a solid supporter of the war in Iraq. Indeed, she has been so diligent in cultivating her connections to the military community that when she spoke at a meeting of military officers there were worries ahead of time that some of the audience would walk out -- but they gave her a standing ovation instead. Some conservative politicians who once abused her have made retractions, unusual in itself in today's polarized political climate.

No matter what she does, however, there is a large and loud faction that hates her, both for herself and for her husband. The extreme Left has also not been happy with her cultivation of the center, in particular for her backing of the war in Iraq. She has been booed at Left-wing rallies, and antiwar activist Cindy Sheehan has compared her -- not very credibly -- to Rightist talk-show host Rush Limbaugh. However, Democrats who are still interested in reality are now coming to realize that she was never quite the Left-outfield boogeywoman the conservatives had painted her to be. Even her much-despised health-care plan had support of businesses, she was for welfare reform, and she is a practicing Methodist.

How much will Hillary hatred cost her? As far as the extreme Left goes, not much. If she were running against George W. Bush, the only alternative they would have would be to vote for Ralph Nader (again!). It is of course very hard to believe that the Republicans will offer a candidate with approval ratings as low as those of the current resident of the Oval Office, and no matter who wins the next election it is just as difficult to believe the new president won't be trying to put the Iraq fiasco to bed, rationalize the war on terror, embrace environmental issues in a more wholehearted fashion, and perform more effective international diplomacy. After all, no candidate who didn't is going to win. Still, will the extreme Left vote for a Republican? Not likely.

The issue with the hard-core conservative Hillary haters has the virtue of being simple: they will, as the saying goes, vote for a dead yellow dog before they'll vote for Hillary. There aren't enough of them to prevent her from winning by themselves, but they could swing the vote. At the same time, it is hard to believe their hatred will sway anyone else. The only people who take any of the rabid Hillary-bashing books seriously are people who already believe it all anyway; as far as everyone else who reads any such book goes, the only message they walk away with is that the author is a nut. In fact, Hillary hatred may actually hand her votes. One of the things that provokes the Hillary haters is that she is a successful, powerful professional woman -- and attacking her even implicitly on that basis will drive many women voters to her side. Indeed, given the fact that the Hillary haters vent their detestation without a moment's thought of who it alienates, Senator Clinton may find their venom has a silver lining.

Senator Clinton is certainly used to being attacked, and knows both how to defend herself from attacks and how to turn them to her advantage. It's one of the advantages of being calculating. She has a powerful network of contacts and a massive campaign chest. If her husband is, to say the least, something of a mixed asset as far as public appeal is concerned, he is extremely well-connected, and nobody ever claimed that Slick Willie Clinton wasn't a very shrewd political operator. He also has experience in running two successful presidential campaigns.

That does lead back to the notion of Bill Clinton as the prince consort in the White House, an idea that inspires discomfort even among some who think Hillary might well be given her chance. However, the real issue is Hillary Clinton herself. Pushy and ambitious? Yes, but who gets to the White House who isn't? Cool and calculating? After the conviction politics of the current administration, maybe that doesn't seem like such a bad thing. [ED: The events of 2016 lend an interesting color to this article.]

* A SHORT HISTORY OF WHEAT (1): An article in THE ECONOMIST ("Ears Of Plenty", 24 December 2005) provided a quick history of one of humanity's greatest discoveries, the wheat plant, which was one of the enabling factors for civilization and still remains one of the top staple foods for the world.

About 12,000 years ago, after a period of warming, melting ice sheets in what is now North America dumped their flow out the Saint Lawrence Seaway into the North Atlantic. The result was the abrupt return of an ice age for about 1,100 years; glaciers returned to Northern Europe, and Western Asia became both colder and drier. The Black Sea almost completely dried up.

In what is now Syria, humans who had been living on acorns, gazelles, and grass seeds were forced to start cultivating plants: rye and chickpeas, local einkorn and emmer grasses, and later barley. Plants form cross-species hybrids much more easily than do animals, and einkorn and emmer grasses were hybridized to form what would become, after some further changes, modern wheat. Wild einkorn grass that grows in the mountains of southeastern Turkey still uniquely bears genetic patterns that it shares with modern wheat.

Wheat quickly became so different from its wild forebears as to be unable to propagate on its own. The seeds became larger; the "rachis" that bound the seeds together became less brittle and harder to break, allowing whole ears of grass and not just individual seeds to be collected; and the leaf-like "glumes" that covered the seeds loosened, making threshing much easier. In any case, humans had now started down the path of farming. 9,000 years ago, they had domesticated cattle, which could be fed wheat to produce milk and meat, with their manure used to fertilize fields. 6,000 years ago, they had invented the plow, improving crop yields.

5,000 years ago, wheat cultivation had spread as far as Ireland, Spain, Ethiopia, and India; it reached China 4,000 years ago, when rice paddy farming remained well in the future. The spread of wheat and farming in general meant the slow parallel rise in human population. Humans were no longer dependent on what they could scrounge for their food supply, and the numbers of humans increased accordingly.

Progress in the technology of wheat farming was slow, however. The Chinese invented the horse collar in the third century BC, allowing a horse (or mule) to pull a plow without being choked; the horse could plow faster than an ox, improving productivity. The next major improvement wasn't until 1701, when a Berkshire farmer named Jethro Tull developed a seed drill, based on organ pipes, that improved the productivity of planting by a factor of eight. His innovation prompted hostility, and it wouldn't be for the last time: a century later, the introduction of the threshing machine caused riots.

* The slow progress of the technology of wheat cultivation led the English economist Thomas Robert Malthus to propose, in 1798, that the human race would always tend to outstrip its food supply, leading to disastrous population crashes. In 1815, a huge volcanic eruption of Mount Tambora, on the island of Sumbawa in what is now Indonesia, dumped huge amounts of ash and dust into the atmosphere, and the result was what was called "the year without a summer" in the Northern Hemisphere in 1816. There were frosts in New England in July, chilly days in France in August. Wheat prices skyrocketed as crops failed, and it looked like Malthus had been right after all.

Doomsday was then deferred by bringing more land under the plow in Australia and North America. However, depletion of the soil meant that crop yields slowly began to decline, and by the end of the 19th century there were fears that the Malthusian crash was once again imminent. The introduction of the farm tractor then came to the rescue, once again boosting farm productivity enough to keep a step ahead of the Malthusian devil.

The other key to improving agricultural productivity was to figure out improved ways to replenish the soil with nitrogen, phosphorus, and potassium. Manure or a "break crop" of legumes had long been used by prudent farmers to keep their land productive, but neither approach was particularly efficient. Finding other sources of fertilizer was hit-or-miss until the 1830s, when it was discovered that dry islands off the coasts of South America and South Africa were all but buried in nutrient-rich guano -- bird droppings. The guano islands were such a magnificent source of fertilizer that people actually fought over them. However, they were a depletable resource and were running dry by the 1880s -- when mineral nitrate deposits were discovered in the uplands of Chile, leading to the development of a massive mining industry there to provide fertilizer to the farms of Europe.

The Chilean nitrate mines were a depletable resource as well, but there was an effectively inexhaustible source of nitrogen in the atmosphere that covered the planet. In 1909, the German chemist Fritz Haber, aided by an engineer named Carl Bosch of the BASF firm, figured out a way to synthesize ammonia from the nitrogen in the air and hydrogen obtained from coal. BASF scaled up the process and the Germans used it to support production of explosives during the First World War, but after the war the Haber process became (and remains) the backbone of the fertilizer industry. It won Haber the Nobel Prize, his efforts to create poison gases being discreetly overlooked in the award.

Farmers were suspicious of purely artificial fertilizers for some time. They felt that a real fertilizer had to have been part of some life process, such as bird droppings, not churned out of a factory from raw materials. There was also the problem that more potent fertilizers made wheat stalks grow higher. The tall stalks had a tendency to fall over in winds, to then rot on the ground.

Well, that wouldn't be a problem if wheat didn't grow as tall. After World War II Cecil Salmon, a wheat expert attached to the Allied occupation authority in Japan, collected 16 strains of wheat from the country, including one named "Norin 10", which only grew 60 centimeters (two feet) tall. In 1949, Salmon sent samples to a plant researcher named Orville Vogel working in Oregon, and Vogel came up with a set of short-stalked wheat hybrids. In 1952, a plant researcher named Norman Borlaug, who was working in Mexico under a grant from the Rockefeller Foundation, obtained seeds of some of these short-stalked strains, and bred them to create a new high-yield, short-stalked strain. By 1963, that strain made up 95% of Mexico's wheat crop, and Mexican wheat production was six times greater than it had been before Borlaug's innovation.

In 1961, M.S. Swaminathan, an official in the Indian agricultural ministry, invited Borlaug to India to obtain his help on improving the country's agriculture. The country seemed faced with imminent Malthusian disaster, but when Borlaug arrived in 1963, he showed that his Mexican strains could provide four or five times the yield of the varieties then grown by Indian farmers. In 1965, over the protests of entrenched interests, Swaminathan managed to convince the Indian government to buy 18,000 tonnes of Borlaug's seed. The shipment was delayed by American customs, riots in Los Angeles, and a war between India and Pakistan -- but the war had the silver lining of suppressing internal opposition to the new seed. Farmers took the seed eagerly, and by 1974 Indian agricultural productivity had tripled. Norman Borlaug won the Nobel Peace Prize in 1970 for his contribution to what was called the "Green Revolution". [TO BE CONTINUED]

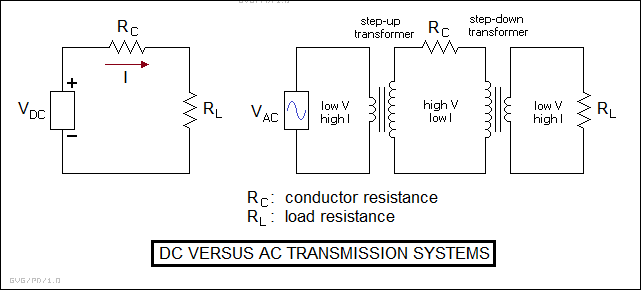

* INFRASTRUCTURE -- THE POWER GRID (3): The power grid that drives electricity across the country is distinctly hierarchical, with big power towers carrying AC electricity from power station switchyards, with the power ultimately passed down through city electrical networks to end users like ourselves. The hierarchy of the system is also reflected in voltage levels. Residences use 120 VAC, but the big trunk lines operate at hundreds of thousands of volts. The reason this is so requires another discussion of electrical theory.

All conductors -- in this case, the power lines -- have a certain small resistance of their own. Imagine having the same simple circuit given in earlier installments, with a voltage being driven across a resistive load. Now factor in the resistance of the wiring. If the resistance of the wiring is the same as the resistance of the load, then half the power will be lost in the wiring and won't be available for use in the load.
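The half-and-half split is easy to check with a little arithmetic. The following is only a minimal sketch of the simple series circuit described above; the function name and the values (100 volts, 10 ohms each) are made up for illustration, not taken from any real power line.

```python
def power_split(v_source, r_wire, r_load):
    """Return (watts lost in the wiring, watts delivered to the load)
    for a source driving a wire resistance and a load in series."""
    current = v_source / (r_wire + r_load)   # Ohm's law for the whole loop
    return current**2 * r_wire, current**2 * r_load

# Illustrative values only: wiring resistance equal to load resistance.
p_wire, p_load = power_split(v_source=100.0, r_wire=10.0, r_load=10.0)
print(p_wire, p_load)
```

With the two resistances equal, the two power figures come out equal as well, so half of what the source supplies never reaches the load.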

One way around this is to increase the load resistance. What this does is increase the resistance of the circuit as a whole, meaning that for a given voltage there's less current. Given a constant conductor resistance and less current, that means less power wasted in the conductor resistance. However, load resistance is something of a given, and it's not possible to just change it to whatever is needed. There is, however, a magic trick to make its resistance seem larger. That trick is called a "transformer".

As explained previously, an inductor consists of a coil of wire wrapped around a core. Now imagine wrapping a second coil around the same core. If a current is forced into the original "input" coil, it creates a growing magnetic field; this growing magnetic field then creates an electric current in the second "output" coil. This only works with AC, by the way, since with DC, once the current's stabilized in the input coil there's no changing magnetic field to create a voltage in the output coil.

That might seem pretty useless in itself, but suppose the second coil has ten times as many loops as the input coil. The changing magnetic field now cuts across ten times as many loops, and the effect is that the output coil has ten times the voltage. Of course, there's no way to get something for nothing: the power has to remain constant, and so the current is cut to a tenth to compensate.
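As a rough illustration of that tradeoff, here is a sketch of an ideal (lossless) transformer. The function name, the loop counts, and the 120 volt / 10 amp input are assumptions chosen for the example; a real transformer loses a little power in its windings and core.

```python
def ideal_transformer(v_in, i_in, turns_in, turns_out):
    """Return (output voltage, output current) for an ideal transformer.
    Voltage scales with the turns ratio; current scales inversely."""
    ratio = turns_out / turns_in
    return v_in * ratio, i_in / ratio

# Illustrative values: the output coil has ten times as many loops.
v_in, i_in = 120.0, 10.0
v_out, i_out = ideal_transformer(v_in, i_in, turns_in=100, turns_out=1000)
print(v_out, i_out)                  # ten times the voltage, a tenth of the current
print(v_in * i_in, v_out * i_out)    # power in and power out are the same
```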

Now take the simple example circuit, but with the input changed from a DC voltage source to an AC voltage source and then run through a "step-up" input transformer to increase its voltage by a factor of ten for transmission through the circuit. On the output side, the load is connected to a "step-down" output transformer that converts back down by a factor of ten, resulting in the original voltage. From the circuit side of the output transformer, the voltage appears ten times as great while the current appears ten times smaller -- meaning the load resistance appears a hundred times greater. This reduces the current in the circuit and so reduces the power lost in the conductor resistance. Some sources seem to imply that high voltage in itself is responsible for the improved efficiency, but though that's not wrong as such, it's not particularly helpful in making matters clear.
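The factor-of-a-hundred effect can be put into numbers. This sketch assumes ideal transformers at both ends and a purely resistive line and load; the function name and the one-ohm values are hypothetical, picked so the no-transformer case matches the half-lost example from earlier.

```python
def line_loss_fraction(r_wire, r_load, step_ratio=1.0):
    """Fraction of the supplied power dissipated in the wiring.
    step_ratio is the voltage step-up factor on the input side, matched by
    an equal step-down at the load; 1.0 means no transformers at all."""
    # Seen from the line, the load resistance is multiplied by the ratio squared.
    r_load_seen = r_load * step_ratio**2
    return r_wire / (r_wire + r_load_seen)

no_transformers = line_loss_fraction(r_wire=1.0, r_load=1.0)
with_transformers = line_loss_fraction(r_wire=1.0, r_load=1.0, step_ratio=10.0)
print(no_transformers)      # half the power lost in the wiring
print(with_transformers)    # about one percent lost
```

Stepping the voltage up and back down by ten takes the wiring's share of the power from a half to 1 part in 101 in this idealized case.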

The original electric power distribution system was invented by Thomas Edison and used DC, which won't work with a transformer and could only be distributed at low voltages. George Westinghouse realized the virtues of high-voltage AC and pushed it as a competing system, leading to a bitter war between the two men. Edison insisted that high-voltage AC was inherently unsafe -- it has its hazards, nobody with any sense gets close to a downed high-voltage line -- but his DC system was doomed by its inefficiency. The risks of high-voltage AC proved manageable.

By the way, early US power plants ran at 25 Hz, not 60 Hz. There was a push early on to standardize on 50 Hz, but US electrical equipment makers pressed Congress to legislate 60 Hz here as a trade protection measure. Some early US electric trains ran on 25 Hz power and 25 Hz subnets linger here and there, as does 16 2/3 Hz in Europe. Aircraft and space systems run on 400 Hz; the reason is that transformers get smaller as the frequency gets higher, and the lighter transformers are a big plus on aircraft.

* Not so incidentally, electric power is typically transmitted by triplets of cables, not just two. The problem with AC as just described is that it doesn't make very effective use of the wiring, varying cyclically between a peak and zero. In practice, electricity is produced in three "phases", staggered by 120 degrees, with each phase transmitted over two of the three cables, making better use of the wiring. Some industrial equipment will actually use three-phase power, but for general residential use a single phase is "tapped off" from two of the wires. [TO BE CONTINUED]
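The advantage of three staggered phases shows up in a quick numerical check. The sketch below assumes three equal sine waves offset by 120 degrees, normalized to a peak of 1, driving identical resistive loads; the function name is made up for the example.

```python
import math

def phase_values(angle_deg):
    """Instantaneous values of three sine waves staggered by 120 degrees."""
    return [math.sin(math.radians(angle_deg + shift)) for shift in (0, -120, -240)]

for angle in (0, 30, 90, 200):
    values = phase_values(angle)
    total = sum(values)                    # the three phases always cancel
    power = sum(v * v for v in values)     # proportional to total power delivered
    print(angle, round(total, 9), round(power, 9))
```

A single phase delivers power that pulses between zero and its peak, but under these assumptions the three together deliver a steady total at every point in the cycle.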

* GIMMICKS & GADGETS: According to an article on WIRED.com, vending machines are starting to go upscale: it is now possible, in large airports, fancy hotels, and the like to buy iPods, headphones, and other electronic gadgets with costs a few orders of magnitude more than normal vending-machine fare with just the swipe of a card. It seems the concept has proven very successful, though the iPods of course are delivered with no music or video on them. The next step is to add a music-download kiosk -- just plug in your shiny new iPod and download away. Incidentally, the iPod doesn't fall down into a delivery chute with a CLUNK -- a little mechanical arm grabs it and hands it off in a more considerate fashion.

* A correspondent tipped me off to an interesting operation named "ReVend" that sells, get this, "reverse vending machines". They might better be called "smart trashbins", being used to recycle certain specialized discards -- recyclable glass bottles, plastic bottles and cans, and so on -- and providing a payout to the user. They do have vending-machine cosmetics; it appears they are intended to support vending-machine kiosks.

* Pocket memory modules with a USB interface and a capacity of a gigabyte or two are now commonplace. Disk drive maker Seagate is now trying to do them one better, with a series of "FreeAgent Pro" pocket disk drives with capacities ranging from 320 gigabytes to a whopping 750 gigabytes of data. The drives feature a modular interface system that can accommodate a USB, serial ATA, or dual Firewire link. Prices range from about $200 USD to $420 USD.

Seagate also offers a "FreeAgent Go" series, in a slimline package with a fold-out USB interface and capacities ranging from 80 to 160 GB, for $130 to $190 USD, and even a matchbox-sized USB "FreeAgent Go Small" with 12 GB for $140 USD. The idea is that instead of lugging a laptop PC around, a user can just carry the pocket drive and plug it into the USB port of any handy PC. Obviously this is an idea that would work better if the drive also included a resident software suite, but for now all the modules carry is some data-management software.

* As the saying goes, everything old is new again, and an article on TECHNOLOGYREVIEW.com surveyed a new cyberfad: functional replicas of personal computers from the early days. For the real hardcore nostalgics, VintageTech sells a clone of the Digital Equipment Corporation's PDP-1 minicomputer. Although it's easy to find PDP-1 software emulators online and the clone is powered by Linux under the hood, the product gives the perfect look and feel of the real thing, allowing the user to "vatchen das blinken lites", as they used to say. It can be used to run SPACEWAR!, the very first two-person computer game, developed by MIT computer scientist Steve "Slug" Russell in 1961.

For those who don't want to go back that far, there's the "Replica 1", a clone of the 1976 Apple I, for only $160 USD. It's not a perfect clone down to the layout of the circuit boards, but it works the same. Those who want their nostalgia but still want to keep their computing power can opt for the Briel Computers AltairPC, which is just a case that makes an ordinary PC look like a MITS Altair, regarded by some as the machine that started personal computing. Download a MITS Altair emulator and it's hard to tell the difference.

* STEALTH DESTROYER: World War II destroyers were intimidating in appearance, bristling from bow to stern with turrets and guns and littered with antennas. As reported in POPULAR SCIENCE ("Invisible Warship" by Gregory Mone, November 2006), the US Navy's latest destroyer, the "DDG1000 ZUMWALT", the first new Navy destroyer design in three decades, is about as featureless as a surface warship could be thought to be, with inward-sloping sides rising seamlessly up into a superstructure in the shape of a truncated wedge, containing the bridge, planar radar and communications antennas, and even the smokestack.

The 183 meter (600 foot) long ZUMWALT has been designed for shallow water "littoral" warfare, the Navy not expecting a fight on the high seas these days. The blocky shape of the vessel helps reduce its visibility to radar, with the buried smokestack reducing the ship's infrared signature. The engine room is lined with soundproofing to reduce the acoustic noise signature. The ZUMWALT will be able to get in close to shorelines to dispatch SEAL commandos on assault boats, or on helicopters operating off the flight deck in the rear.

SEALs will receive fire support from the ship's armament after going in. There are two turrets forward, each with a single automatic 155-millimeter gun, and two small turrets aft, each with a single 57-millimeter gun. The 155-millimeter guns will be able to fire rocket-boosted GPS-guided shells at a high rate of fire, giving them long-range punch. For deep strike, antiship attack, or anti-aircraft defense, the vessel carries a real punch with 80 vertical-launch missile silos lining the forward turrets and the aft flight deck.

The ZUMWALT's sophisticated dual-band phased-array radar will give a comprehensive view of the threat environment from the surface to the air to the horizon, capable of cutting through countermeasures and rough seas to spot small targets. The reversed-sloped bow ends in a bulbous underwater nose containing a sophisticated sonar array. Construction of the ZUMWALT is expected to begin in 2007, with the ship commissioned in 2012. Six other members of the class are planned.

* CO2 LAWSUITS: According to an article in BUSINESS WEEK ("Global Warming: Here Come The Lawyers" by John Carey and Lorraine Woellert, 30 October 2006), the rising tide of concern over global warming is now starting to reach into US courts. After the destruction wrought by Hurricane Katrina on the US Gulf Coast in late 2005, some lawyers among the affected decided to take action. Since oceanic storms are produced by warm ocean waters, and global warming is increasing the temperature of the seas, they believe they have a case against industries whose carbon dioxide emissions have contributed to global warming. They are now suing a group of oil companies, coal companies, and utilities for damages.

That may sound bizarre, but it's not a completely unique case, there being over a dozen suits over climate change in the queue for US state and federal courts. It is one of the more extreme -- others involve a suit by the state of Massachusetts against the US Environmental Protection Agency (EPA), charging that the organization needs to work harder to clamp down on emissions, plus a suit being pressed by several Texas cities challenging power plants being planned by utilities, saying the plants don't do enough to cut down emissions. From that angle, the new crop of lawsuits is more focused on persuading the government to take global warming more seriously than it is a hunt for damages.

Some legal efforts along this line -- attempts by cities to hold gunmakers liable for damages due to shootings and crime, and by African-Americans to obtain reparations for slavery -- have been thoroughly shot down, but some, particularly the legal attacks on Big Tobacco, have been only too successful. Payouts over tobacco lawsuits have reached $300 billion USD and the companies have been legally forced to change the ways they do things. Even when the suits get shot down by the courts, the legal pressure often does have an effect on the targets.

Companies that are possible targets of global-warming lawsuits are taking the threat seriously, establishing joint operations for legal defense; some are also doing what they "reasonably" can to reduce emissions and lower their legal profile. Still, some of the plaintiffs have a formidable challenge to get their lawsuits into, much less through, the courts. In particular, the chain of culpability for damage by Hurricane Katrina is extremely flimsy and easily challenged, and the courts have been gradually becoming less open to dubious lawsuits.

However, the lawsuit of the state of Massachusetts against the EPA, based on the state's interpretation of the Clean Air Act and perception that the EPA hasn't been enforcing it, has plenty of legal substance. Ironically, if the state wins, it will cut the ground out from underneath other lawsuits that directly target businesses by identifying the EPA as the actual legal target. Other lawsuits seem more muddled: when the state of California tried to pass strong auto emissions regulations, auto manufacturers sued, with the state then pressing a countersuit against the car companies for their emissions. It would seem like a script only a lawyer could love.

[ED: One of the amusing aspects of this scenario is considering that since people exhale CO2, every individual could in principle be sued. I recall Walt Kelly's classic POGO comic strip, during a period where the inhabitants of Okefenokee Swamp were debating the fact that pollution was caused by people breathing. At one point two of the characters were on their way to visit the local undertaker, a vulture named Sarcophagus Macabre; one character says: "So, where does this vulture stand on the issue of people breathing?" The other answered: "Vultures are usually in favor of those who don't." The final, famous conclusion of the debate was: "We have met the enemy, and they is us!"]

* GENE CHIPS (2): While researchers refined the DNA chip, work on sequencing the human genome was accelerating towards completion, and the bioscience industry was becoming very excited, with share prices of companies either involved or expected to benefit from the human genome project skyrocketing. The beneficiaries included Affymetrix, which saw product demand soar. It soared enough to bring competitors into the fray.

Anyone familiar with high tech could easily recognize the potential for patent litigation in this scenario, and in fact it was a bit puzzling as to why it took so long. In 1997, Incyte Genomics bought out Synteni for $90 million USD, with Affymetrix then suing Incyte for patent infringement -- on the basis that Affymetrix's patents covered the entire concept of a DNA microarray, not any specific implementation. Many in the field found this claim an overreach, and the lawsuit also chilled investment by venture capitalists in DNA chip technology.

While the suit went on, Affymetrix ended up being on the receiving end of a lawsuit in turn. In 1995, Edwin Southern of Oxford had set up a firm named Oxford Gene Technology (OGT) to pursue his ideas on gene chips. Affymetrix then bought out a division of a company that had obtained a technology license from OGT, and Affymetrix officials claimed that gave their company a right to OGT technologies as well. OGT did not agree and sued Affymetrix in 1999. The lawsuits with Incyte and OGT were both settled for undisclosed sums in 2001.

Today, Affymetrix remains the leader of the pack, but there are major competitors in the DNA chip business -- including Agilent, a Hewlett-Packard spinoff; General Electric Health Care; and Illumina, which has focused on DNA chips to characterize genetic variation, providing information about predisposition to disease and response to therapy. Such arrays are becoming increasingly popular, and in 2004 Affymetrix sued Illumina for patent infringement, despite the fact that Illumina's DNA chip technology is very different from that used by Affymetrix. In 2006, Illumina retaliated with a set of countersuits; the fight is still going on.

* The litigation is obnoxious to researchers in the field, who see it as doing little to advance either the market or technical progress while doing much to enrich lawyers, but they have good reason to be pleased with the progress of DNA chip technology. Prices are now reasonable due to volume, competition, and improved manufacturing processes: in the mid-1990s, the production yield of Affymetrix's DNA arrays was only about 10%, but it's close to 100% now.

People keep finding new uses for the chips. In 1999 Todd Golub, then at the Whitehead Institute for Biomedical Research in Cambridge, Massachusetts, and his colleagues published a paper that classified cancers on the basis of their gene-expression profiles or "signatures". This allowed the identification of different cancers, such as leukemias, that looked identical under a microscope. Researchers have gone on to catalog a wide range of cancer signatures. Roche, the pharmaceutical giant, is working on DNA chip diagnostics based on the AmpliChip, and has several in clinical trials. One chip can sort out about 20 different variants of leukemias, and another will spot mutations in the p53 gene, which can affect a patient's inclination towards cancers.

Big pharma companies like Roche and Merck also use DNA chips in drug-discovery research. In 2001, Merck bought up Rosetta Inpharmatics, a software firm that specializes in the interpretation of gene-expression profiles. Merck researchers are now performing 40,000 DNA chip analyses a year, with the results put into a database that now has more than 200,000 entries and is interpreted using Rosetta's software. The microarray data has helped flag drug candidates that could have nasty side effects, allowing the drugs to be modified or dropped. According to Merck officials, about 20% of the Merck drugs now in clinical trials have been developed with the help of DNA chips.

The Broad Institute, a collaboration of the Massachusetts Institute of Technology and Harvard, is working on a similar project in the public arena. Institute researchers recently published a paper in AAAS SCIENCE describing a new database concept, which they call the "connectivity map". The idea is to express the action of drugs, genes, and diseases in the common language of gene expression profiles. Database software will then be able to sift through the profiles to identify matches and patterns. The project aims over the next two years to identify profiles for all approved drugs in the USA. The resulting comprehensive dataset will be useful for identifying new uses for existing drugs and for identifying previously unknown responses to drugs.

Researchers believe that they are only scratching the surface of what DNA arrays can do. Says Joe DeRisi: "We're at the beginning of genomics-based diagnostics and therapeutics." [END OF SERIES]

* INFRASTRUCTURE -- THE POWER GRID (2): The previous installment in this series described basic "direct current (DC)" circuit concepts, as well as the concept of "resistive" loads. However, although battery-operated gear and cars run off of DC, the power grid is "alternating current (AC)". In AC, the voltage is varying all the time in a cyclical fashion, in the form of a sine wave. In the US, the standard power-line frequency is, as mentioned, 60 Hz, while it is generally 50 Hz outside of North America. Oddly, parts of Japan are 60 Hz, while other parts are 50 Hz.

The voltage varies from a maximum of +170 volts to a minimum of -170 volts, but the AC line voltage is actually given as 120 "volts AC (VAC)". This is the "root mean square (RMS)" value of the sine wave, or in other words the square root of the average of the square of the waveform. This is the value that will give the proper conversion of the AC voltage into watts when it's put across a resistor. In fact, using RMS values, everything said about the DC circuit in the previous installment in this series is true for an AC circuit. Put 6 VAC across a 100 ohm resistor, the current is 60 milliamperes, and the power dissipation is 6 * 6 / 100 = 360 milliwatts.
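The RMS arithmetic can be checked numerically. What follows is only a minimal sketch: the function names and the choice of 1000 samples per cycle are assumptions made for illustration, not anything from Hayes' book.

```python
import math

def rms(samples):
    """Root mean square: square each sample, average the squares, take the square root."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def sine_samples(peak_volts, n=1000):
    """Evenly spaced samples over one full cycle of a sine wave (n is an arbitrary choice)."""
    return [peak_volts * math.sin(2 * math.pi * k / n) for k in range(n)]

# A sine wave peaking at 170 volts works out to roughly 120 volts RMS.
line_rms = rms(sine_samples(170.0))

# RMS values plug straight into the DC formulas: 6 VAC across 100 ohms.
v_rms, r_ohms = 6.0, 100.0
current_amps = v_rms / r_ohms          # 0.06 A, or 60 milliamperes
power_watts = v_rms ** 2 / r_ohms      # 0.36 W, or 360 milliwatts
```

For a pure sine wave the RMS value is the peak divided by the square root of 2, which is where the 170 to 120 volt relationship comes from.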

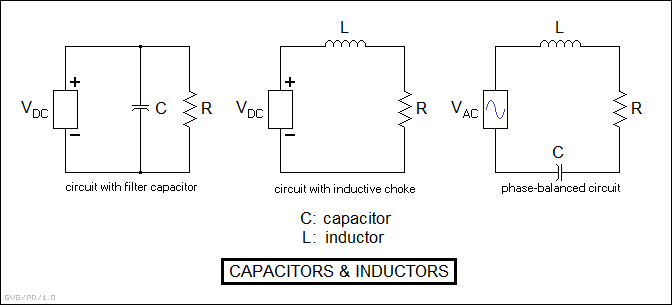

However, AC circuits can involve two other classes of loads besides resistors: capacitors and inductors. A simple capacitor just looks like a pair of plates separated by a gap, with a wire lead to each plate. Connect a battery to it, and it will store a charge proportional to the size of the plates and inversely proportional to the gap between the plates. Put certain types of nonconductive "dielectric" materials, usually various kinds of plastics, between the plates, and the charge storage or "capacitance" is multiplied by the "dielectric constant" of the dielectric.

A capacitor can be used in a DC circuit, placed in parallel with a load to provide a "surge tank" of electric charge when it's needed. A large capacitor is generally used in DC power supplies in a PC or the like to perform this task, the capacitor in this case being called a "filter capacitor", in that it filters out voltage irregularities. Of course, placing a capacitor in series with a load in a simple DC circuit is silly -- after the capacitor charges up, no more current flows through the circuit. A capacitor is said to be something like the electrical equivalent of a spring, storing up energy in the form of electrical charges and then releasing it later.

An inductor simply looks like a coil of wire, often looped around a core of iron or an iron-based compound called "ferrite". One of the basic rules of electricity is that a current flowing through a wire will generate a magnetic field. Switch a current through a wire and a compass will be turned by it. The other basic rule is that if a changing magnetic field is passed over a wire, it will set up a voltage in it. Switching a current into an inductive coil causes a growing magnetic field that cuts through the loops of the coil, creating a voltage that resists the current. This is called a "back-EMF". Once the current is stable, then shutting off the current flow causes the magnetic field in the coil to collapse, creating a voltage that tries to keep the current going. This voltage can be high enough to drive an arc across a gap, which is how a classic automotive distributor / spark plug system works.

An inductor is said to be something like the electrical equivalent of a flywheel, with its "momentum" resisting changes in current. The faster the change in current, the greater the back-EMF, which means that an inductor tends to block out high-frequency noise. This is why it's common to have cylindrical ferrite slugs or "chokes" on various types of cables used on computer gear and the like -- the choke makes sure high-frequency noise won't make it through the cable.

A full description of the operation of capacitors and inductors in AC circuits would be too difficult to consider here, and it's not necessary. A few simple considerations will suffice. In an AC circuit, a capacitor in series will not act like an open circuit -- it will act like a load that obeys Ohm's law, except that instead of resistance it provides a "capacitive reactance" or:

capacitive reactance = voltage / current

An inductor will similarly provide an "inductive reactance". In either case, the reactance differs from a resistance in that no energy is dissipated -- it's stored during one part of the cycle and released in the other. This implies that voltage across and current through a capacitor or an inductor are not in phase.
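The link between phase and dissipation can be illustrated by averaging voltage times current over one cycle. This is a sketch under stated assumptions: the 90-degree shift is the textbook figure for an ideal capacitor rather than something given above, and the 1.7 ampere peak current and the function name are made up for the example.

```python
import math

def average_power(v_peak, i_peak, phase_deg, n=1000):
    """Average of v(t) * i(t) over one cycle, with the current shifted by phase_deg."""
    phase = math.radians(phase_deg)
    total = 0.0
    for k in range(n):
        angle = 2 * math.pi * k / n
        total += v_peak * math.sin(angle) * i_peak * math.sin(angle + phase)
    return total / n

# Resistive load: current in phase with voltage, so energy is steadily consumed.
resistive = average_power(170.0, 1.7, 0)

# Ideal capacitor (assumed 90-degree lead): energy taken in during one part
# of the cycle is handed back in another, so the average comes out near zero.
reactive = average_power(170.0, 1.7, 90)
```

The resistive case averages to half the product of the peaks, which is the same as RMS volts times RMS amperes, while the reactive case averages out to essentially nothing.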

Since current leads voltage in a capacitor but lags it in an inductor, if a capacitor and inductor of the appropriate relative sizes are placed in a circuit together, they can cancel out and the overall circuit has current and voltage in phase. Generally, when investigating the power grid, it's not necessary to worry much about capacitive or inductive loads, since the whole thing can be generally modeled in a simple way as a resistive circuit, but there are some features that would be hard to understand without a little knowledge of them. [TO BE CONTINUED]

* SLITHER: Visitors to the US Southeast quickly become aware of a green vine named "kudzu" that carpets the landscape. It was a Japanese import that found the climate there to its liking and decided to take over. According to an article in THE ECONOMIST ("Burmese Days", 2 December 2006), a patch of the South, the great swamp complex of the Everglades in Florida, is dealing with a less pervasive but somewhat more alarming invader: the Burmese python.

Nobody's quite sure how the damn things got there, the assumption being that they started out as exotic pets that got loose, but over the last few years they have been increasing in number and size, occasionally making meals of pets of Miami residents. The snakes like the Florida climate and breed quickly, making them a particular threat to the wildlife of the Everglades.

Wildlife authorities are trying to exterminate the snakes and have come up with a few ingenious tricks. One is to catch female pythons, tag them with radio transmitters, and release them, in hopes of tracking them to nesting areas. Another scheme is to use female python pheromones -- odors produced at breeding time -- to trap male snakes. Dogs are being trained to track the pythons as well. The Florida legislature is considering new regulations to handle the threat, for example requiring permits to own such creatures -- though some suggest the pythons should be simply banned, period.

[ED: After reading this little article, I had to double-check the date on the magazine to make sure it wasn't April 1st -- THE ECONOMIST has a tradition of April Fool's articles. It brings back a little nostalgia for THE X-FILES: "Mulder, you can't honestly believe that the cultists were eaten by pythons, can you?" "The truth is out there, Scully."]

* AUTOMOTIVE BLACK BOX: Everybody's familiar with the fact that cars generally have computerized boxes under the hood. What everybody isn't so aware of is that, according to TIME ("Psst, Your Car Is Watching You" by Margot Roosevelt, 14 August 2006), such boxes could be used to provide evidence to legally incriminate drivers.

About a third of the cars on the roads of the USA, and about two-thirds of the new cars being sold here, contain "event data recorders (EDRs)", which will record about 20 seconds of vehicle operational data up to a crash. Two New York City teenagers who got into a street race, one driving a Corvette and the other a Mercedes, ended up plowing into a Jeep, killing a woman and her fiance. One of the teens told detectives that the cars hadn't been going faster than 55 MPH, but the Corvette's EDR said that it had been going 139 MPH when the race came to its disastrous end. The two teens ended up in prison for three years on manslaughter charges.

There is an ongoing debate over EDRs, with advocates claiming they improve automotive safety and the critics claiming they are unacceptably intrusive. The US government likes them and in fact is passing regulations to standardize them so they can all be read, by a laptop computer or whatever, using the same interface and software. Some advocates are pushing for laws to make EDRs mandatory for every car.

The critics have been vocal enough to push the US National Highway Traffic Safety Administration to call for regulations specifying that dealers must disclose if a car has an EDR. Several US states already have this as a law, and also mandate that EDR contents cannot be downloaded without the owner's permission. Some state legislators have pushed for a law that would modify EDR systems so the owners could turn them off.

There is a concern that EDRs could be used to spy on people's driving habits. A standard EDR doesn't have the memory capacity to be much of a spy, but transport companies often fit their trucks with full-fledged black boxes to monitor driving habits over the longer term. Corporate fleet car operations also sometimes have comparable black boxes, and they are even available for, say, parents who want to make sure their teenagers aren't racing around behind their backs, with the box capable of issuing warnings when the driver speeds. The critics worry that expanded EDRs with wireless links might allow the police to bust drivers for infractions nobody witnessed, or let insurance companies adjust their rates depending on driving habits. In fact, some insurers are starting to push ideas along this line.

EDR data is currently admissible as evidence in 19 US states, and has led to a number of convictions. The political quarrel over EDRs continues. For now, everyone agrees that drivers should realize that their car may be keeping tabs on them.

* IRAN RUNNING DRY: The current regime in Tehran has been a persistent annoyance to the West, but as discussed in an article in BUSINESS WEEK ("Surprise: Oil Woes In Iran" by Stanley Reed, 11 December 2006), opponents of Iran can take some comfort in the fact that the country is now finding it harder and harder to pump oil.

At 137 billion barrels of oil, Iran has the second biggest deposits in the world, after Saudi Arabia. However, the rate at which the Iranians have been pumping that oil has been steadily declining. In 1974, five years before the Islamic Revolution, Iran was pumping 6.1 million barrels a day; now it's only pumping 3.9 million. About 60% of Iran's fields are more than 50 years old and are starting to run dry. Officials of the National Iranian Oil Company (NIOC) know there's a problem and have been warning the government that unless things change, Iran's oil flow will continue to dwindle.

Underinvestment is the key to the problem. Iran funds NIOC at about $3 billion USD a year, which is about a third of what's needed. Gasoline prices are set at only 35 cents a gallon, guaranteeing high consumption -- in fact, so high that national gasoline use has outstripped Iranian refinery capabilities. Iran imported about 40% of its gasoline in 2006 at a cost of $5 billion USD. The subsidy on gasoline is part of the general problem that the current Iranian government under President Mahmoud Ahmadinejad has been bracing up public support by lavish public spending. To an extent that generosity has been boosted by high oil prices, but oil prices have been falling and the Tehran government could find itself in a nasty pinch.

The government also seems to have mixed feelings about obtaining foreign help to pump oil. To be sure, the Iranians have good cause to be suspicious of foreigners involved in their oil industry, having been handed some raw deals in pre-revolutionary days, but the truth of the matter is that Iran needs the help. Xenophobia and revolutionary bureaucracy have choked off deals, as have international sanctions -- which are likely to get tougher as long as Iran continues to flaunt its nuclear program. Iran shares a huge offshore natural gas field with Qatar, but while the Qataris have been signing huge deals to tap into the field, Iranian efforts have been moving along at a crawl at best.

Those tempted to gloat about Iran's fumblings in the oil business should probably not take their glee too far, however. The Iranians were able to substantially boost oil production during the Iran-Iraq War of the 1980s without any foreign assistance, and the Iranians have shown they can be enterprising, for example having developed a local arms industry capable of building sophisticated weaponry. There's no law of physics to prevent Iran from turning around its oil industry -- though the critics of the current government can find some hope in the thought that such a turnaround may imply at least a regime less focused on grandstanding and causing trouble, and more focused on taking care of business.

* As another case in point, a BBC WORLD TV report indicated that Iran is suffering the worst "brain drain" of any major country on the planet. Young educated Iranians are leaving the country en masse to seek a better life elsewhere. Their motivations are not particularly ideological: it's just that unemployment is high in Iran and there's no work for them, an unpleasant circumstance adding to the impression that the current government is theatrical but incompetent.

Recent elections showed reformists regaining some of the ground they had lost to the conservatives, but again, those who might gloat should find it useful to remember that past hopes for a kinder, gentler Islamic Republic of Iran have consistently led to disappointment -- and the fact that current US attitudes towards Iran are, with some good reason, not exactly kind and gentle themselves doesn't give much basis for optimism.

* GENE CHIPS (1): The sequencing of the genetic code of humans and other organisms was a remarkable achievement. Such feats couldn't have been performed without the development of advanced biotechnology. As discussed in an article in THE ECONOMIST ("New Chips On The Block", 2 December 2006), one of the technologies used in these efforts, the "DNA microarray" or "DNA chip", is now proving revolutionary in its own right. A DNA chip is a glass slide marked with a grid of thousands of samples of different DNA fragments that permits a rapid examination of a genetic sample. The chips are now used in almost every field of biology, including toxicology, virology, and diagnostics. So far, they have been a lab tool, useful for determining which genes are activated or "expressed" during normal or pathological cell operation -- but gene chips are now finding their way into the clinic.

In 2004, American and European regulatory bodies approved for the first time a medical test based on a DNA chip. The chip, the "AmpliChip CYP450", made by pharmaceutical giant Roche in collaboration with DNA chip-maker Affymetrix, can identify 31 different variations in two genes that affect how individuals react to a range of commonly prescribed drugs. This allows physicians to determine the appropriate drug and its dosage level for a particular patient.

* The origins of the DNA chip go back to the late 1980s. In those days, analysis of genes was a laborious lab process. Work was underway towards miniaturizing and automating analytical tools, with Edwin Southern, a well-known professor of biochemistry at Oxford University in the UK, obtaining a pioneering patent on microarrays in 1988. However, the real push towards DNA chips was provided by an American researcher named Stephen Fodor.

In 1989, Fodor was a new hire at Affymax Research in Palo Alto, California, a company that was working on analytical tools for drug development. Fodor and his colleagues came up with a scheme for a tool that borrowed technology from the semiconductor industry, and published a paper on the concept in the journal AAAS SCIENCE in 1991. The paper envisioned using photomasking techniques to set up a microarray of different biomolecules on a substrate coated with light-sensitive chemicals. The substrate would be flooded with a particular biomolecule; light passing through a hole or holes in a photomask would cause the biomolecule to stick to the substrate in the appropriate pattern. The process could be repeated with different biomolecules and photomasks to build up the microarray. The paper focused on building microarrays of short protein fragments called "peptides", but Fodor had his eye on developing DNA microarrays.

In the meantime Patrick Brown, a professor of biochemistry at the school of medicine of Stanford University in California, was thinking along similar lines. He worked with Dari Shalon, a grad student from the engineering department, to develop a robotic process for placing an array of different single-strand DNA sequences on an ordinary microscope slide. DNA, as is generally known, is normally a "double-strand" molecule, in the form of the famed "double helix" or "twisted ladder", with its information for assembly of protein and other life functions coded by arrangements of the four DNA "bases" -- A, C, T, G. A half-strand of DNA will mate up with another half-strand of DNA, or its similar cousin RNA, that has the mirror or "complementary" sequence of bases, just as a key will fit into a specific lock.

Such a DNA microarray could be flooded with DNA or RNA segments marked with fluorescent molecules. The segments would then mate with any of the array elements in the microarray that had a complementary sequence of bases. After the DNA microarray was washed clean, the matches would be retained, and the locations where they occurred could be spotted by exposing the microarray to ultraviolet, with the matches fluorescing in response.
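The matching step can be pictured with a toy model. This is a minimal sketch, not how any real array reader works: the sequences, the 2x2 grid, and the function names are all invented, and it assumes a spot lights up only on an exact complementary match, with the paired strand read in the opposite direction.

```python
# Base pairing rules for DNA: A pairs with T, C pairs with G.
COMPLEMENT = {"A": "T", "T": "A", "C": "G", "G": "C"}

def reverse_complement(seq):
    """Strand that pairs with seq: complement each base, read in the opposite direction."""
    return "".join(COMPLEMENT[base] for base in reversed(seq))

def fluorescing_spots(array_probes, labeled_fragments):
    """Return the grid positions whose probe pairs with any labeled fragment."""
    targets = {reverse_complement(fragment) for fragment in labeled_fragments}
    return sorted(pos for pos, probe in array_probes.items() if probe in targets)

# Hypothetical 2x2 array: grid position -> probe sequence (made-up sequences).
probes = {(0, 0): "ATGC", (0, 1): "GGCC", (1, 0): "TTAA", (1, 1): "CAGT"}
sample = ["GCAT", "ACTG"]   # made-up fluorescently labeled fragments
spots = fluorescing_spots(probes, sample)
```

Here the two sample fragments pair with the probes at positions (0, 0) and (1, 1), so those are the spots that would fluoresce after the wash.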

* Both Fodor and Brown applied to the US National Institutes of Health (NIH) for grants. NIH officials didn't know what to make of the microarray concept and were not enthusiastic. Fodor did get a grant, mostly because influential biochemist Leroy Hood liked the idea and pushed it through; armed with millions of dollars of grant money, Fodor and a group of colleagues set up Affymetrix in 1993. Brown also got a grant, but only by writing around the microarray concept in his grant application. The two efforts then went forward in parallel.

Affymetrix's first product was a chip for detecting mutations in HIV, the AIDS virus. In the meantime, Brown and Shalon were investigating use of microarrays for analysis of gene expression, working with another Stanford group to analyze the mustard plant Arabidopsis thaliana, a common "guinea pig" for studies of plant biology. The effort produced a paper published in AAAS SCIENCE in 1995, reporting that 45 genes of the mustard plant were observed and showed major differences in gene operation between root and leaf tissue -- that is, the same genetic "program" was being executed in different ways. The paper attracted considerable attention, and helped drum up business for Synteni, a company founded by Shalon to provide gene-expression analysis services to clients.

Affymetrix began to sell gene-expression arrays in the mid-1990s, which were generally purchased by pharmaceutical companies and research labs. Demand was rising outside of those niches, but Affymetrix didn't have the production capacity to fill that demand, and the company's products were expensive anyway: the DNA chips cost thousands of dollars each, and the chip reader cost $175,000 USD. At this point, Joe DeRisi, at the time a grad student in the service of Brown who had something of a classic "hacker" mentality, posted detailed instructions to the Internet to show others in the community how to "home brew" their own DNA chips and readers for a fraction of the cost of Affymetrix's products.

By the late 1990s, DNA chips were in widespread use, with high-profile research establishing their value. In 1997, Brown's lab provided 100 chips that covered the entire yeast genome for the first "whole genome expression" study. Such was the excitement in the community that in 1999 the journal NATURE GENETICS published an issue dedicated to DNA chips, with the cover titled "Array Of Hope". [TO BE CONTINUED]

* INFRASTRUCTURE -- THE POWER GRID (1): Chapter 6 of Brian Hayes' book INFRASTRUCTURE focuses on the power grid that delivers electricity from power plants to end users.

It's useful here to first explain some very elementary electrical theory. There are four forces in the Universe: two of them, the strong and weak nuclear forces, only operate at the subatomic level and we don't notice them directly at our level. We do, however, notice the other two, gravity and electromagnetism.

We generally have an intuitive grasp of gravity, and it's also useful to give it a quick outline here since its operation is similar to that of electromagnetism in many ways. Gravity is an attractive force between two masses, proportional to the product of the two masses and inversely proportional to the square of the distance between them. Down here on the surface of the Earth, we see gravity in a simpler fashion, being a force exerted on any mass we try to lift that would accelerate it at 9.81 meters per second squared if the mass were released, and which is constant with height -- or at least it seems to be from our ant's point of view, a rucksack weighing for all practical purposes the same at the bottom of a mountain as it does at the top. (The Earth's gravitational force is effectively exerted as if all the mass of the planet were at its center, and the distance climbing up the mountain is so much smaller than the radius of the Earth as to make the change unnoticeable, though it's not unmeasurable.)

The most useful example of gravity in this context is water falling down a pipe. The taller the pipe, the greater the energy acquired by the water per unit mass as it falls down: double the height, double the energy. The height of the pipe can be said to correspond to the gravitational "potential" of the flow, with the potential increasing with the height of the pipe. Of course, the total energy, not just the energy per unit mass, is proportional to the cross-section of the pipe and the amount of water that flows down the pipe: double the cross-section, double the energy. Double both the height and the cross-section, get four times the energy.

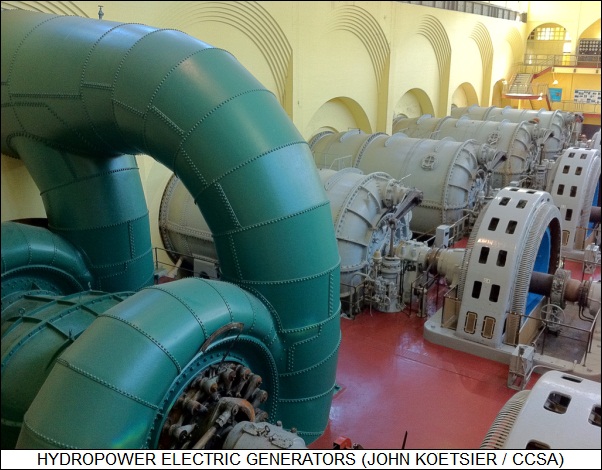

This is the physical basis of a hydropower station, with the amount of power obtained from a turbine proportional to the height or head of water flowing into it and the flow rate of that water. Incidentally, power is the rate at which energy is delivered, or energy per second. If a hydropower station were to empty a reservoir with a given amount of water in it, the result would be the same amount of energy no matter how quickly it was done, but the power would depend on the rate at which the water was drained. Double the flow rate, double the power -- but the reservoir is emptied twice as fast and the total energy remains the same.
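The distinction between power and energy can be worked through with numbers. The sketch below assumes an ideal, loss-free station and uses the standard relation of water density times gravitational acceleration times head times flow rate; the 50 meter head, the flow rates, and the reservoir volume are invented figures.

```python
WATER_DENSITY = 1000.0   # kilograms per cubic meter
G = 9.81                 # meters per second squared

def hydro_power_watts(head_m, flow_m3_per_s):
    """Ideal power from water falling through head_m at the given flow rate (no losses)."""
    return WATER_DENSITY * G * head_m * flow_m3_per_s

def reservoir_energy_joules(head_m, volume_m3):
    """Ideal total energy from draining volume_m3 of water through head_m."""
    return WATER_DENSITY * G * head_m * volume_m3

volume = 1_000_000.0                              # hypothetical reservoir, cubic meters
slow_power = hydro_power_watts(50.0, 10.0)        # 10 cubic meters per second
fast_power = hydro_power_watts(50.0, 20.0)        # double the flow, double the power
slow_energy = slow_power * (volume / 10.0)        # power times seconds to drain
fast_energy = fast_power * (volume / 20.0)        # drained in half the time
total_energy = reservoir_energy_joules(50.0, volume)
```

Both drain rates end up delivering the same total energy; only the time taken, and so the power, differs.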

Electromagnetism is similar to gravity in that it is a force between two objects with a quantity of what is called "electric charge", with the force proportional to the product of the charges of the two objects and inversely proportional to the square of the distance between them. It differs from gravity in that there are two types of charge, "positive" and "negative", and the force can operate in two directions, not just one: like charges repel, unlike charges attract.

So what exactly is a "charge"? It is difficult to define since it's a fundamental concept. What's a charge? It's what creates the electromagnetic force. What's the electromagnetic force? It's what's created by charges. In practice, we can think of a charge as consisting of a collection of subatomic particles called "electrons", each of which has a single fundamental unit of (negative) electric charge. The more electrons, the greater the charge. There's more than a little fine print to this description -- one distinction being that electrons aren't charges, they are particles that have charge -- but such issues will be ignored here for the sake of simplicity, and we can think of an electric charge as just being an accumulation of electrons.

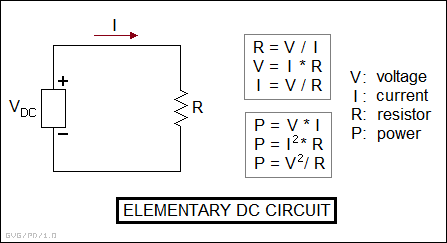

To get a more practical notion of electricity, consider the simplest possible electric circuit, consisting of a battery with a resistor "load" wired across its terminals. The battery is a source of electricity, provided at a given "voltage" or "electromotive force (EMF)". Voltage is given in "volts" and formally defined as the "energy per unit charge", but more intuitively it can be seen as analogous to the "height" of a flow of water falling under gravity, and in fact voltage is also called "electrical potential". The electrons pouring out of the battery form a "current" given in "amperes", flowing by convention from the "+" to the "-" terminals of the battery -- though this convention exists for historical reasons, the electrons actually flowing from "-" to "+".

Anyway, just like with a hydropower station, in which the power of the flow is given by the height times the current flow, in our little electric circuit the electric power is the voltage times the current flow:

power in watts = voltage * current = volts * amperes

That is, given a 3-volt battery driving 2 amperes, the output power is 6 watts.

Batteries operate at a more or less fixed voltage output. What determines the current for that voltage is the size of the resistive load. It's just like having a pipe of one diameter or another at our hydropower station, able to support more or less current flow depending on that diameter. In fact, one definition of resistance is that it gives the ratio of voltage to current:

resistance in ohms = voltage / current

That is, given a 3-volt battery and 2 amperes of current, the resistance value is 1.5 ohms. (This is a very small value of resistance.) This equation can of course be rearranged into two other useful forms:

voltage = current * resistance

current = voltage / resistance

That is, push a current of a half an ampere through a 100 ohm resistor, the voltage across the resistor is 50 volts. Similarly, if 3 volts is placed across a 100 ohm resistor, then the current is 30 milliamperes.
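The three forms of the relation are easy to check against the figures in the text. This is just a small sketch, with function names chosen for the example.

```python
def resistance_ohms(volts, amps):
    """Resistance as the ratio of voltage to current."""
    return volts / amps

def voltage_volts(amps, ohms):
    """Voltage across a resistor carrying the given current."""
    return amps * ohms

def current_amps(volts, ohms):
    """Current through a resistor with the given voltage across it."""
    return volts / ohms

r = resistance_ohms(3.0, 2.0)    # 3-volt battery, 2 amperes: 1.5 ohms
v = voltage_volts(0.5, 100.0)    # half an ampere through 100 ohms: 50 volts
i = current_amps(3.0, 100.0)     # 3 volts across 100 ohms: 0.03 A, or 30 milliamperes
```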

Actually, although the definition of resistance as the ratio of voltage to current is true, as described later it's a bit oversimplified, and there's an important and not-to-be-forgotten distinction in the definition of resistance, in that the energy pumped into the resistor is entirely consumed by it -- that is, a resistance is a circuit load that converts electrical energy into other forms. (This is not true for all circuit loads.) For example, the heating elements of a toaster or electric stove are essentially just resistors that convert electrical energy into heat. A traditional electric light bulb is a resistor that converts electric energy into heat and light. Since:

power = voltage * current

voltage = current * resistance

current = voltage / resistance

-- then by a little algebra, the electric power burned up in a resistor is given by:

power = current^2 * resistance

power = voltage^2 / resistance

That is, drive half an ampere through a 100 ohm resistance, the power output is 25 watts. Put 3 volts across a 100 ohm resistance, the power output is 90 milliwatts.
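As a quick sanity check on the algebra, the two resistor power formulas can be compared against plain voltage times current. The function names here are assumptions for the sketch.

```python
def power_from_current(amps, ohms):
    """Power dissipated in a resistor, given the current through it."""
    return amps ** 2 * ohms

def power_from_voltage(volts, ohms):
    """Power dissipated in a resistor, given the voltage across it."""
    return volts ** 2 / ohms

p1 = power_from_current(0.5, 100.0)    # 25 watts
p2 = power_from_voltage(3.0, 100.0)    # 0.09 watts, or 90 milliwatts

# Cross-check against power = voltage * current for the same two cases.
p1_check = (0.5 * 100.0) * 0.5         # voltage (current * resistance) times current
p2_check = 3.0 * (3.0 / 100.0)         # voltage times current (voltage / resistance)
```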

It might be nice here to point out the definitions of an "open circuit" and a "short circuit". As implied above, electricity has to flow in a complete loop or circuit; put a switch in the circuit described above and open it, and no current flows through the circuit -- "open circuit". However, attempt to wire across the load in this circuit -- "short circuit" it -- and the resistance drops to a very low level. If the voltage is constant, then the result is a large current and usually a bit of fireworks.

The current that flows in a short circuit is limited by the amount of current the source can produce. A small battery cannot produce much current, so usually shorting one out doesn't create much ruckus, though it at the very least drains the battery. Electrical engineers like to think of a source as having an "internal resistance", sort of like a resistor magically integrated into the battery, with the resistance value given by the open-circuit voltage divided by the short-circuit current.
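To make the internal-resistance idea concrete, here is a rough Python sketch. It assumes the usual simplified model of a source as an ideal voltage in series with a fixed internal resistance, and the battery figures (1.5 volts open-circuit, 3 amperes short-circuit) are hypothetical numbers picked for the example, not measurements of any real cell.

```python
# Internal resistance of a source, under a simple series-resistor model.
# The battery values below are hypothetical, chosen only for illustration.

def internal_resistance(open_circuit_volts, short_circuit_amps):
    """Internal resistance = open-circuit voltage / short-circuit current."""
    return open_circuit_volts / short_circuit_amps

def load_current(open_circuit_volts, internal_ohms, load_ohms):
    """Current through a load in series with the internal resistance."""
    return open_circuit_volts / (internal_ohms + load_ohms)

r_int = internal_resistance(1.5, 3.0)  # hypothetical small cell
print(r_int)                           # 0.5 ohms

# With the load shorted out (0 ohms), only the internal resistance
# limits the current, so the short-circuit current is recovered.
print(load_current(1.5, r_int, 0.0))   # 3.0 amperes

# A large load resistance dominates, and the internal resistance barely matters.
print(load_current(1.5, r_int, 100.0))
```

The lower the internal resistance, the more current the source can push into a short, which is the point of the car battery example that follows.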

A source that can produce a lot of current has a low internal resistance -- a good example being an automotive battery. People will often say they got a shock off of a car battery, but one could put a thumb on each terminal of a car battery and not feel a thing, since the voltage is too low to drive a perceptible current through the body's resistance. However, one terminal of the battery is connected to the car chassis while the other is connected to automotive systems like the starter, and if a mechanic clumsily places a wrench from a "hot" element to the chassis "ground", the result is a short circuit and sparks. Because an automotive battery has a low internal resistance, it can source a lot of current, and that is why it's not at all a good idea to wear a watch with a metal band when working under the hood of a car.

Incidentally, high voltages are not necessarily dangerous in themselves. The static shock one gets after scuffing one's shoes on a pile carpet actually has a surprisingly high voltage -- it has to be to throw off a spark, which is due to the ionization of molecules in the air -- but the total charge is low and the spark carries little power. [TO BE CONTINUED]

* CANADA ROUNDABOUT: As discussed in THE ECONOMIST ("Joining The Rotary Club", 11 November 2006), over the past few years, Canadian drivers have become accustomed to the roundabout, where instead of negotiating a four-way stop the drivers turn right into a loop and then turn right again to go on their way. The notion of a roundabout wasn't entirely new to the country, the idea having been implemented many decades ago, but the old roundabouts were much bigger and had a peculiar feature: the vehicles entering the loop had right-of-way over vehicles in the loop. From a modern perspective, that was obviously the wrong approach, making the roundabout into a traffic obstruction instead of a benefit.

The bad taste lingered for a long time. The modern roundabout was invented in the UK in the 1960s and caught on in Europe, but Canada and the US -- which had gone through much the same experience as the Canadians -- were leery. However, the Americans started adopting roundabouts in the early 1990s, and the Canadians finally began to take notice. Canadian traffic engineers did a detailed study of the US experience and concluded that roundabouts eased traffic flow, were safer than four-way stops, improved gas mileage, and reduced emissions. They also worked even when the traffic lights were down.

Now there are traffic roundabouts all across Canada. Canadian drivers haven't had much trouble with them, though one driver who turned left instead of right ended up going in reverse around the loop. There have been problems in big cities like Montreal with drivers refusing to let pedestrians get across, but now drivers are being heavily fined if they don't yield to foot traffic. There were concerns about snow, but snowplows seem to be able to keep the roundabouts working by simply going round until the snow is all cleared away.

[ED: Traffic roundabouts are still not the norm in the US, mostly being found in new shopping malls and housing developments. Retrofitting them to existing street systems is expensive, and so it's taking time to put them in place. A roundabout was installed at a somewhat tangled street intersection a stone's throw from my house to straighten out the traffic flow, and it seems to work well.

I really like roundabouts. Not only do they eliminate the petty confrontations, frustration, and occasional bungles of a four-way stop, but they give a slight fun feeling from way back of being on one of those theme-park kiddie-car rides that go in circles and circles -- not that I've ever been inclined to keep going around and around in the loop, though on rare occasions a traffic conflict can force me to do another 360. There's also a tendency to landscape the roundabout center nicely or even put statuary and fountains in it, the most spectacular example being the roundabout in Idaho Falls I mentioned in the travelogue for my last road trip.]

* SPAM HUNTERS: Last spring, the BBC conducted an investigation of a particular series of spam email messages trying to sell pharmaceuticals. According to the report on the exercise from BBC.com ("Spam Trail Uncovers Junk Empire" by Mark Ward) the investigators uncovered some interesting details. The spam under scrutiny was originally sent out during April and May 2006. The emails were typical of spam hawking pharmaceuticals, except for the fact that the volume was unusually heavy, with about 100 million emails sent out every 14 days. Many of the emails had citations from JRR Tolkien's classic fantasy novel THE HOBBIT, with the text included to try to convince spam filters that the emails were legitimate. This is a common trick among spammers; what was more interesting was that there were about 2,000 variations in the content of the messages, with the content changed a few times an hour during the distribution to prevent spam filters from getting wise.

Tracking the origin of the emails uncovered a "zombienet" of about 100,000 compromised PCs in 119 different nations, with most of the PCs centered in Europe. Since spam filters will target particular PCs that are sources of spam, the zombienet tended to acquire new PCs on a regular basis and discard old ones. To allow customers to buy the drugs, the spam network included 1,500 different web domains, with some of the domains run by ISPs who advertise themselves as "bullet proof" -- meaning they resist attempts to shut down their hosted websites, making them friendly to cybercrooks. The links vectored potential customers to websites that looked like slick, legitimate businesses, even with street addresses and other physical data, though they were inevitably "virtual" -- complete fabrications.

The investigators used a one-shot credit card to make a purchase, and actually got the product they ordered, though the expectation was that the exercise was a credit-card ripoff operation. The orders were fulfilled by a pharmaceutical firm in India; the drugs were sent for testing to see if they were what was advertised, though the results hadn't come back by the time the story went to press. The investigators have been trying to hunt through the cybermaze further, and have passed on data to the US FBI on a US-based hosting firm noted to have been associated with spam and cybercrime efforts in the past.

* A later BBC.com article claims that cybercrime gangs are taking a long-term view of their activities, with students enrolled in university computer science programs being given "scholarships" by the gangs to help them obtain degrees -- and being guaranteed a job after graduation. The gangs also surf forums and chat rooms to find young "helpers", sometimes no older than 14, to run errands while being groomed for greater efforts later. The gangs also attempt to recruit corporate personnel to help line up very profitable "inside jobs".

A report in CNET.com says the London Metropolitan Police have stated that local police forces in the UK are now being swamped with complaints about cybercrimes, while very few hackers and virus-writers end up in the dock. The recommendation was to establish a national cybercrime unit to take up the load.

[ED: On my personal cybercrime front, I recently got an email to ask me to check my Paypal account. It was an obvious scam, one giveaway being it didn't refer to me by name, another being that it came into an email account that Paypal knows nothing about. What was amusing was that when I brought up the email in Outlook, the banner described the character set as "Cyrillic" -- hmm, missed a trick there, Russky boy.

The email did have a good trick in providing what would seem to be a completely valid hyperlink to "http://www.paypal.com". My initial reaction was: "Huh?" How could they pull off a scam referring me to the legitimate PayPal website? The answer immediately popped into my head: It was a bitmap image of link text, not the link itself, hiding the scam link underneath.]

* 21CN FOR THE UK: The United Kingdom has been demonstrating a certain enthusiasm for the internet revolution. By the spring of 2006, about 19% of UK residents had broadband internet connections, a higher proportion of the population than in the US, and broadband networks cover the entire country, with any resident able to obtain access at will. Now, according to an article from IEEE SPECTRUM ("Nothing But Net" by Steven Cherry, January 2007), the UK's British Telecom (BT) is taking a great leap forward, embarking on a project to convert the nation's telephone system completely to digital internet protocol (IP). BT plans to shut down all 16 of its legacy X.25 and ATM digital telephone networks by 2012, replacing them with a single IP network, linking the nation's 22.5 million households. The plan, known as the "21st Century Network (21CN)", is already in motion, with some British phone subscribers performing calls over IP by the end of 2006, unaware of any change in the status quo.

No other nation currently has plans for a complete IP makeover of a national telecom system. It isn't a trivial exercise, since it means merging the current computer-based IP network with the current telephone network, and both have their own equipment, protocols, software, billing systems, and staff. That is precisely why the merger is being done: a unified network means elimination of redundancy, and enormous cost savings -- at least once the enormous cost of the transformation has been absorbed.

* The system is initially being implemented in South Wales. The telecommunications system there is handled through three large "superexchange" offices or "metro nodes", one each in Newport, Swansea, and Cardiff. There are six subordinate exchanges as well, with all nine exchanges reaching homes through 70 local offices. The end user connections currently include two services: traditional telephony and "digital subscriber lines (DSLs)". If a household uses both services, its copper lines are connected at the central office to a "splitter", which separates the voice channel from the DSL. The voice channel goes through a "circuit switch", a computer that handles making the connection to the remote phone -- a system initiated in the 1970s. The DSL channel is passed on to a "DSL access multiplexer (DSLAM)".

Maintaining dual systems is expensive, demanding more equipment, support, and even office space, with the central offices traditionally crammed to the ceiling with gear and wiring networks. Under 21CN, the circuit switch and DSLAM will be replaced by a single "multiservices access node (MSAN)". The IP network will use a scheme known as "multiprotocol label switching" to simulate the "virtual circuits" for phone conversations previously created by earlier digital technologies such as ATM.

The MSANs are the key to the 21CN system, and there will be over 5,500 of them by the time the conversion is complete, accounting for 40% of the cost. The MSANs were obtained from Fujitsu of Japan, which had already been selling DSL gear to BT, and from Huawei Technologies of Shenzhen, China, a relative newcomer. BT officials admit that Huawei won on price and that BT has no previous experience with Huawei as a vendor, but add that the company was "thoroughly vetted" before being awarded a contract: 21CN is a big project and nobody wants to court disaster. Huawei will also supply some of the "transmission equipment" to convert between fiber-optic links and end-user digital systems.

Ciena Corporation of Linthicum, Maryland, USA, is also providing transmission equipment. Ciena is a major manufacturer of fiber-optic gear and the award of a contract by BT to the company was no surprise, nor were other contract awards to big players like Alcatel, Cisco, Juniper, and Siemens. However, the failure of Marconi Telecom, a long-time supplier to BT and the last major British telecommunications equipment supplier, to win any contract for 21CN, was a shock, in fact enough of a shock to push Marconi Telecom into bankruptcy. In late 2005, Marconi's equipment businesses were bought out by telecommunications giant Ericsson of Sweden.

Ericsson had long been a major vendor to BT, supplying such things as central-office switches and software for direct-dial international calling, call waiting, caller ID, voice mail, three-way calling, call logs for billing, emergency service, and so on. 21CN implied moving all this software to the new "inode" computers that are replacing the old switches; there was absolutely no alternative to selecting Ericsson to do that particular job, and so Ericsson got an exclusive contract.

That was, not so incidentally, the only single-vendor deal for 21CN. BT was otherwise very cautious about relying on any one vendor for the rest of the system. In particular, the core routing network, which sends torrents of digital data over the trunk lines that link the metro nodes, is completely duplicated, with one set of terabit routers from Cisco and the other from Juniper. The rationale was that a virus penetrating the network would shut it down for days, but since each of the duplicate networks uses different software and different security technology, it would be very difficult to compromise both at the same time.