* 22 entries including: evolution & information, North & South Korea, smart "bots" for computer games, GPS phone applications, USAF X-37B spaceplane, DARPA MEMS program, global domination of English, better stoves for developing nations, data mining, futuristic German hotel, ESA Earth Explorer satellite series, and mutant "fancy" goldfish.

* NEWS COMMENTARY FOR MARCH 2009: On 3 March, Sri Lanka's cricket team was in a road convoy in Lahore, Pakistan, that was attacked by twelve masked gunmen. Six police and two others were killed, while seven cricket players and their British coach were wounded. It is an indication of long-running tensions between Pakistan and India that the attack was blamed on India, the belief being that it was retaliation for the attack on Mumbai in November 2008 by a gang of Islamic terrorists that killed more than 170 people. To bolster this conspiracy theory, its advocates point to the fact that the assailants escaped -- good Islamic terrorists of course always conduct suicide attacks. India had also pointedly refused to send its national cricket team to the games, with Sri Lanka's team standing in.

Many Pakistanis are in denial over Islamic terrorism, and in fact it is often believed there that al-Qaeda is a fiction invented by Islam's enemies. In reality, Pakistani intelligence has fingered a home-grown terrorist group named "Lashkar-e-Jhangvi (LeJ)", which has links to the al-Qaeda network and has carried out a number of attacks previously. Pakistani intelligence has captured LeJ operatives who have said under interrogation that they were being trained for attacks on international cricket tournaments. The attack was a low blow: cricket is immensely popular in Pakistan, the Pakistani national team is world-class, and it's a humiliation for Pakistanis to realize that the chances of Pakistan being one of the hosts for the 2011 World Cup games have just evaporated.

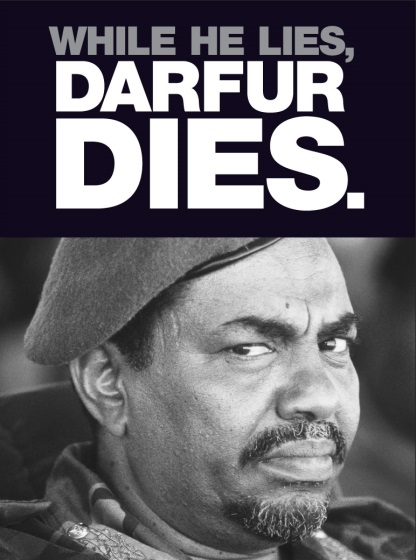

* On 4 March 2009, the International Criminal Court (ICC) in The Hague issued an arrest warrant for Omar al-Bashir, president of the Sudan, citing war crimes and crimes against humanity. This was the first time a sitting head of state had ever been indicted by the ICC. The indictment follows the 2008 decision by the ICC's chief prosecutor, Luis Moreno-Ocampo, to declare all of Darfur a "crime scene" in light of evidence of murders and mass rapes carried out by government-backed "janjaweed" militias.

The actual implications of the exercise are unclear. Of course Bashir denied all charges and refused to cooperate. The 108 governments that signed the Rome Treaty, which created the ICC, are obligated to assist in bringing Bashir to justice, but about a third of the signatories, mostly African and Islamic states, have made it clear they do not approve of the indictment. The UN Security Council remains split on the issue.

Following the indictment, a number of the main Western aid organizations in the Sudan were told to get out of the country immediately. The Sudan had finally accepted, under extreme pressure, a joint United Nations / African Union peacekeeping force. The force, currently 15,000 strong, may face reprisals from the government. Some nations are arguing that the indictment be shelved for the time being, while others say they do not want to promote a "climate of impunity" that would encourage the continuation of atrocities. The hope is that by putting up a wanted poster for Bashir, the ICC will deepen conflicts within the regime and force him out.

The ICC's position is legally troublesome, since the court is only allowed to take on cases when no other authority is in a position to do so. Its past and current investigations have been slow and halting, and it has yet to win a conviction. However, things seem to be looking up for the ICC, most importantly because US President Barack Obama is supportive. The previous Bush II Administration had been downright hostile to the ICC -- the Clinton Administration had signed the Rome Treaty, but President Bush ordered that the US withdraw its signature, insisting that the US would never hand over an American to the court. Congress was not happy about the idea either.

The atrocities in Darfur had made George W. Bush reconsider his position on the matter to an extent, and Barack Obama seems likely to carefully examine measures that the US can take to support the ICC. There is a limit to what the president can do, since the US Constitution makes it clear that no international body can override the US government in decisions regarding American citizens -- but the USA has been heavily supportive of tribunals for Yugoslavia, Rwanda and Sierra Leone. Politics is an art of flexibility, and it seems plausible that some harmonious formula can be determined.

* Regarding the series this month on the two Koreas, in the face of mutual provocations -- upcoming North Korean long-range missile launches on one side, and joint South Korean / American military exercises on the other -- the North Korean government demonstrated its usual civilized style by saying that it could not guarantee the safety of South Korean airliners passing through its airspace.

About 30 international flights a day usually pass through North Korean airspace to and from the South. Passenger planes normally leave Seoul for the eastern United States by swinging north over the Sea of Japan to follow the Korean coastline towards Russia and then towards Alaska. The military exercises are an annual affair and Pyongyang always protests, but the protests are not usually accompanied by barely veiled hints of violence.

Diplomatic relations between North Korea on one side and South Korea, Japan, and the USA on the other are currently at a low, and normal is not all that good. A South Korean government official responded in blunt terms: "Threatening civilian airliners' normal operations under international aviation regulations is not only against the international rules, but is an act against humanity." Somewhat more dryly, the US State Department described the North's announcement as "distinctly unhelpful."

* SMART ADVERSARIES (2): Smart bots make for better video games, but for a game to retain interest the bots also have to get smarter as the game goes on. Game players are very quick to notice predictabilities in the behavior of game bots, and once the players have understood the bots well enough, the game becomes more like a shooting gallery than a challenge. The traditional solution is just to code in some randomness in bot behavior, or gradually raise the "level" of play over time. The better way is for the bots to learn a player's predictabilities in turn and adjust their own play accordingly.
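As a rough illustration of a bot learning a player's predictabilities, here is a minimal Python sketch, not taken from any actual game, that counts which move a player tends to make after each previous move and predicts the most frequent one. The class name and the move labels are hypothetical, and a real game bot would need a far richer model of play.

```python
from collections import Counter, defaultdict


class PatternPredictor:
    """Learns which action a player tends to take after a given previous action."""

    def __init__(self):
        # counts[prev][nxt] = how many times `nxt` has followed `prev`
        self.counts = defaultdict(Counter)
        self.prev = None

    def observe(self, action):
        """Record one player action, updating the transition counts."""
        if self.prev is not None:
            self.counts[self.prev][action] += 1
        self.prev = action

    def predict(self):
        """Return the action most often seen after the last one, or None if unknown."""
        if self.prev is None or not self.counts[self.prev]:
            return None
        return self.counts[self.prev].most_common(1)[0][0]


predictor = PatternPredictor()
for move in ["left", "right", "left", "right", "left", "right", "left"]:
    predictor.observe(move)
print(predictor.predict())  # right
```

A bot using such a predictor could then, for example, position itself to cover the approach the player is most likely to take next, so that a player who falls into a habit gets punished for it.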

Actually, some games featured "machine learning" capabilities a decade ago, but they were unusual exceptions. The "Game-playing, Analytical methods, Minimax search & Empirical Studies (GAMES)" group at the University of Alberta in Edmonton, Canada, has now developed a "learning engine" designated the "Player-Specific Stories via Automatically Generated Events (PaSSAGE)" for general use in game software. As the name implies, PaSSAGE is about storytelling, which is an obviously important feature in video games. Such games usually have a "backstory", which can be very elaborate; in some cases, the story can take on variable forms, and in a few instances, such as the popular SIM series of games, the players can build their own world with its own story. PaSSAGE picks up information about the interests and preferences of players as a game progresses and then adapts the story to suit the players.

PaSSAGE is built around the game engine from NEVERWINTER NIGHTS, a medieval swords & sorcery fantasy game. Using PaSSAGE, scriptwriters determine only the most general story arc, fleshing it out with a library of possible encounters that might happen to the player's avatar. The software builds up a "dossier" on a player over multiple games and adjusts the gameplay accordingly: a player who likes to fight will get more combat, a player who wants to amass wealth will get more chances to obtain jewels or other rewards. The software maintains a history of the evolution of the gameplay to ensure that the "story" remains consistent over time.
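The dossier idea can be sketched in a few lines of Python. This is a hypothetical illustration, not PaSSAGE's actual code: the encounter names, the play-style labels, and the simple scoring rule are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical encounter library: each encounter is tagged with the play style it rewards.
ENCOUNTERS = {
    "bandit ambush": "fighter",
    "dragon lair": "fighter",
    "merchant caravan": "treasure_hunter",
    "hidden vault": "treasure_hunter",
    "riddling sage": "talker",
}


class PlayerDossier:
    """Accumulates evidence about a player's preferred style across games."""

    def __init__(self):
        self.style_scores = Counter()  # evidence per play style
        self.history = []              # encounters already used, kept for story consistency

    def record(self, style, weight=1):
        """Note an observed choice, e.g. record("fighter") when the player starts a fight."""
        self.style_scores[style] += weight

    def next_encounter(self):
        """Pick the unused encounter whose style has the most evidence behind it."""
        unused = [e for e in ENCOUNTERS if e not in self.history]
        if not unused:
            return None
        # max() keeps the first of any tied candidates, so ties fall back to library order.
        best = max(unused, key=lambda e: self.style_scores[ENCOUNTERS[e]])
        self.history.append(best)
        return best


dossier = PlayerDossier()
dossier.record("treasure_hunter")
dossier.record("treasure_hunter")
dossier.record("fighter")
print([dossier.next_encounter() for _ in range(3)])
# ['merchant caravan', 'hidden vault', 'bandit ambush']
```

Keeping the `history` list is a crude stand-in for the story-consistency bookkeeping described above: an encounter that has already happened is never offered twice.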

Machine learning can also be used to define bot tactics, which traditionally have been "hard-coded" in most games. In 2005, Pieter Spronck and his colleagues at the University of Tilburg in the Netherlands demonstrated this capability using NEVERWINTER NIGHTS. The GAMES team at the University of Alberta has been following up on Spronck's experiment, with team members currently working with a multiplayer online game named COUNTER-STRIKE, which pits a group of terrorists against a team of antiterrorist commandos, with characters controlled either by a player or by the computer.

So far, that project has produced a formal system for analyzing and classifying a team's opening moves. That may not sound like much, but it wasn't a trivial task since the positions and actions in COUNTER-STRIKE are far less constrained than they are for, say, a game of chess. The system has been used to analyze extended logs of tournament-level COUNTER-STRIKE play. The next step is to figure out how the bots can learn from the log information to develop their own tactics. An MIT group is working on similar technology for THE RESTAURANT GAME, with the objective of allowing bots to use logs of play to learn how to speak and act believably in social settings. [TO BE CONTINUED]

* EVOLUTION & INFORMATION (4): As discussed in the previous installment, as far as contemporary information theory is concerned, the amount of information in a string depends only on how disorderly the string is. Given a bitmap image, the compression ratio depends only on the randomness of the image; it doesn't depend on what the image is a picture of -- the image could be of anything, even an incoherent image of pure noise. The compression algorithm is completely indifferent to the meaning of the image; information theory says nothing about it. How could it? What sensible, broadly applicable scheme could be implemented to quantify the meaning of an image? Would a picture of the Mona Lisa get a high value and a pornographic cartoon get a low value? What about the information content of a book? Who hasn't read a book that talks a lot and says nothing?
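The indifference of a compressor to meaning is easy to demonstrate with Python's standard `zlib` module. The sketch below, an illustration made up for this purpose, scrambles a passage of text by replacing every byte with another through a fixed one-to-one substitution (XOR with an arbitrarily chosen constant, 0x5A). The scrambled text is unreadable, but its pattern of repetitions is unchanged, and so it compresses to essentially the same size as the original.

```python
import zlib

text = (b"The compression algorithm is completely indifferent to the meaning of the data. "
        b"It only sees which byte patterns repeat and how often. ") * 20

# Scramble every byte with a fixed one-to-one substitution (XOR with 0x5A).
# The result is gibberish, but which bytes repeat, and where, is exactly the same.
scrambled = bytes(b ^ 0x5A for b in text)

plain_size = len(zlib.compress(text, 9))
scrambled_size = len(zlib.compress(scrambled, 9))
print(len(text), plain_size, scrambled_size)
```

Any small difference between the two compressed sizes comes from how the compressed file records its coding tables, not from the fact that one version means something and the other doesn't.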

The critics have been gradually realizing that the definitions of information as provided by information theory don't provide a good basis for their criticisms, and they have been increasingly trying to define concepts such as "functional information" or "complex specified information" that factor in what the information actually does, as opposed to what might be called the "raw information" measured by KC theory. From the point of view of mainstream information theory, the idea that the "code" of the genome contains "information" while a snowflake does not is nonsense. When confronted with this, the critics reply: "We're not talking about raw information. We're talking about functional information."

Such a notion of "functional information" makes a certain amount of obvious intuitive sense, and it's certainly possible to provide measures of it -- but only for very specific cases and for narrowly specific purposes. It may not be impossible to come up with a generally applicable scheme of quantifying meaning that isn't arbitrary and mathematically useless, but people have tried and so far not had much luck. Without any real ability to quantify "functional information", it is difficult to show that it is lost or gained in various processes, and there is no way to establish any basis for a "Law of Conservation of Information".

It might seem obviously true that the instruction sheet for putting together a model kit with a hundred parts contains more "information" than one for putting together a kit with ten parts. We can easily say that we should end up with longer instructions, say ten times longer, for the more complicated kit, and in fact we could specify the "functional information" in the instructions for model kits as, say, the number of individual panels in the instructions.

However, the number of panels on the instruction sheet is just an ad-hoc statistic of interest only to model builders, and even at that it doesn't say anything about the relative elaboration of the individual panels or the clarity of the instructions. Does a brief instruction sheet that's clear have more or less information than a long instruction sheet that's incomprehensible? There's nothing in the number of panels that permits comparison to anything but the instructions for another model kit, much less any fundamental rule of information theory. The most that information theory could say about the information content of an instruction sheet is the size of a digitized copy of it after compression, whatever the instruction sheet actually says.

Even in the much more specific case of a digital system, it is hard to define functional information, since it implies some way of assigning "functional weight" to bits of information. Consider as a hypothetical example the navigation system of a drone, an unmanned aircraft, and imagine that it uses a 16-bit binary number to provide its directional bearing -- north, south, east, west. A 16-bit binary number can store values from 0 to 65,535, and so it could give the bearing down to less than a 180th of a degree. The interesting thing is that if the rightmost, least-significant bit of that value is changed, the direction of the drone is only changed by an all but unnoticeable fraction of a degree -- but if the leftmost, most-significant bit is changed, it changes the value by 32,768 and sends the drone flying in entirely the opposite direction. In other words, even in a strictly digital system, changing a bit of information may have no visible effect, or it may have a drastic effect. There's no neat mapping between bits and functionality.
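The arithmetic can be checked with a short Python sketch. The convention that 0 means north and that values increase clockwise is an assumption made for the example; the hypothetical drone in the text doesn't specify one.

```python
FULL_CIRCLE = 65536  # a 16-bit value spans 0..65535, mapped onto 360 degrees


def to_degrees(value):
    """Convert a raw 16-bit bearing to degrees (assumed: 0 = north, increasing clockwise)."""
    return value * 360.0 / FULL_CIRCLE


def flip_bit(value, bit):
    """Return the value with one bit inverted, as a single-bit error would do."""
    return value ^ (1 << bit)


heading = 16384                                # 90 degrees, due east under the assumed convention
lsb_error = to_degrees(flip_bit(heading, 0))   # least-significant bit flipped
msb_error = to_degrees(flip_bit(heading, 15))  # most-significant bit flipped
print(to_degrees(heading), lsb_error, msb_error)  # 90.0 90.0054931640625 270.0
```

The same one-bit error shifts the bearing by about 0.0055 degrees in one position and by exactly 180 degrees in the other.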

Along similar lines, how much information is there in a computer program? Just as with the model kit instructions, it seems intuitively obvious that the more complicated the task being performed by the program, the more information there is in the program. However, once again this is very difficult to nail down. One problem is that there's no known general way to assign a value to the functionality being implemented -- how can we say that one program does twice as much as another unless they do much the same things?

We can certainly compare programs that, say, perform the same mathematical function to show which program is more compact and works faster, but how could we compare two programs that perform entirely different tasks? How could we assign values to the functionality implemented? Even on an informal basis, it's very hard to determine any general relationship between the size of a program and what it accomplishes. A program that draws a real-world object of any particular elaboration tends to be long-winded, but elaborate iterative "fractal" patterns can be drawn by very simple programs -- and the detail of elaboration of the fractal pattern can be multiplied by changing the number of iterations, without changing the size of the program in the slightest.
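A classic example is the Koch curve, which can be described by a single rewriting rule: replace every line segment "F" with "F+F--F+F", where "+" and "-" are turns of 60 degrees. The Python sketch below only generates the drawing instructions rather than plotting them; the point is that the same few lines produce four times as many segments with each extra iteration.

```python
def koch(iterations):
    """Expand the Koch-curve rule F -> F+F--F+F the given number of times."""
    instructions = "F"  # "F" = draw a segment, "+"/"-" = turn 60 degrees left/right
    for _ in range(iterations):
        instructions = instructions.replace("F", "F+F--F+F")
    return instructions


for n in range(5):
    print(n, koch(n).count("F"))  # segment counts: 1, 4, 16, 64, 256
```

Raising the iteration count from 4 to 10 would produce over a million segments while the program itself stays exactly the same size.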

To make matters worse, different computer languages and algorithms might be able to do a particular job much more easily than others. The most we can sensibly say is that the information in any given program is represented by its size after compression, which has no strong relationship to what the program does.

It's tricky enough to define the "functional information" in a computer program; trying to do so for a biosystem that has only the faintest resemblance to a digital system is simply nonsense. The whole notion of "functional information" remains a fantasy for the time being, with all attempts to define it ending up as either ad-hoc measures or tail chases. Going back to the comparison of the genome with a snowflake, the notion that the genome has "functional information" seems plausible at first, but it turns out to be a red herring. Aside from elaboration, the exact same laws of physics and chemistry apply in both cases, and there is no real dividing line, no "magic barrier", between the two.

The critics sound very confident when they speak of "functional information" or "complex specified information" and the "Law of Conservation of Information", but the reality is they simply made them up. They are little more than sophisticated-sounding rephrasings of flimsy traditional attacks on MET, have no basis in mainstream information theory, and have not been backed up by credible arguments. [TO BE CONTINUED]

* UBIQUITOUS GPS: In its February 2009 issue, WIRED magazine had an interesting survey of applications that make good use of cellphones with Global Positioning System (GPS) location capabilities:

* X-37B TO SPACE: The US Air Force has been in space from the beginning of the space race, and now operates an extensive constellation of satellites to support US military operations. Although it might have seemed logical for Air Force "blue suiters" to have also pursued a "spaceplane" -- a winged space vehicle with more resemblance to an aircraft than a satellite, to support space operations -- the service effectively gave up on the concept after the cancellation of the X-20 Dyna-Soar crewed spaceplane project in the early 1960s.

Now things have come full circle. As reported in AVIATION WEEK ("Higher And Faster" by Craig Covault, 4 August 2008), the USAF plans to launch an experimental robot spacecraft, the "X-37B Orbital Test Vehicle (OTV)", on an Atlas 5 booster from Cape Canaveral this year or the next. Although the X-37B will be launched like any other spacecraft, it will glide back to a runway for landing, to be serviced in no more than three days for a new mission. The Air Force sees the X-37B as the prototype for the "Space Maneuvering Vehicle (SMV)", an operational robot spaceplane that could perform a wide range of space missions, such as surveillance and rapid deployment of microsatellite constellations in a crisis. Air Force brass have said little about long-range fast-reaction strike, but it is clearly an option.

The project has complicated roots. It was initiated by the US National Aeronautics & Space Administration (NASA) in the late 1990s. The original X-37 spaceplane was envisioned as a testbed for future spacecraft designs, with the vehicle sent into space as a shuttle payload. Boeing was the prime contractor, with the company contributing funding; the Air Force Research Lab (AFRL) was also a "minority contributor" to the effort.

An unpowered subscale glide demonstrator, the "X-40A", was built and drop-tested from a carrier aircraft beginning in 1998. NASA and Boeing did work on a full-scale X-37 demonstrator and performed similar drop tests, but in 2004 NASA passed the program on to the Defense Advanced Research Projects Agency (DARPA). The AFRL remained involved; in 2006, the USAF finally decided to take ownership of the effort, under the direction of the Air Force Rapid Capabilities Office (AFRCO). DARPA and NASA remained as "minority contributors".

The X-37B has a bullet-shaped fuselage, stubby low-mounted "double delta" wings, and a vee tail. It has a launch weight of about 5,000 kilograms (11,000 pounds), a length of about 8.8 meters (29 feet), a wingspan of about 4.6 meters (15 feet), and a height of 2.9 meters (9.6 feet). It will be launched inside a payload shroud. Once deployed in orbit, it will open up a small payload bay and deploy a solar array for space power.

The X-37B has an orbital maneuvering system using storable hydrazine and nitrogen tetroxide propellants; it also features advanced thermal protection tiles and carbon-carbon materials to protect its aluminum and carbon-composite skin during reentry. The X-37B will be directed by an autonomous flight control system throughout a mission of several weeks, up through reentry and landing. Following its maiden flight, the spacecraft will return to Earth on the shuttle landing strip at Vandenberg Air Force Base in California, with the landing strip at Edwards AFB to be used as a backup.

Ironically, the Air Force is working on its own spaceplane just as NASA is planning to phase out the space shuttle. There is no commitment to a second flight of the X-37B at the present time; whether further development to an operational SMV seems justified will depend to a large degree on the results of the initial flight.

* DARPA DOES MEMS: There's been considerable interest in recent years in the development of tiny flying and crawling robots for military applications, with the US Defense Advanced Research Projects Agency (DARPA) being one of the leaders in the effort. One of the issues with such "microbots" is that tiny robots require comparably tiny payloads. As reported in an article in THE GUARDIAN in the UK ("Nanotechnology Goes To War" by David Hambling), DARPA isn't ignoring the need for "micro electromechanical systems (MEMS)", with one of the five branches of the agency's "Microsystems Technology Office" fully dedicated to the task.

DARPA isn't a newcomer to MEMS development either, with the agency having been a prime mover in the development of "lab on a chip" chemical analysis systems in the early 1990s. A number of US startup companies picked up that technology and ran with it to develop more powerful, more compact, and cheaper biological analysis tools. Now DARPA is expanding the horizons of MEMS research, with work in progress to develop specific new MEMS technologies:

Over the longer run, DARPA wants to build complete and self-contained miniaturized systems. DARPA organizational literature describes "matchbook-size, highly integrated device and micro-system architectures", including "low-power, small-volume, lightweight microsensors, microrobots and microcommunication systems". Much of the effort is to integrate different components so that "electronic, mechanical, fluidic, photonic and radio/microwave technologies" all work together on the same chip.

DARPA isn't ignoring the need to power its MEMS systems, with work underway on tiny combustion engines and devices that obtain energy from sunlight, motion, or other environmental inputs. DARPA researchers are even developing a "micro isotope power source", a tiny atomic battery with a volume of less than a cubic centimeter and a power output of 35 milliwatts.

DARPA has had its failures, but the agency is widely respected by industry and the military. Its charter is to take on high-risk investigations that neither industry nor the armed services themselves could afford to fund, and its successes, such as the internet, show that overall the money has been well invested. DARPA's experimental MEMS systems may seem like science-fiction today, but in a few decades we may well take them for granted.

* SMART ADVERSARIES (1): Artificial intelligence (AI) researchers have long been interested in software that's smart enough to play games with human users, chess being the most significant example. As reported by IEEE SPECTRUM ("Bots Get Smart", by Jonathan Schaeffer, Vadim Bulitko, and Michael Buro, December 2008), it's not all that surprising once pointed out that video-game designers have a strong interest in AI, seeking to develop ever more intelligent computer adversaries for their games.

Game designers have always sought more credible characters, or "bots", for their games but have traditionally focused more on their appearance. However, the bots also need to be able to carry on plausible conversations, plan their actions, find their way around their virtual environments, and learn from mistakes. Traditionally, game designers haven't had the capability to make their bots very smart and could do little more than "fake it" with a handful of tricks.

The idea of applying AI concepts to video games actually came out of academia. In 2000, John E. Laird, a professor of engineering at the University of Michigan, and Michael van Lent, now chief scientist at Soar Technology, in Ann Arbor, Michigan, published a paper that suggested video games as an AI "killer app". Not only would video game development boost resources for AI research, but the AI technologies implemented in games would have a very wide range of possible application elsewhere. Purists might have turned up their noses at the idea, but others found it intriguing.

Consider the game "FIRST ENCOUNTER ASSAULT RECON (FEAR)", developed by Monolith Productions of Kirkland, Washington, and released in 2005. It achieved a degree of success; more significantly, it was one of the first video games to incorporate serious AI technology. The AI of FEAR was loosely derived from a classic AI application, the "Stanford Research Institute Problem Solver (STRIPS)", developed by Richard E. Fikes and Nils J. Nilsson, both now at Stanford University in California.

STRIPS was introduced in 1971. The general idea was to define one or more goals along with a set of possible actions, with the software assessing the actions and determining the sequence required to achieve the goals. In FEAR, the planning engine kept track of the virtual environment and determined which actions by bots were allowed. Once actions were completed, the environment and the allowed actions list were modified accordingly.
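The STRIPS approach can be sketched in Python: each action lists the facts it requires (preconditions), the facts it makes true, and the facts it makes false, and a search finds a sequence of actions that reaches the goal. The soldier actions and fact names below are hypothetical, and the search is a plain breadth-first search chosen for brevity, not necessarily the search method used by STRIPS or FEAR.

```python
from collections import deque
from typing import FrozenSet, NamedTuple


class Action(NamedTuple):
    name: str
    pre: FrozenSet[str]     # facts that must hold before the action
    add: FrozenSet[str]     # facts the action makes true
    delete: FrozenSet[str]  # facts the action makes false


def act(name, pre=(), add=(), delete=()):
    return Action(name, frozenset(pre), frozenset(add), frozenset(delete))


def plan(start, goal, actions):
    """Breadth-first search for the shortest action sequence making every goal fact true."""
    start = frozenset(start)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for a in actions:
            if a.pre <= state:  # action allowed in this state
                nxt = (state - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [a.name]))
    return None  # goal unreachable with these actions


SOLDIER_ACTIONS = [
    act("draw_weapon", add={"armed"}),
    act("reload", pre={"armed"}, add={"loaded"}),
    act("move_to_cover", add={"in_cover"}),
    act("fire", pre={"armed", "loaded", "in_cover"}, add={"target_down"}, delete={"loaded"}),
]

print(plan(set(), {"target_down"}, SOLDIER_ACTIONS))
# ['draw_weapon', 'reload', 'move_to_cover', 'fire']
```

Nothing in the action list says "reload before firing" as a scripted response; the ordering falls out of the preconditions, which is why this style of planning can cope with situations the designers never spelled out.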

The soldier bots in FEAR were assigned goals such as patrolling, killing the player's avatar, and taking cover to protect their own virtual lives. Each different kind of bot was assigned its own set of possible actions to achieve these goals. One advantage of this approach was that it eliminated the need to define specific actions in response to specific events: the FEAR bots could react sensibly even to scenarios the game designers had never imagined. In fact, the planning system in FEAR gave its bots a surprising appearance of intelligence even though they were only following simple sets of predefined actions, for example cooperating to set up ambushes for the player's avatar. [TO BE CONTINUED]

* EVOLUTION & INFORMATION (3): What Claude Shannon was trying to do in establishing information theory was to determine how big a message could be sent over a communications channel. He had no real concern for what the message was; if somebody wanted to send sheer gibberish text over a communications channel, that was from his point of view the same as sending a document. Noise was simply information other than the message that was supposed to be sent over the channel. The amount of information in the message depended only on the patterning of the symbols that made it up. The content of the message -- its "function" or "meaning", what it actually said -- was irrelevant.
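Shannon's measure can be illustrated with a short Python function that computes the entropy, in bits per symbol, of a message's symbol frequencies. This is a simplified sketch: it treats each symbol independently and ignores their order, which Shannon's full theory does take into account. Note that it gives the same value to any two messages with the same letter counts, meaningful or not.

```python
import math
from collections import Counter


def entropy_bits_per_symbol(message):
    """Zeroth-order Shannon entropy: sum of p * log2(1/p) over the symbol frequencies."""
    n = len(message)
    counts = Counter(message)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


for msg in ["aaaaaaaa", "abababab", "abcdabcd", "listen", "silent"]:
    print(f"{msg!r}: {entropy_bits_per_symbol(msg):.3f} bits/symbol")
```

The very regular "abcdabcd" scores 2 bits per symbol, as high as any string over four equally common letters, which is why this simple measure is only a first approximation; "listen" and "silent" score identically, since the measure knows nothing about which of them is a word.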

In KC theory, the amount of information was defined as the size of the best compression of this information, along with the size of the programs needed to handle the compression. The more structured or nonrandom the information was, the more it could be compressed -- for example, if the information was very repetitive, it could be compressed considerably. If it was highly random, in contrast, it would not compress very well.
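Kolmogorov complexity itself can't be computed in general, but the size of a file after compression by a real compressor gives a practical upper bound on it. This Python sketch uses the standard `zlib` module to compare a highly repetitive byte string with a string of pseudorandom bytes of the same length; the length and the seed are arbitrary choices, and `randbytes` needs Python 3.9 or later.

```python
import random
import zlib

N = 100_000
repetitive = b"ab" * (N // 2)
noise = random.Random(42).randbytes(N)  # pseudorandom bytes; randbytes needs Python 3.9+

for label, data in [("repetitive", repetitive), ("random", noise)]:
    size = len(zlib.compress(data, 9))
    print(f"{label:>10}: {N} bytes -> {size} bytes ({size / N:.1%})")
```

The repetitive string shrinks to a tiny fraction of its size, while the random bytes come out about as large as they went in.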

Notice that in this context, "information" can be "nonrandom" or "random" -- "information" and "random" are not opposed concepts. In addition, "messages" and "noise" are simply information, the first wanted and the second not, and either can be "nonrandom" or "random" as well.

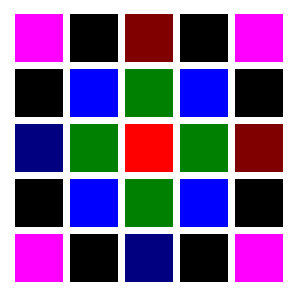

For an example of information theory in action, consider the PNG image-file format used on the internet, which achieves compression by first running each row of pixels through a simple "prediction filter" that takes advantage of its similarity to neighboring pixels, including those in the row above, and then compressing the result with a general-purpose scheme named "DEFLATE" -- more elaborate than RLL, but exploiting redundancy in much the same spirit. Converting an uncompressed image file, such as a BMP file, to PNG can result in a much smaller file that will download faster from a website over an internet communications channel. However, the amount of compression depends on the general nature of the image. A simple structured image, for example consisting of some colored squares, is highly nonrandom and gives a big compression ratio -- a casual way of putting this is that the image contains a lot of "air" that can be squeezed out of it. A very "busy" photograph full of tiny detail is much more random and doesn't compress well. An image consisting of completely random noise, sheer "static", compresses even more poorly. Consider the following image file containing a simple pattern:

-- and then a file containing a "busy" photograph of a flower bed:

-- and finally a file containing sheer meaningless noise:

All three of these image files are full color and have a resolution of 300 by 300 pixels. In uncompressed BMP format, they all are 263 kilobytes (KB) in size. In compressed PNG format the first file, with the simple pattern, is rendered down to 1.12 KB, less than half a percent of its uncompressed size -- a massive compression, not surprising since the image is mostly "air". The second file, with the flower bed, compresses poorly, being squeezed only down to 204 KB, 78% of its uncompressed size. The third file, with the noisy pattern, does worse, compressing to 227 KB, 86% of its uncompressed size. It might seem that the third file wouldn't compress at all, and for perfectly random data that is essentially right. A random sequence will include chance repetitions, in just the same way that it's nothing unusual to roll a die and get two or more sixes in a row, but such repetitions turn up only as often as chance predicts, and on average no compression scheme can exploit them. Since the third file did compress to 86%, its noise evidently wasn't perfectly random, leaving a little redundancy to squeeze out. Random information can sometimes be compressed, just not very much.
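Why random data resists compression can be made precise with a simple counting argument. A lossless compressor must map different inputs to different outputs, and there are $2^n$ bit strings of length $n$ but fewer strings that are shorter:

$$\sum_{k=0}^{n-1} 2^k = 2^n - 1 < 2^n$$

So at least one string of length $n$ can't be shortened at all. More generally, the strings of length at most $n - c$ number

$$\sum_{k=0}^{n-c} 2^k = 2^{n-c+1} - 1 < 2^{n-c+1}$$

so fewer than a fraction $2^{1-c}$ of all $n$-bit strings can be compressed by $c$ bits or more. For $c = 8$, saving a single byte, that is less than one string in 128.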

In any case, the bottom line is: which of these three images contains the most information? As far as KC theory is concerned, it's the one full of noise. The intuitive reaction to that idea is to protest: "But there's no information in the image at all! It's pure gibberish!"

To which the reply is: "It is indeed gibberish, but that doesn't matter -- or to the extent that it does matter, it has lots of information because it is gibberish. The information content of the image is simply a function of how laborious it is to precisely describe that image, and it doesn't get much more laborious than trying to describe gibberish." [TO BE CONTINUED]

* SCIENCE NOTES: As reported in THE ECONOMIST, dogs make a fascinating subject for studies of genetic analysis. Not only has human selective breeding managed to distort dogs almost out of recognition from their wolf ancestor -- dogs are actually more closely related to wolves than coyotes are, though it would be hard to see that in a Pekingese -- but their inbreeding makes identifying genes relatively simple, and their short generation times and large litters make them good lab subjects.

Elaine Ostrander of the US National Human Genome Research Institute has acquired expertise in the dog genome, using it to help nail down cancer genes paralleled in the human genome and to detail the dog family tree. In a recent paper she described how simple gene changes have accounted for many of the distinct features of dogs.

For example, the size of the Portuguese water dog is governed by the gene for "insulin-like growth factor 1", and she believes it plausible that the same gene controls size in other dogs. Similarly, short legs -- a condition referred to as "chondrodysplasia" or more commonly "dwarfism", and exhibited by breeds like corgis and dachshunds -- are the result of the reactivation of a "broken" gene. Chromosomes are littered with broken genes; in the case of corgis and similar dogs, a "jumping gene" element popped into the fossil gene and reactivated it.

Ostrander's paper also pointed out that the wide variations in dog fur -- short, long, and wiry, with some breeds featuring "furniture" like mustaches -- are largely accounted for by combinations of differences in only three genes. The conclusion of the paper is that a considerable amount of difference in form and function can be caused by surprisingly minor changes in the genome. Ostrander emphasized the practical utility of understanding dog genetics as well, suggesting that an understanding of dog dwarfism might cast light on the same condition in humans.

* In news from LIVESCIENCE.com, a fish named the "barreleye" has unique eyes. It has a transparent head with two leaflike structures buried in it; they are actually eye structures that can be pivoted to point up or to the front. It also has two organs in front that look like eyes at a distance, but which are really smell organs. It appears the barreleye likes to scavenge food from siphonophores, the group of jellyfish-like colonial organisms best known from the Portuguese Man o' War. Siphonophores have long trailing tendrils armed with stinging cells that make stealing the prey caught in them difficult, but the barreleye has a number of adaptations to allow it to get away with the trick -- most spectacularly the heavily shielded eyes.

* Another LIVESCIENCE.com article from a few months back reported on a close relative of the barreleye, the "brownsnout spookfish", which has even more bizarre eyes. In the depths of the Pacific where it lives, a kilometer below the surface, there is little light, and such light as there is tends to come from bioluminescent markings on the fish around it.

While the spookfish looks like it has four eyes, in fact it only has two, each of which is split into two connected parts. The top part points upwards, giving the spookfish a view of the ocean, and potential food, above. The bottom part, which looks like a bump on the side of the fish's head, points down. These "diverticular" eyes, as they are called, are unusual in that they use a mirror to make the image. Scallops have mirror-based eyes, but they are not known in any vertebrates except for the spookfish.

Spookfish have been known for 120 years, but it wasn't until live specimens were observed in action recently that the unusual nature of the fish's eyes became apparent. Images of the fish from below showed a reflection from the lower part of the eyes that tipped off researchers. The lower part of the eyes uses a mirror surface to reflect light to the common retina of the upper and lower eye. It seems plausible that the spookfish acquired this adaptation because it helped give warning of attacks from below.

* SPEAK ENGLISH PLEASE: It is no surprise that, as reported by THE ECONOMIST'S rotating European columnist "Charlemagne", European nations are not entirely happy about the ever-increasing global predominance of the English language. In practice, however, they are finding it hard to resist the tide, with the European press setting up websites with English and local language versions. The well-known German weekly DER SPIEGEL has led the way in creating a network of English-language news websites, with major publications in the Netherlands and Denmark signing up, as well as talks in progress with newspapers in Spain and even France, where anti-English sentiment is as strong as it gets -- a "Prix de la Carpette Anglaise (English Doormat Prize)" is handed out every year to those perceived as toadying up to the linguistic invader.

Outside the DER SPIEGEL network, major newspapers from all over Europe have English-language websites. The motive is not to toady up to English-speakers; it's to address neighbors in a language they all understand. English also means access to international advertisers, who know they are reaching the broadest possible market in a linguistic sense, and makes interviews with world leaders much easier.

The end result is the rapid erosion of the European Union policy of "mother tongue plus two" -- citizens are encouraged in effect to learn English and something that isn't English. Europeans born before World War II are just as likely to speak English, German, or French -- but 15-to-24-year-olds are five times as likely to speak English as any other foreign tongue, with 60% of that group speaking English "well or very well". Once they have English, any other language is usually just a luxury.

This is not an unalloyed triumph for English-speakers, however, since as mentioned above the major objective is not to communicate with native English-speakers, but to communicate with people whose native tongues are not English. It allows Poles and Italians and Dutch and Finns to hold sensible conversations. An official of the Dutch news media shrugs over the English issue, calling English merely "an instrument", not "a surrender to a dominant culture." Any language could have been "the instrument" -- English just ended up being the one.

Indeed, the pan-European conversations in English are of no great interest to native English speakers, who prefer their own news media. Britons account for only 5% of DER SPIEGEL's traffic to English-language websites, and the UK's dailies are not even all that interested in European affairs, focusing more on light sensational material. Foreign reports are heavily biased towards tales of British tourists and expatriates in trouble, a genre nicknamed "Brits in the shit". However, it should be noted that 50% of DER SPIEGEL's English-language traffic does come from North America.

To be sure, it's parochial and obnoxious that English-speakers are showing ever less interest in learning a second language, with a decline from an already low level. However, Philippe van Parijs, a Belgian academic, sees that as perfectly rational and inevitable. At EU meetings, what language do they use? English. Even if the meeting is dominated by Francophones, there's no sense in conducting a conversation that can't be followed by the minority that doesn't speak French. Learning a second language ends up being a luxury to Anglophones, and they'd have to be able to speak that second language very well to compete with communicating in English.

However, there is even more irony in the dominance of English, in that Anglophones are regarded as the hardest to understand in multinational conversations: they talk too fast and they use colloquial expressions that nobody follows. A retired French businessman named Jean-Paul Nerriere has written a book to suggest that "international English" is becoming a distinct language of its own, which he calls "globish". He describes it as consisting of a vocabulary of only about 1,500 words, with conversations depleted of humor, metaphor, abbreviation, and anything else that could cause cross-cultural confusion. One speaks slowly and in short sentences. Ultimately, it seems Anglophones will have to become bilingual after all, knowing not only their own flavor of English but the stripped-down variant known as globish. The balance will in the end be restored, at least to a degree.

ED: The notion of globish is easily understood by anyone who's ever tried to communicate over email with someone who writes in another language, using notoriously braindead machine translation software. It took me a while, but I finally figured out the protocol. I get an email in, say, Spanish, I translate online, and I reply as best I can figure out. I reply in English, the only Spanish being a link to the translation software webpage -- I've learned that as a rule the Spanish-speaker will continue to write in Spanish even given access to translation software, which can be irritating if I've translated my reply into Spanish. Requiring translation on both ends maintains the balance.

The first part of my reply says: "Please use short sentences. Please use simple words. Please do not use informal expressions." Think like a machine, make it as easy for the idiot translation software as possible. If they don't follow that advice and just keep on writing as though I were fluent in Spanish, the next reply is: "I do not understand. Please use short sentences. Please use simple words. Please do not use informal expressions." OK, sport, you can play along or we can stop here, your choice.

I usually don't have to do that twice to get them to play along. I've been astounded to be able to hold sensible conversations with people when we don't understand a single word in common. Of course, if someone emails me in, say, Serbo-Croatian, there's not much I can do about it -- it's hard to find a utility that can translate it.

* BUILD A BETTER STOVE: As reported in THE ECONOMIST ("Fresher Cookers", 6 December 2008), about half of the world's population does its cooking with fuel such as wood, dung, and coal. It might seem that there would be a market for improved stoves to burn such materials, but in reality in many parts of the world, the stove is still at a Stone Age level of refinement. A better stove is not just a matter of nicety, either. The World Health Organization (WHO) estimates that toxic emissions from cooking stoves lead to 1.6 million premature deaths a year, half of them children under the age of five. Studies show that stoves contribute heavily to maladies such as lung cancer, respiratory disease, and cataracts.

Researchers have invested some effort into the problem, but it turns out to be more difficult than it looks. Initially, the focus was on stoves that burned more efficiently to reduce deforestation, but now the goal is to reduce toxic emissions produced by the stoves. There has also been a belated realization that the proper design of such stoves requires a good understanding of how poor folk actually use their stoves.

The basic physical problem is to ensure that the stoves get the right amount of air to perform optimum combustion. It's not just a question of getting enough air; too much air cools the fuel and reduces combustion efficiency as well. The traditional "three stone fire" used in many parts of the world does a very poor job of combustion. Even relatively modern stove designs have poorly insulated combustion chambers, allowing heat to leak out instead of contributing to efficient burning. Envirofit, an organization developing cookstoves for India with backing from the Shell Foundation, has developed a cookstove using carburetion, with chimneys that draw air in through precisely designed inlets. Another modern cookstove, the "Oorja", developed by British Petroleum and the Indian Institute of Science, has a built-in, battery-powered fan to direct heat to wood pellets in the combustion chamber, improving efficiency.

And then there is the problem of ensuring proper ventilation -- not such an issue if cooking is done outdoors, as it often is in sunny undeveloped countries, but very troublesome if a stove is used indoors in structures that do not include chimneys. Add to this the problem of building a stove that poor folk can afford but that will stand up to abuse -- an issue complicated by the reality that the stoves have to be made of locally-available materials by resident fabricators.

Finally, there is the question of ensuring that the stoves are compatible with local foods and cooking habits. Early efforts to introduce modernized cookstoves were naive, falling down over a lack of field testing. In the refugee camps of Darfur, for example, dough for the staple bread, known as "assida", had to be stirred vigorously, and the stoves provided simply fell over. The lesson has been learned, with lab research now accompanied by extensive field trials. Says one researcher: "You don't get what you expect -- you get what you inspect."

* TWO KOREAS (3): Few believe that North Korea has a future. The problem is that nobody has any idea of how long it can continue to shamble around as a malevolent zombie -- and as long as it does so, it remains a potential threat.

The two Koreas are still technically at war, and Pyongyang is quick to rattle the saber at Seoul. North Korea has a huge army, particularly relative to its population. However, most of its weapons are obsolete, and it is an open question how many of them are even still in working condition. The new Russian state has no interest in militarily backing North Korea, and the Chinese have made it clear that they do not feel obligated to come to North Korea's defense. The US has 30,000 troops in South Korea; their numbers will come down in the near future, but only because the South Koreans have been beefing up their military and the US forces are less needed -- and both the US and South Korea insist the military alliance between the two countries remains strong.

If North Korea attacked South Korea, the North Koreans would be crushed by South Korean and American firepower. However, the attackers could do an immense amount of damage before they were put out of business, particularly since the North Korean regime has stockpiled chemical and biological weapons, along with a rudimentary nuclear weapons stockpile and an arsenal of medium-range missiles to deliver them. South Korea has no nukes, but the Americans have declared they will respond in kind if North Korea goes nuclear, limiting the ability of North Korea to intimidate the region.

Unfortunately, as long as North Korea has nukes, it still presents a menace, and Pyongyang knows this. Nukes may not be a very useful weapon as such, but they have proven a marvelous bargaining tool. Since 2003, America, Russia, China, Japan, and the two Koreas have engaged in the "six-party process" to persuade North Korea to give up its nuclear arsenal in exchange for bribes such as food and fuel. US hawks blast the talks as propping up a thug regime; those engaged in the talks have no illusions about what they're dealing with but say some progress is being made, and ask the hawks for alternatives. The nasty truth is that the regime needs to be propped up, since as bad as it is, its abrupt collapse would be absolutely calamitous. In recent years, South Korea has taken a conciliatory and helpful tone with North Korea. South Korean President Lee Myung-bak has adopted a somewhat harder line, but still the general hope of all those dealing with Pyongyang is that, somehow, reason will prevail and North Korea will be guided toward the light in a process of gradual change for the better.

Even those advocating such an approach know perfectly well that events may not be kind to it. Rumors of Kim Jong Il's health problems have created a rumble of concern, though nobody is predicting massive change if he dies or is incapacitated. There was considerable speculation that things would change when his iron-fisted father Kim Il Sung died in 1994, since few thought that Kim Jong Il, noted for his playboy lifestyle and cruel whimsies, could fill his shoes. The reality was that the ruling class was dedicated to the Kims and the regime survived, though it has fallen on tougher times.

Nowadays, nobody's willing to read too much into rumors from North Korea. One reason is that few really want to face the prospect of what would have to be done if the regime finally imploded. This is not so much out of a refusal to face reality as a simple understanding that such an event would overwhelm all capability to deal with it.

* In the meantime, South Korea continues its efforts to achieve parity with the rest of the rich world. The economic system is in need of liberalization, and the country needs to pay more attention to energy and environmental issues. As mentioned earlier, democratic processes need some polish as well. Given that nobody knows when the status quo with North Korea is going to change in a significant way, the South Koreans have every reason to make sure their own house is in order, and try not to worry too much about what might happen in the worst case.

Still, beyond the horizon of immediate difficulties and threats, there's a simple realization that there should be one Korea, not two, that this clumsy relic of the Cold War needs to be put to rest. One Korea, not divided against itself and working as a whole, would be a more significant player on the world stage. Unfortunately, how that state of grace might be achieved remains anyone's guess. [END OF SERIES]

* EVOLUTION & INFORMATION (2): Making sense of the failings of the supposed "Law of Conservation Of Information" requires an understanding of definitions from mainstream information theory. The roots of information theory can be traced back to Claude Shannon (1916:2001), an electrical engineer and mathematician who was employed by AT&T Bell Laboratories. After World War II, Shannon was working on the problem of how much telephone traffic could in theory be carried over a phone line. In general terms, he was trying to determine how much "information" could be carried over a "communication channel". The question was not academic: it cost money to set up phone lines, and AT&T needed to know just how many lines would be needed to support the phone traffic in a given area. Shannon was effectively laying the groundwork for (among other things) "data compression" schemes, or techniques to reduce the size of documents, audio recordings, still images, video, and other forms of data for transmission over a communications link or for storage.

Shannon published several papers in the late 1940s that essentially established information theory. He created a general mathematical model for information transmission, taking into account the effects of noise and interference. In his model, Shannon was able to define the capacity of a communications channel for carrying information, and assign values for the quantity of information in a message -- using "bits", binary values of 1 or 0, as the measure, since binary values could be used to encode text, audio, pictures, video, and so on, just as they are encoded on the modern internet.

A message size could be described in bits, while the capacity of the communications channel, informally known as its "bandwidth", could be described in terms of bits transferred per second. Not too surprisingly, Shannon concluded that the transfer rate of a message in bits per second could not exceed the bandwidth of the communications channel. Shannon somewhat confusingly called the actual information content of a message "entropy", by analogy with thermodynamic entropy -- though the link between the two concepts is tenuous and arguable. It might be better informally described as just the amount of information.
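To make "entropy" a bit more concrete, here is a minimal Python sketch of the simplest version of Shannon's measure. It looks only at how often each symbol appears in the message, a "zero-order" model chosen here for simplicity. The function names are made up for illustration.

```python
import math
from collections import Counter

def shannon_entropy_bits(message: str) -> float:
    """Average information per symbol in bits: H = sum(p * log2(1/p)),
    where p is each distinct symbol's relative frequency in the message."""
    total = len(message)
    return sum((n / total) * math.log2(total / n) for n in Counter(message).values())

def total_information_bits(message: str) -> float:
    """Entropy per symbol times the number of symbols in the message."""
    return shannon_entropy_bits(message) * len(message)

for msg in ["AAAAAAAA", "ABABABAB", "ABCDEFGH"]:
    print(f"{msg}: {shannon_entropy_bits(msg):.3f} bits/symbol, "
          f"{total_information_bits(msg):.1f} bits total")
```

Run as-is, it reports 0, 1, and 3 bits per symbol for the three sample strings. Note that "ABABABAB" still rates a full bit per symbol even though it's obviously patterned. Counting symbol frequencies alone ignores the order of the symbols, which is one reason a more general notion of information is useful.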

Noise, in his model, was not distinct from a message -- they were both information. Suppose Alice is driving cross-country from the town of Brownsville to the town of Canton. Both towns have an AM broadcast radio station on the same channel, but they're far enough apart so that the two stations don't normally interfere. Alice is listening to the Brownsville station as she leaves town, but as she gets farther away, the Brownsville station signal grows weaker while the Canton station signal gets stronger and starts to interfere -- the Canton broadcast is "noise". As she approaches Canton, the Canton broadcast predominates and the Brownsville broadcast becomes "noise". To Alice, the message is the AM broadcast she wants to listen to, and the unwanted broadcast is "crosstalk", obnoxious noise -- not categorically different from and about as annoying as the electrical noise that she hears on her radio as she drives under a high-voltage line.

As far as Shannon was concerned, the only difference between the message and noise was that the message was desired and noise was not; both were "information", and there was no reason the two might not switch roles. In Shannon's view, message and noise were simply conflicting signals that were competing for the bandwidth of a single communications channel, with increasing noise drowning out the message.
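The article doesn't give a formula, but the textbook way to put a number on noise "drowning out" a message is the Shannon-Hartley theorem. It states that a channel of bandwidth B, measured in hertz, with signal-to-noise power ratio S/N can carry at most C = B log2(1 + S/N) bits per second. Note that "bandwidth" here is in hertz, the engineering sense, not the informal bits-per-second sense used above. The sketch below is a minimal Python illustration. The 3,000 Hz figure is just an assumed, roughly voice-grade line, not a number from the text.

```python
import math

def channel_capacity_bps(bandwidth_hz: float, signal_power: float,
                         noise_power: float) -> float:
    """Shannon-Hartley limit in bits per second: C = B * log2(1 + S/N)."""
    return bandwidth_hz * math.log2(1 + signal_power / noise_power)

# Hypothetical 3,000 Hz line: the signal stays fixed while the noise grows.
for noise_power in [0.001, 0.01, 0.1, 1.0]:
    capacity = channel_capacity_bps(3000, 1.0, noise_power)
    print(f"noise power {noise_power:>5}: at most {capacity:,.0f} bits per second")
```

As the noise power rises toward the signal power, the ceiling on usable capacity falls steadily. When the two are equal, the line carries at most 3,000 bits per second.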

* Shannon's mathematical definition of the amount of information is along the lines of certain data compression techniques -- "Huffman coding" and "Shannon-Fano coding", two different methods that work on the same principles. His scheme involves taking all the "symbols" in a message, say the letters in a text file; counting up the number of times the symbols are used in the message; assigning the shortest binary code, say "1", to the most common symbol; and successively longer codes to decreasingly common symbols. Substituting these binary codes for the actual symbols produces an encoded message whose size closely approximates the actual amount of information in the message.
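The coding scheme described above can be sketched in a few dozen lines of Python. This is a minimal Huffman coder, not Shannon-Fano coding, which splits the symbol list top-down instead of merging it bottom-up. The sample message "ABRACADABRA" and all function names are arbitrary choices for illustration.

```python
import heapq
from collections import Counter

def huffman_code(message: str) -> dict[str, str]:
    """Build a Huffman code: common symbols get short codes, rare ones long codes."""
    counts = Counter(message)
    if len(counts) == 1:
        # Degenerate case: a single distinct symbol still needs one bit.
        return {next(iter(counts)): "0"}
    # Heap entries: (frequency, unique tie-breaker, {symbol: code so far}).
    heap = [(n, i, {sym: ""}) for i, (sym, n) in enumerate(counts.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two least common subtrees, prefixing their codes with 0 and 1.
        n1, _, codes1 = heapq.heappop(heap)
        n2, _, codes2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in codes1.items()}
        merged.update({s: "1" + c for s, c in codes2.items()})
        heapq.heappush(heap, (n1 + n2, next_id, merged))
        next_id += 1
    return heap[0][2]

def encode(message: str, code: dict[str, str]) -> str:
    return "".join(code[ch] for ch in message)

def decode(bits: str, code: dict[str, str]) -> str:
    """No code is a prefix of another, so bits can be read off one symbol at a time."""
    reverse = {c: s for s, c in code.items()}
    out, current = [], ""
    for b in bits:
        current += b
        if current in reverse:
            out.append(reverse[current])
            current = ""
    return "".join(out)

text = "ABRACADABRA"
code = huffman_code(text)
bits = encode(text, code)
print(code)
print(len(bits), "bits, versus", 8 * len(text), "bits as plain 8-bit characters")
assert decode(bits, code) == text
```

For "ABRACADABRA", this gives "A" a one-bit code and the four rarer letters three-bit codes, for 23 bits in all versus 88 bits as plain 8-bit characters. The Shannon entropy of the message works out to about 22.4 bits. Huffman coding is guaranteed to come within one bit per symbol of that figure, and here it comes within a fraction of a bit in total.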

The Shannon definition of information is useful but not as general as might be liked, so two other researchers, the Soviet mathematician Andrei Kolmogorov (1903:1987) and the Argentine-American mathematician Greg Chaitin (born 1947) came up with a more general (and actually more intuitive) scheme, with the two men independently publishing similar papers in parallel. Their "algorithmic" approach expressed information theory as a problem in computing. It crystallized in a statement that the fundamental amount of information contained in a "string" of information is the length of the shortest combination of data and program that permits a computing device to generate that string.
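In the standard textbook notation, which is not notation used in this article, the same statement reads as follows. U is a fixed universal computer, p ranges over programs (with any data they need built in), and |p| is the length of p in bits. The second line is the usual "invariance" result: switching to a different universal computer V changes the answer by at most a constant that does not depend on the string s.

```latex
K_U(s) = \min\{\, |p| \;:\; U(p) = s \,\}

\left| K_U(s) - K_V(s) \right| \le c_{UV} \quad \text{for every string } s
```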

To explain what this means, Kolmogorov-Chaitin (KC) theory defines strings as ordered sequences of "characters" of some sort from a fixed "alphabet" of some sort -- the strings might be a data file of roman text characters or Japanese characters; or the color values for the picture elements (picture dots, "pixels") making up a still digital photograph; or a collection of elements of any other kind of data. As far as the current discussion is concerned, the particular type of characters and data is unimportant.

The information in a string has two components. The first component is determined by the total quantity of information encoded in that string. This quantity of information is dependent on the randomness of the string; if the string is nonrandom, with a structure that features repeated characters or other regularities, then that string can be compressed into a shorter string. The information is the length of the smallest string into which the original string can be compressed.
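The KC information can't actually be computed in general, since no program can be sure it has found the shortest possible description. Real compressors therefore only give an upper bound on it. A minimal Python sketch using the standard zlib module shows the effect: a patterned file shrinks drastically, while random bytes don't shrink at all. The sample data is invented for illustration.

```python
import os
import zlib

def compressed_size(data: bytes) -> int:
    """Size in bytes after zlib (DEFLATE) compression at maximum effort."""
    return len(zlib.compress(data, 9))

structured = b"GGGGXXTTTTT" * 1000        # 11,000 bytes with an obvious pattern
random_data = os.urandom(len(structured))  # 11,000 bytes with no pattern at all

print("structured:", len(structured), "->", compressed_size(structured), "bytes")
print("random:    ", len(random_data), "->", compressed_size(random_data), "bytes")
```

The exact byte counts vary with the zlib version and, for the random data, from run to run, which is why no specific output is listed. The random data typically comes out slightly larger than it went in, thanks to the compressor's own overhead.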

In effect, given for example a data file containing some text and assuming a given compression scheme, the KC information can be effectively thought of as the smallest file that could possibly result from compressing the uncompressed file. If the file contains completely random strings, for example "XFNO2ZPAQB4Y", then it's difficult to get any compression out of it. However, if the strings are structured, for example "GGGGXXTTTTT", they can be compressed -- for example, by listing each character prefixed by the number of times it's repeated, which in this case results in "4G2X5T". This is an extremely simple data compression technique, known as "run-length encoding (RLE)", but more elaborate data compression schemes work on the same broad principle, exploiting regularities -- nonrandomness -- in a file to reduce the size of the file. The KC information gives the absolute minimum possible size of the file. Real-world data compression schemes don't do quite that well, but an effective text-file compression scheme can cut the size of a typical text file in half without losing any information.
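The run-length scheme described above takes only a few lines of Python. This sketch rests on one assumption the text doesn't spell out: the input contains no digits, since the toy format uses digits for the repeat counts. That is why the usage note below drops the "2" and "4" from the random string in the text.

```python
import re
from itertools import groupby

def rle_encode(s: str) -> str:
    """Replace each run of a repeated character with its count plus the character.
    Assumes s contains no digits, since digits are reserved for the counts."""
    return "".join(f"{len(list(run))}{ch}" for ch, run in groupby(s))

def rle_decode(s: str) -> str:
    """Read a count followed by a character, and output the character that many times."""
    return "".join(ch * int(count) for count, ch in re.findall(r"(\d+)(\D)", s))

packed = rle_encode("GGGGXXTTTTT")
print(packed)               # 4G2X5T
print(rle_decode(packed))   # GGGGXXTTTTT
```

Feed it a string with no repeats, such as "XFNOZPAQBY", and the "compressed" version, "1X1F1N1O1Z1P1A1Q1B1Y", is twice as long as the original. Run-length encoding only pays off when there are runs to exploit.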

As mentioned, this conclusion involves the assumption of a particular compression scheme and glosses over the second component of the KC information of a string, defined by the size of the smallest program -- algorithm -- to convert the string. To convert the string "GGGGXXTTTTT" to the string "4G2X5T" requires a little program that reads in the characters, counts the number of repetitions of each character, and outputs a count plus the character. To perform the reverse transformation, a different little program is required that reads a count and the character, then outputs the character the required number of times. The sum of sizes of the compressed string and the programs gives the information. Smarter programs using a different compression scheme might be able to produce more compression, but a smarter program would also generally be a bigger program: You can't get something for nothing. [TO BE CONTINUED]
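The "data plus program" bookkeeping can be sketched in Python. Two assumptions are made purely for illustration. First, the decoder's size is measured in characters of Python source; KC theory instead measures program length on a fixed universal machine, and a different language would shift the numbers. Second, the hypothetical decoder below is merely small, not provably the smallest. It is kept as source text so its size can be counted along with the compressed data.

```python
# A hypothetical, deliberately tiny run-length decoder, kept as source text
# so that the size of the "program" part can be counted along with the data.
DECODER_SOURCE = (
    "import re\n"
    "def decode(s):\n"
    "    return ''.join(c * int(n) for n, c in re.findall(r'(\\d+)(\\D)', s))\n"
)

namespace: dict = {}
exec(DECODER_SOURCE, namespace)   # turn the source text into a working function
decode = namespace["decode"]

def description_length(compressed: str) -> int:
    """Characters of compressed data plus characters of decoder program."""
    return len(compressed) + len(DECODER_SOURCE)

for original, compressed in [("GGGGXXTTTTT", "4G2X5T"),
                             ("G" * 400 + "X" * 200 + "T" * 500, "400G200X500T")]:
    assert decode(compressed) == original
    print(len(original), "raw characters ->", description_length(compressed),
          "characters of data plus decoder")
```

For the 11-character string from the text, the decoder's own size swamps any saving. For an 1,100-character string of long runs, data plus decoder comes to a little over a hundred characters. You can't get something for nothing, but with enough regularity the fixed cost of the program gets paid back.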

* This last February was an active month for space shots:

-- 02 FEB 09 / OMID -- An Iranian Safir-2 booster put the "Omid (Hope)" satellite into orbit. It was the first spacecraft launched by Iran. It was described as a research and telecommunications satellite. The launch site was not disclosed, though no doubt foreign intelligence has mapped it using surveillance satellites.

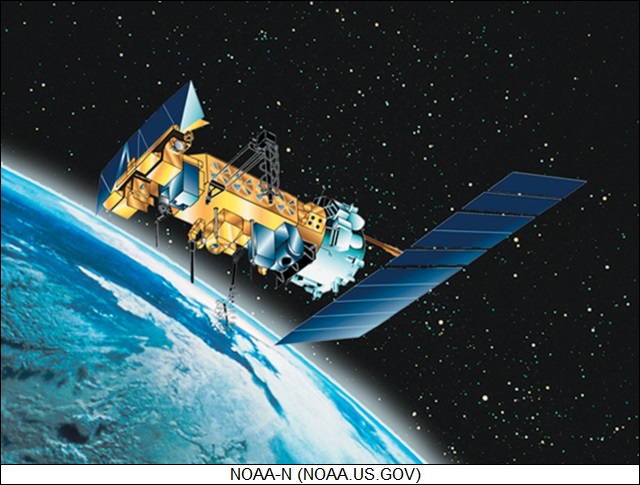

-- 06 FEB 09 / NOAA-N PRIME (NOAA-19) -- A Delta 2 7320 booster was launched from Vandenberg AFB in California to put the "NOAA-N Prime" low-orbit weather satellite into space. It was placed in a Sun-synchronous orbit, crossing the equator at 1400 local time on each orbit. It alternated with a European METOP satellite that crossed in the morning. It was renamed "NOAA-19" after delivery to orbit.

NOAA-N Prime was built by Lockheed Martin and had a launch mass of 1,420 kilograms (3,130 pounds). Its payload included:

Secondary payloads included the "Advanced Data Collection System (ADCS)", provided by the French CNES space agency to relay data from buoys, balloons, and remote ground stations; and a Canadian-built COSPAS-SARSAT rescue beacon relay system.

NOAA-N Prime was the final member of a series that traces its way back to the initial TIROS series of civil weather satellites of the 1960s. It was the 16th in its own series, tracing back to 1978. It had been involved in a factory accident in 2003, when it fell over on its mount and was badly damaged; most of its systems had to be replaced, but since it was the last of the line there were spares available for use. It was to be replaced by the NPOESS joint military-civil low-orbit weather satellite system.

-- 10 FEB 09 / PROGRESS M-66 -- A Soyuz-U booster was launched from Baikonur in Kazakhstan to put the "Progress M-66" unmanned tanker-freighter spacecraft into orbit on an International Space Station (ISS) resupply mission. It was the 32nd Progress launched to the ISS and was also known as "Progress 32P". Progress M-66 docked with the Pirs docking module on the ISS on 13 February. It replaced Progress M-01M, which had been launched in late November 2008, undocked on 5 February 2009, and re-entered on 8 February.

-- 11 FEB 09 / EXPRESS AM44 & MD1 -- A Proton M Breeze M booster was launched from Baikonur to put the "Express AM44" and "Express MD1" geostationary comsats into orbit for the Russian Satellite Communications Corporation (RSCC). Express AM44 was built by Reshetnev, a Russian aerospace company formerly known as NPO PM, while Express MD1 was built by Khrunichev; both spacecraft had communications payloads built by Thales Alenia Space.

Express AM44 had a launch mass of 2,530 kilograms (5,580 pounds) and carried a payload of 16 Ku-band / 10 C-band / 1 L-band transponders. It was placed in the geostationary slot at 11 degrees West longitude, replacing the old Express A3 satellite to provide video and data services to users across Russia, former Soviet states, Europe, Asia, and Africa.

Express MD1 was the first of a new series of smaller communications satellites to be launched for RSCC. The satellite had a launch mass of 1,140 kilograms (2,515 pounds) and had a payload of 8 C-band / 1 L-band transponders. It was placed in the geostationary slot at 80 degrees East longitude to increase communications capacity for government and private users. Government communications support included secure channels.

-- 11 FEB 09 / HOT BIRD 10, NSS 9, SPIRALE x 2 -- An Ariane 5 ECA booster was launched from Kourou in French Guiana to put two geostationary comsats, "Hot Bird 10" and "New Skies Satellite (NSS) 9", plus two French "SPIRALE" experimental missile launch warning satellites into orbit.

The Eutelsat Hot Bird 10 was a direct-to-home TV broadcast satellite. It was built by EADS Astrium and based on the Eurostar E3000 platform, with a launch mass of 4,880 kilograms (10,760 pounds) and a payload of 64 Ku-band transponders. Hot Bird 10 was initially placed in the geostationary slot at 7 degrees West longitude to replace the decade-old Atlantic Bird 4 spacecraft to broadcast TV programs into the Middle East. It was later moved to 13 degrees East longitude, joining its identical sister satellites Hot Birds 8 and 9 to beam TV and radio shows to homes across Europe, North Africa, and the Middle East.

The SES New Skies NSS 9 satellite was built by Orbital Sciences and was based on the Star 2 comsat platform, with a launch mass of 2,230 kilograms (4,920 pounds), a payload of 44 C-band transponders, and a design life of 15 years. The comsat was placed into the geostationary slot at 177 degrees West longitude to provide communications between Asia and North America for government, commercial and maritime customers. It replaced the NSS 5 comsat, launched 11 years previously.

The two SPIRALE microspacecraft, designated "SPIRALE A" and "SPIRALE B", were acquired by the French Ministry of Defense and carried infrared sensors to evaluate systems and procedures for an operational missile early warning satellite system. The name was derived from the French acronym for "Preparatory System for IR Early Warning." They were built by Alcatel Space as a subcontractor to EADS Astrium and were based on the Myriade smallsat platform, designed by the French CNES space agency. They had a launch mass of 120 kilograms (265 pounds) each; they were placed in a highly elliptical orbit, ranging from low Earth orbit up to the geostationary belt.

-- 24 FEB 09 / ORBITING CARBON OBSERVATORY (FAILURE) -- An Orbital Sciences Taurus XL booster was launched from Vandenberg AFB to put the NASA "Orbiting Carbon Observatory (OCO)" into near-polar Sun-synchronous orbit. OCO was built by Orbital to observe the carbon cycle in the Earth's atmosphere, mapping the globe every 16 days. It was to join a constellation of five other environmental observation spacecraft in the NASA-led "A-Train". The previous two A-Train spacecraft, Cloudsat and Calipso, were launched in collaboration with the Canadian and French space agencies in 2006. Unfortunately, the booster's payload shroud failed to separate and the OCO was a loss.

-- 26 FEB 09 / TELSTAR 11N -- A Zenit 3SLB booster was launched from Baikonur to put the Telesat Canada "Telstar 11N" geostationary comsat into orbit. Telstar 11N was built by Space Systems / Loral and was based on the Loral 1300 comsat platform. It had a launch mass of 4,010 kilograms (8,840 pounds), carried a payload of 39 Ku-band transponders, and had a design life of 15 years. It was placed in the geostationary slot at 37.5 degrees West longitude, just east of equatorial South America, to provide data services to customers in North America, Western Europe and Africa. The comsat also included an Atlantic Ocean beam to provide mobile broadband services for ships and airplanes on transoceanic routes.

-- 28 FEB 09 / RADUGA 1-9 -- A Proton K Block DM booster was launched from Baikonur to put a "Raduga 1" AKA "Globus" military communications satellite into geostationary orbit. It had a launch mass of 2,300 kilograms (5,070 pounds) and carried a payload of six transponders. It may have actually been a "modernized" Raduga-1M satellite, the previous launch in the series having been the first Raduga-1M. It was the ninth Raduga-1/1M spacecraft to be launched since the first in 1989, and joined three other operational Raduga / Globus comsats in geostationary orbit.

* OTHER SPACE NEWS: On 10 February, the Iridium 33 low-orbit comsat ran into the Russian Cosmos 2251 satellite, a Strela-class military comsat launched in 1993 and out of operation for over a decade. The collision, at an altitude of 800 kilometers (500 miles), spread debris along the orbital paths of the two satellites. Much of the debris was expected to fall to Earth, but much was going to stay in orbit for a long time. The Iridium organization had a spare satellite in orbit to replace Iridium 33 and was able to maintain the network.

* According to AVIATION WEEK, smallsat maker Surrey Satellite Technology Ltd (SSTL) of the UK is now promoting a new low-cost Earth observation satellite, named "Accuracy, Reach, Timeliness (ART)", that could map the Earth to a minimum resolution of 60 centimeters (two feet) once every 30 months. ART's target market is geo-portals like Google Earth; the spacecraft will weigh 350 kilograms (770 pounds) and will cost about $50 million USD, a fraction of the price tag for a current commercial Earth-observation satellite. ART would make imagery available for 20 cents per square kilometer, an order of magnitude or two less than the price of current imagery. SSTL is also pushing a low-cost small geostationary comsat platform for nations and organizations on a budget.

* DATA MINING: It's obvious to anyone who spends time online that a good deal of information is being collected on the internet's citizens. What is maybe a little bit less obvious is the potential of linking that information together in a process known as "data mining".

As discussed in an article in THE ECONOMIST ("Know Alls", 27 September 2008), what would the British authorities do if a young chemistry graduate of Muslim background took a menial job at a farm-supplies store? On the face of it, there would be no immediate reason for alarm. Maybe he couldn't find a job and took what he could get to make ends meet. The fact that such a job might give him access to a supply of fertilizer that could be converted into explosives might be a bit worrisome. Worries would start growing if the records then showed that:

Alarm bells might start going off. Intelligence services see the potential of data mining for hunting terrorists and are eager to develop it; in contrast, civil libertarians see the potential for victimizing citizens whose patterns of innocent behavior accidentally happened to add up to something that seemed sinister, and are very worried about it.
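The core mechanism is simply joining records from separate sources on a shared identity and counting how many individually harmless indicators line up. The Python sketch below illustrates that idea under loudly stated assumptions: the two data sources, the person IDs, the indicator rules, and the two-indicator threshold are all hypothetical, invented for this example, and do not describe any real government or commercial system.

```python
from collections import defaultdict

# Hypothetical, individually innocuous records from two separate sources,
# each keyed by the same (invented) person ID.
education = {"p1": "chemistry degree", "p2": "history degree"}
employment = {"p1": "farm-supplies store", "p2": "farm-supplies store"}

# Hypothetical indicator rules; each one looks harmless on its own.
RULES = {
    "chemistry_background": lambda pid: education.get(pid) == "chemistry degree",
    "fertilizer_access": lambda pid: employment.get(pid) == "farm-supplies store",
}

def link_and_flag(person_ids, threshold=2):
    """Join the sources per person and return those who trip >= threshold rules."""
    hits = defaultdict(list)
    for pid in person_ids:
        for name, rule in RULES.items():
            if rule(pid):
                hits[pid].append(name)
    return {pid: names for pid, names in hits.items() if len(names) >= threshold}

flagged = link_and_flag(["p1", "p2"])
print(flagged)  # {'p1': ['chemistry_background', 'fertilizer_access']}
```

Neither record means anything by itself; the flag only appears once the sources are joined. That is exactly why the same mechanism can ensnare an innocent person whose records happen to line up the wrong way.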

In late 2002, press reports described a data mining system being developed by the Pentagon named "Total Information Awareness". Criticism followed, and though the military renamed the effort "Terrorism Information Awareness" to emphasize that the goal wasn't to spy on law-abiding citizens, the US Congress was not impressed and cut funding a year later. Citizens' rights had been protected -- or had they? The effort went on almost unchanged, broken into smaller projects that were kept under wraps.

In fact, much work on government data mining is secret, and what is visible is almost certainly only the proverbial tip of the iceberg. In early 2008, the US Department of Homeland Security announced that it had three efforts in progress to hunt for suspicious patterns in stolen goods. The Bush II Administration was clearly enthusiastic about data mining and in its final months took steps to legalize its use. It seems likely the Obama Administration will act more cautiously, but it would be a poor bet to expect data mining research to disappear.

The US is not the only country involved in data mining work, with efforts also underway in the UK, China, France, Germany, and Israel. Even countries that have no apparent interest in data mining tend to be at least indirectly involved with data mining efforts elsewhere. The European Union has no known collaborative data-mining program in the works, but thanks to an agreement signed with the USA in 2007, airlines flying from the EU to the USA are required to hand over security-screening information about all passengers. Even internally, the EU requires that internet service providers and telecom operators track data on websites visited and calls made by users, though content does not have to be recorded and the data will be handed over to the authorities only on request. The data must be kept for between six months and two years, depending on the member state. Of course, as noted, commercial firms, particularly credit-reporting companies, have long tracked customer activity. They have found intelligence services to be eager customers, and though nobody says much about it, the business of selling commercial customer data to governments is a lively one.

Legal challenges against data-mining procedures have not been very effective. Not only have court decisions tended to favor the data-miners, the laws have not been able to keep pace with improvements in technology. The courts have ruled on what sorts of information can be legally obtained, but haven't seriously considered the implications of integrating information that is legally obtained.

The worry is that even the most conscientious government bureaucracies can be clumsy, fossilized dinosaurs at times, stepping mindlessly on those unlucky enough to get caught underfoot. Technology doesn't change this reality; it simply makes it possible for more people to get stepped on. This is a particular worry in the UK, following several well-publicized bungles in which large amounts of government information were simply lost. Such bungles haven't been a major factor in the USA so far, but there are still worries -- the US Federal Bureau of Investigation (FBI) has a "terrorist watch list" with about a million names on it, and it's getting bigger all the time. The problem is that terrorism is a very rare crime, and it's hard to believe that even one percent of the people on the watch list are actually terrorists or terrorist sympathizers. Once somebody gets on the list, it's very hard to get off it again.
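Why does the rarity of terrorism matter so much? When the thing being hunted is rare, even a very accurate screen flags far more innocent people than guilty ones. The Python sketch below works through that base-rate arithmetic. All of the numbers are hypothetical assumptions chosen for illustration (a population of 60 million, 600 genuine plotters, a screen that catches 99% of plotters and wrongly flags only 1% of everyone else); they are not taken from any real watch list or screening program.

```python
def screening_outcome(population, actual_threats, detection_rate, false_positive_rate):
    """Expected true alarms, false alarms, and precision from screening everyone."""
    innocent = population - actual_threats
    true_alarms = actual_threats * detection_rate      # real threats caught
    false_alarms = innocent * false_positive_rate      # innocent people flagged
    precision = true_alarms / (true_alarms + false_alarms)
    return true_alarms, false_alarms, precision

# Hypothetical inputs, not real data.
tp, fp, precision = screening_outcome(60_000_000, 600, 0.99, 0.01)
print(f"true alarms:  {tp:,.0f}")
print(f"false alarms: {fp:,.0f}")
print(f"share of flagged people who are real threats: {precision:.2%}")
```

Under these assumptions the screen raises about 594 true alarms against roughly 600,000 false ones, so only about 0.1% of the people it flags are genuine threats, even though the screen itself is "99% accurate".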

There's some question whether data mining will even be a very effective tool over the long run. There's a tendency to grab onto a technological solution because it seems slick and easy, but terrorists are smart about weaving their way through a maze of obstacles, and some extremist online forums like to trade tricks about how to obscure patterns of behavior. Data-mining programs try to be discriminating, weeding out "false positives" (misleading noise) from useful leads so the authorities don't waste effort running down blind alleys; if the black hats figure out the criteria the systems use for such discrimination, they can "game" the system.

The Bush II Administration acquired considerable leeway to legally engage in data mining in the wake of the 11 September 2001 attacks on the US. Restrictions were set up in the summer of 2008 when the original authorizations ran out, but civil liberties groups say government power to data-mine citizens still remains very broad and unaccountable, with work in the field cloaked in secrecy. In an age of international terrorism, protecting the abstract rights of citizens tends to be a hard sell in the face of terrorists determined to slaughter people at random.

* FUTURE HOTEL: As reported in an article on BBC.com ("Germans Pioneer Hotel Heaven"), Beeb reporter Steve Rosenberg had the privilege of checking into the "hotel room of the future", developed by the Fraunhofer Institute for Industrial Engineering & Organization as a demonstration of what new technology could do for the hotel experience.

Rosenberg's first reaction on stepping into the room was that he had climbed aboard a UFO. The room was circular, with no square corners -- researchers had found that a boxlike room made guests feel cramped. Adding to the UFO impression, the room was white and crammed with gadgets, with a large bed facing a wide window as though it were the front window of a spacecraft. A smaller wall display was linked to a computer that accepted voice commands. Want the bed to rock? Just ask the computer politely; it answers politely and gently moves the bed back and forth. A guest can change the lighting to red or blue or green, whatever feels more comfortable.

Lounging on the bed was all very well, but having rested a bit, it was time to go to the washroom and clean up. Sensors in the floor turned on lights as Rosenberg made his way into the high-tech bathroom. It didn't seem too futuristic at first glance, but the mirror doubled as a display for the room computer, allowing him to search the web, watch videos, or put up an aquarium screensaver while he bathed. There was a vapor pot pouring out lemon-scented steam, and the jacuzzi featured radiative thermal panels in the walls to keep him warm.

Back in the room, Rosenberg could make use of the mini-bar -- which was actually a mobile robot. And then he could ask the computer on the wall, by then displaying a log-fire screensaver, what was for dinner that night, or when breakfast was served in the morning. Not hungry? Press a button and the room window turns into a wide-screen TV.

Some might be a bit skeptical about the practicality of such an accumulation of indulgent high-tech gadgetry, but the Fraunhofer Institute believes the exercise is not mere fantasy: it points the way to the next generation of luxury hotels, with some of the technologies trickling down to lower-cost hotels. Circular rooms? Maybe not; cheap hotels wouldn't want to waste the floor space. But if they could add a few gadgets at low cost, they might well consider them worth the investment.

* Back in April, Rosenberg similarly visited a high-tech automated restaurant in Nuremberg. Each table was numbered and color-coded, with a touchscreen display; customers would enter their table number and place an order from the menu on the display. The order then went up to the kitchen, and the customers could surf the internet on the display to pass the time while the food was prepared.

Once the food was ready, it was shipped out of the kitchen in containers marked for the particular table and then set out on a mini-rail system. The rails were set up in spirals, with switches to send the proper food to the proper table. The carriers were unpowered since they just had to run downhill. To pay? Use a smart card or, one of these days, a wallet phone. A winner of a dining experience? Maybe, maybe not, but it would certainly be a fun attraction at a theme park.

* In related news, SCIENTIFIC AMERICAN reports that the William Lehman Injury Research Center (WLIRC) in Miami, Florida, has been experimenting with a medical robot designated "RP-7", built by InTouch Technologies INC of Santa Barbara, California. The RP-7 weighs 90 kilograms (200 pounds) and is 1.7 meters (67 inches) tall; it looks a bit like a sleek blue Dalek robot with a video display and camera on top. It rolls over floors on three spherical wheels, with infrared sensors and a bumper to keep it from running into walls or staff. It communicates over a broadband wireless connection.

The RP-7 of course does not carry Dalek death rays, but it also doesn't have any arms or other manipulation tools. It's for telemedicine, intended to allow specialists at other locations to see what they need to see in any ward, from any angle. With the face on the display, the patient can also see the specialist and carry on a conversation.

The RP-7 might seem like a particularly obnoxious gadget, the impression being that it's just another way to pile expense on a US healthcare system that is showing signs of collapse under its own gold-plating -- the RP-7 costs $250,000 USD. However, telemedicine does promise to help reduce costs, and US Army doctors are working with the WLIRC to evaluate the RP-7. The military, with two wars on its hands, needs to deal with "severe trauma" patients and doesn't have all the staff needed to do the job. The RP-7 might help spread scarce expertise to where it's needed.

* TWO KOREAS (2): Although South Korea has its many problems, they seem trivial in comparison with North Korea's. Kim Jong Il's regime is extremely secretive, with foreign visitors kept under strict control, so what is known about the country can only be inferred. The inferences, however, make it clear that North Korea is a wretched land in a state of flux.

Troubles began to pile up during the ghastly 1995-1998 famine that killed up to 4% of the population. Even the North Korean regime couldn't avoid bringing in outsiders -- aid agencies and non-governmental organizations (NGOs) -- to help, though it did so grudgingly and made life difficult for them. Some, like the UN World Food Program (WFP), were kicked out once they had outlived their immediate usefulness, but smaller NGOs that were able to keep a lower profile remained. One such NGO, a South Korean Buddhist organization named "Good Friends", stayed in North Korea and continues to publish low-key bulletins on events there.

The reports indicate widespread hunger and an exodus of North Koreans across the border into China. The danger of trying to flee the country is great, with people hanged if they're caught, but the prospect of death by starvation makes hanging a risk worth taking. The regime is incompetent at both food production and distribution, so rural and urban citizens alike have been forced to flee. In the wake of the original famine a decade ago, about 80,000 North Koreans found refuge in China. Once North Koreans had figured out how to escape, however, the numbers kept rising even after the famine ended, and there are now about half a million North Koreans in the neighboring regions of China.

North Koreans living in China rarely live in luxury there, but even so the difference between their present and former lives is staggering, a shock to those whose only view of the outside world had been through North Korean government propaganda. The window into the outside world obtained by North Koreans in China works both ways, with researchers able to question the refugees about the life they left behind. The picture the research paints makes that life sound sorry indeed, with about a quarter of men and more than a third of women saying a family member died of hunger, and more than a quarter of the total saying they had been arrested. About 90% say they witnessed forced starvation in prison camps; 60% saw people killed by beatings and torture; and 27% had witnessed executions. From a third to half of the refugees are clearly suffering from "post-traumatic stress disorder". Bitterness is common among them, particularly from the belief that international food aid to North Korea was diverted from the starving to support the military and party loyalists. The regime has long regarded about half the population as potentially disloyal, and nowadays that estimate doesn't seem far wrong -- in fact, it may be far too conservative.

The picture of dysfunction painted by the refugees doesn't stop there. The command economy seems to have generally ground to a halt. Such production as takes place ends up on the black market, when the factories haven't been stripped down and sold off piecemeal. In fact, the only part of the economy that is actually working is the black market, with the iron-fisted state forced to concede that without the system of "foraging, barter, and petty trade" the economy would completely collapse. The Chinese border is a particular hotspot of trade, both official and illegal. Some North Korean government officials are said to be making fortunes on the black market.