* 23 entries including: Ronald Reagan's war on terror, a survey of the global oil business, the Curta mechanical calculator, solar power potential, computer games as advertising, barcodes & cellphones, malware for cellphones, BAE Systems Wolfpack sensor network, Tambora eruption of 1815, intelligent clothing, fish farming, cellphones for the developing world, classic farm windmills, chemtrail conspiracy, the internet of machines and products, bogus ABMT cancer therapy, jetliners with robot pilots, Russian problems with radioactive materials, and rejection haiku.

* A SOLAR FUTURE? As discussed in an article on SCIENCEMAG.org ("Is It Time To Shoot For The Sun?" by Robert F. Service, 22 July 2005), the inhabitants of planet Earth currently produce about 13 terawatts (TW) of power, with 85% of that power provided by fossil fuels that spew carbon dioxide into the atmosphere, raising the specter of global warming. Although there is considerable dispute over the global warming threat, nobody can deny that humans are now conducting a large-scale experiment in the global environment whose results are difficult to predict. Fossil fuels are also being depleted in the bargain, with production expected to peak around 2040. Right now, the US is opening two natural gas plants every week, and China is starting up coal-fired plants at a similar rate.

Worse, projections of population and development indicate that 30 TW more power will be needed by 2050. Where will this huge increment of power come from? Even increasing the power supply by 10 TW to 2050 would require construction of 10,000 fission power plants, meaning one new plant would have to come online every other day. Fission power creates troublesome radioactive wastes that are notoriously hard to deal with; fusion power promises to be much cleaner, but nobody has any idea when, or even if, it will be practical.
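As a rough check on the build rate quoted above, the arithmetic can be sketched in a few lines of Python. The plant size of 1 gigawatt is not stated in the article; it is simply what 10 TW spread over 10,000 plants implies. The start date of 1 January 2005 is likewise an assumption, taken from the year of the article.

```python
from datetime import date

def days_per_plant(start, end, plants):
    """Average number of days between plant openings over the window."""
    return (end - start).days / plants

def implied_plant_size_gw(added_tw, plants):
    """Average output per plant, in gigawatts, needed to add `added_tw` terawatts."""
    return added_tw * 1000.0 / plants

# Assumed window: start of 2005 (year of the article) to start of 2050.
interval = days_per_plant(date(2005, 1, 1), date(2050, 1, 1), 10_000)
size = implied_plant_size_gw(10.0, 10_000)
print(f"{size:.1f} GW per plant, one new plant every {interval:.2f} days")
```

Under these assumptions the interval comes out at a little over a day and a half, which is consistent with the article's "every other day".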

Renewable energy sources are another option, but they have limitations. Hydropower has environmental drawbacks as well, and it is hard to see that it can be expanded by any order of magnitude. Wind power is very promising, with costs of $0.05 USD per kilowatt-hour at present, cheaper than any other source of energy except coal and natural gas. However, an estimate of the maximum potential of wind power from the European Wind Energy Association gives its maximum global capability at about 6 TW, more than enough to make the technology worth the investment, but not near enough to meet needs -- though a Stanford University study places its potential as an order of magnitude greater. Biomass, geothermal, and ocean wave energy remain marginal at this time, and it is unclear just how much their use can be increased.

Now a recent report from a US Department of Energy (DOE) workshop makes the case for solar power. Right now, the DOE only spends a bit more than $10 million USD a year on solar power research, a modest sum for the US government. The report only outlines priorities for research and doesn't specify funding levels, but DOE officials are talking about increasing the total to $50 million USD a year.

Solar is currently a $7.5 billion USD industry worldwide and is growing at about 40% a year, but even at that it's a niche player in the power industry. From a theoretical point of view, solar might seem irresistible, since the Sun continuously delivers about 170,000 TW of power to the Earth. A third of that is reflected back into space, but total human power production is a fraction of a percent of what's left over. It would seem like solar energy is pure gold going to waste.

The problem is figuring out how to capture that energy. Few doubt that cost-effective photovoltaic solar cells with efficiencies of at least 10% are within reach, but with that technology, to produce 20 TW about 0.16% of the Earth's surface would have to be carpeted with solar cells. That may not sound like much, but it equates to an area about the size of a large US state or European country, on every continent. Even if all the houses in the US were roofed with solar cells, that would only provide 0.25 TW of power.
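The land-area figure can be unpacked with a short calculation. This is only a sketch: the Earth's total surface area (about 510 million square kilometers, land and ocean together) is a value supplied here, not one given in the article, and the function names are made up for illustration.

```python
EARTH_SURFACE_M2 = 5.1e14  # assumed total surface area of the Earth, land plus ocean

def array_area_km2(surface_fraction):
    """Area in square kilometers covered by a given fraction of the Earth's surface."""
    return EARTH_SURFACE_M2 * surface_fraction / 1e6

def implied_sunlight_w_per_m2(target_tw, area_km2, efficiency):
    """Average sunlight per square meter needed for the array to deliver target_tw."""
    electrical_w_per_m2 = target_tw * 1e12 / (area_km2 * 1e6)
    return electrical_w_per_m2 / efficiency

area = array_area_km2(0.0016)                       # 0.16% of the surface
sun = implied_sunlight_w_per_m2(20.0, area, 0.10)   # 20 TW at 10% efficiency
print(f"array area: {area:,.0f} km^2")
print(f"implied average sunlight: {sun:.0f} W/m^2")
```

On these numbers the arrays would cover a bit over 800,000 square kilometers in total, and the article's figures imply an average of roughly 245 watts of sunlight per square meter falling on the cells around the clock.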

Along with the solar arrays themselves, a power transmission system would have to be built to feed the solar power into the rest of the power grid. That would be expensive but practical. However, storing the power for when the Sun isn't available is a major problem that nobody has really good ideas of how to fix; batteries and flywheels would be a problematic solution on a large scale, and other schemes have their difficulties as well.

Even without considering infrastructure, at present solar is ten times more expensive than wind power, and to complicate matters only about 10% of all energy consumed is used in electrical form, the rest being used in the form of fuels for heating, transportation, and so on. To be sure, houses could be electrically heated, but that's not as efficient as natural gas even when driven by conventional electrical power sources. To be competitive for heating, solar power costs would need to be cut even more drastically.

The DOE study admits that there are indeed major obstacles to wider use of solar power. It remains an attractive possibility, however, not only because there is, on the face of it, no cleaner power technology than solar, but because the technology seems to be at a level where it could be made practical on a large scale with an increment of effort. That increment of effort would have to be made to get solar power into large-scale use by 2020, just in time to provide a basis for growth up to the power infrastructure of 2050.

The size of the "increment" is arguable. DOE officials, as mentioned, are talking about a $50 million USD effort, while some solar advocates, with backing from a few members of US Congress, are talking billions of dollars a year. The reality is that even $50 million USD is a hard sell in a time of skyrocketing budget deficits. However, even those who acknowledge the problems believe it's time to start laying the groundwork for a solar power effort.

* FOOTNOTE -- SOLAR TECHNOLOGY: Although it is possible to generate solar energy by, for example, using mirrors to heat water into steam to drive a conventional power turbine or other thermal generator system, the main drive of solar power technology has been the "photovoltaic cell", which converts sunlight directly into electricity, with no moving parts. Solar cells first became available in the late 1950s, being used as a power source for spacecraft, and since that time they have steadily increased in efficiency and dropped in cost. In Japan, for example, solar power costs have dropped 7% a year from 1992 to 2003. However, to be competitive, solar power costs will have to drop further by a factor of 10 to 100.
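To get a feel for what a 7% annual decline means against a required drop of 10 to 100 times, the compounding can be sketched as follows. The assumption that the 7% rate holds steady indefinitely is purely illustrative and is not a forecast made in the article.

```python
import math

def remaining_cost_fraction(years, annual_decline):
    """Fraction of the starting cost left after compounding a yearly decline."""
    return (1.0 - annual_decline) ** years

def years_to_fall_by(factor, annual_decline):
    """Years needed for cost to fall by `factor` at a constant yearly decline."""
    return math.log(factor) / -math.log(1.0 - annual_decline)

left = remaining_cost_fraction(2003 - 1992, 0.07)
years_10x = years_to_fall_by(10, 0.07)
years_100x = years_to_fall_by(100, 0.07)
print(f"cost in 2003 relative to 1992: {left:.0%}")
print(f"years to fall 10x: {years_10x:.0f}, 100x: {years_100x:.0f}")
```

At a steady 7% a year, costs fall to a bit under half over the 1992-2003 period, and a further tenfold drop would take about three decades; a hundredfold drop would take about twice that.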

Traditional "monocrystalline" photovoltaic cells are based on much the same technology used to produce microprocessor chips. By making monocrystalline solar cells with multiple layers, each sensitive to a different band of light energy, it is possible to achieve conversion efficiencies of up to 30%. Unfortunately, monocrystalline solar cells are comparatively expensive, with multilayer cells so costly that they are really only suited to spacecraft power systems and the like.

New fabrication techniques may reduce the cost of monocrystalline solar cells and increase their efficiency. There has been work in fabricating "nanoparticles", something like ultraminiaturized solar cells, that can be produced cheaply using relatively simple processes, with the nanoparticles massed together to form large-scale devices. "Amorphous" solar cells have also been designed, in which the materials are in a disorderly state instead of arranged as a nice neat crystal; some amorphous cells can even be fabricated as plastic strips. Amorphous solar cells are far cheaper than monocrystalline solar cells, but they also have efficiencies of only a few percent. Advocates believe that amorphous technology can be considerably improved.

Solar cells produce electricity, but cannot directly produce fuel. Researchers have developed light-absorbing catalysts that can break down water into hydrogen and oxygen, with the hydrogen then used as fuel. However, the catalysts have conversion efficiencies of only a few percent, and they tend to be too "touchy" for the real world outside of the lab. Researchers think they can be improved; some researchers think it may even be possible to create solar-driven catalysts to convert atmospheric carbon dioxide into hydrocarbon fuels such as methanol, which would provide fuels compatible with the current transportation system while halting the growth of atmospheric carbon dioxide.

All these technologies can benefit from the use of solar concentrator systems to focus sunlight. Finding materials that can tolerate the very high heat provided by concentrators has been troublesome. Still, researchers are confident that such materials can be found, and that the price of solar power systems will continue to drop as efficiency increases.

* ADVERGAMES: As discussed in an article in THE ECONOMIST ("And Now, A Game From Our Sponsor", 11 June 2005), mass media has been becoming a contradiction in terms over the last few years, with the big broadcast networks first undermined by cable and satellite TV -- and then all of them undermined by the Internet, which has also been busily undermining print media such as newspapers and magazines. This has made life interesting for advertisers, who find themselves trying to figure out the best way to get the most exposure for their money in a fragmenting media market. One consequence of this is a deluge of ads on TV and the Internet, but that approach is somewhat self-defeating, since the end result is a flood of noise that the folk on the receiving end increasingly tune out, to the frustration of everyone concerned. The utopian dream of the advertiser is an ad that people want to see.

Enter the advertising-oriented computer game, or "advergame" for short. The idea of using computer games to promote products isn't entirely new, since "embedded advertising" of products inside computer games has been around for some time. However, such "game adverts" are not all that effective and not in widespread use, though the emerging ability to update Internet-enabled computer games does promise some expansion of this technology in the future.

Only about $20 million USD was pumped into game adverts by US advertising firms in 2004, while about $90 million was pumped into true advergames, in which the game is effectively the ad. One of the early examples was a recruiting tool for the US military named "America's Army", released in 2002 and based on a commercial action game, in which "recruits" can engage in virtual military activities, ranging from training to combat missions. There are now millions of downloaders. For those with a less militaristic bent, this last spring the United Nations World Food Program released "Food Force", in which aid workers fly helicopters, deal with local warlords and village elders, and try to rebuild communities shattered by disasters.

Of course, commercial firms are also into advergames. Dodge's "Race The Pros" allows players to race hot Dodge cars, with current racing scores from the real world downloaded automatically into the game every now and then, and billboards alongside the track pointing to local Dodge dealers -- users enter their postal codes when downloading the game. Coca-Cola, producers of feminine-hygiene products, and many other firms are now using advergames to deliver their message. They are usually simple Macromedia Flash-style games, not anywhere near as elaborate as "America's Army", but cheap to produce (about $25,000 to $50,000 USD).

Advergames tend to stay in circulation a long time, and have far more consumer impact than a 30-second TV ad. Technology may present challenges to advertisers, but it also presents opportunities for those shrewd enough to see them.

* A SURVEY OF OIL (2): Although much can be said about strategies of oil producers, there is an underlying reality to the business: we can't keep pumping oil indefinitely; it has to run out sooner or later. Everybody with sense knows this. The only argument is over whether it will be "sooner" or "later". In 1956 M. King Hubbert, a geologist at Shell Oil, predicted that US oil production would peak in the early 1970s and then go into irreversible decline. He turned out to be almost exactly on schedule, though discoveries of oil in the Gulf of Mexico did end up stretching out the deadline. Now the question is how "Hubbert's curve" applies to global oil supplies. The pessimists think that we are now cresting the top of the curve.

The optimists think that it won't happen until 2030 at the earliest, more like 2050 or even later, and they actually have good reasons for that belief. Economics and technology improvements mean that the supply of oil, though not inexhaustible, is flexible. As the value of oil increases, resources that were thought not worth exploiting become profitable; new technologies also mean that oil once regarded as too much trouble to pump becomes a money-maker. In the 1960s, only about 20% of the oil in reserves was actually pumped; it's up to 35% now, and that means there's still considerable room for improvement.

The optimists also point out that there are still large regions of the world that haven't been surveyed for oil, and that there are oil deposits many kilometers deep in the Earth that are simply too difficult to tap with existing technology. Modern deep-ocean oil rigs are marvels of technology that could hardly have been imagined 50 years ago; another 50 years is likely to bring even further advances, multiplying the practical supply of oil.

However, the majors have not been investing in research at anything like the necessary rate. Cheap oil cut into research and development funding, and now pricey oil has bred the complacent view that R&D is a luxury. Oil service firms like Schlumberger and Halliburton have moved into the vacuum, providing advanced technology to the national oil companies (NOCs) and helping to undermine the majors over the long run. Some of the majors have seen the error of their ways: Exxon is once more plowing big money into R&D.

* The other criticism of the optimistic point of view is that, even if new reserves are located and exploited more efficiently, they still have to run out some day. The day of reckoning may be postponed, but it can't be canceled. Of course, in practice people usually think delaying the inevitable is a good idea, but it still leaves the question of what comes after oil.

The energy shocks of the 1970s led to a global case of nerves over energy supplies, with much fuss over alternative energy, renewable energy, and conservation -- "ARC" for short -- that sometimes resulted in boondoggles. Many conservatives still remember these fiascos, take a dim view of the overstatements of some of the wilder modern Greens, and have a general distrust of ARC. In 2001, US Vice President Dick Cheney, a former boss of Halliburton, defended the Bush II Administration's energy policy by saying: "Conservation may be a sign of personal virtue, but it is not a sufficient basis, all by itself, for a sound, comprehensive energy policy."

The Bush II Administration's policy has focused on development of domestic energy supplies. Critics point out that the US consumes 25% of the world's oil but only has 3% of the known reserves; if that doubled to 6% or even in the wildest optimism tripled to 9%, it still wouldn't give America anything resembling "energy independence". Advocates of ARC also point out that the boondoggles of the 1970s are misleading, that major strides were made in conservation in the late 1970s and early 1980s that had considerable impact. In response to the oil shocks, Europe and Japan introduced energy taxes to encourage conservation. That wasn't politically acceptable in the US, so fuel economy standards were mandated, through the "Corporate Average Fuel Economy (CAFE)" law. In both cases, a period followed in which oil consumption fell while GDPs rose, breaking what had once been assumed to be an iron law between energy use and GDP. That means that there is an effective precedent for ARC and that it shouldn't be simply dismissed out of hand just because some of the fringe likes it, too. In fact, alliances are arising between Greens, who want to tackle global warming, and conservatives, who want energy independence, to push for ARC.

There are actually many useful things that can be done, though no one of them is a magic answer. Public transport is more energy-efficient than cars, but in most cases light rail systems and the like are only practical in dense urban areas where getting around in a car and finding parking is troublesome. Otherwise, everybody wants the independence of having their own vehicle.

Of course, cars can be made more efficient. Technology is much less the issue than political will. In the US, there has been little pressure as of late to improve the energy efficiency of the nation's vehicles. Tightening CAFE would be prudent, but higher energy taxes would very likely be more effective. If gas prices remained high at the pump, people would buy more hybrid cars and automobile manufacturers would be more enthusiastic about introducing new, even more efficient hybrids. Raising gas taxes would certainly be politically troublesome, particularly for an administration that seems very tax-averse, but with the American public currently adjusting, not too grudgingly, to higher prices at the pump and budget deficits rising, there may be no time like the present to give it a shot.

Hybrids would help, but they would only provide an incremental reduction in oil use. Over the long run, alternative fuels are seen as the answer. Biofuels like ethanol seem like the best bet for the time being. To be sure, biofuel ethanol is mostly a crop subsidy right now, with critics claiming it takes more energy to synthesize than it provides, but improvements in biotechnology and processing should make it perfectly effective and a valuable supplement, if not necessarily a replacement, for gasoline.

Natural gas-derived fuels are another intriguing option, but the long-term favorite is hydrogen. Since it can be produced by running electricity through water, it can be generated by almost any energy source, and when it is burned or otherwise recombined with oxygen in a fuel cell the major end product is water again, eliminating the menace of global warming. The difficulty with hydrogen is in distributing and handling the gas, but optimists think those problems can be overcome.

Some of the oil majors are contemptuous of ARC, with an Exxon boss blasting renewable energy as "a complete waste of money" and calling global warming a fraud. However, that attitude seems to be resisting the tide in every sense, and presents Big Oil with the unpleasant possibility that they will be the next Big Tobacco, publicly hounded and harassed by citizens and government alike. Other capitalists are swimming with the tide. Giant General Electric, an outfit that nobody would think a haven of New Age fuzzy-mindedness, includes a broad range of ARC products and services in the company portfolio. The GE wind power division expects to rake in $2 billion in revenues in 2005. The company is also into solar energy and fuel cells -- as well as, to emphasize their commitment to profits and not greenery, clean coal and nuclear power. One GE official says the company is into "a little bit of everything."

Oil will remain the lifeblood of the world's economy for the immediate future. Beyond that horizon, however, there will be another future where oil will be of declining importance, and the fact that there may not be any one technology that takes oil's place doesn't mean that it won't happen. [END OF SERIES]

* REAGAN'S WAR ON TERROR (4): Following American actions in the ACHILLE LAURO hijacking, Ronald Reagan's old bogeyman, Colonel Qaddafi, came back to the forefront. On 27 December 1985, airports in Rome and Vienna were attacked. Twenty people were killed, including five Americans. US intelligence claimed Libya was responsible, and the Americans also suspected Qaddafi had backed the ACHILLE LAURO hijacking. In January 1986, the US Navy went back into the Gulf of Sidra to intimidate Qaddafi. There was an exchange of shots in late March, with two Libyan patrol boats sunk by US Harpoon antiship missiles. Qaddafi decided to retaliate by extraordinary means, ordering his embassies, or "People's Bureaus" as they were called, to conduct terrorist attacks on US targets.

On 5 April 1986, the La Belle disco in West Berlin, a popular hangout for American military personnel, was bombed, killing an American soldier and a Turkish woman, and wounding hundreds of others. Communications intercepts made it absolutely clear who was behind the attack. Investigative journalist Bob Woodward said: "I actually had in one of my books the language of the intercepts. And when you lay it out, it's clear that they promoted this bombing, knew it was going to occur, and then got a thumbs-up report back right after the discotheque had been bombed." [ED: This would later be proven to be a cover story. The Libyans used cryptographic gear from the Swiss Crypto AG firm, which was actually run by the US CIA and German BND intelligence.]

Now the advocates for military action against terrorism in the Reagan Administration had a green light. There was no doubt who was guilty and where the guilty parties were. The result was Operation EL DORADO CANYON, an elaborate set of coordinated air strikes conducted by over 200 aircraft on 14 April 1986. The US Air Force, operating F-111 precision strike bombers from England, hit Tripoli, while Navy strike aircraft hit Benghazi. A Navy pilot described it: "Oh, it was really cool. And then we launched everybody on the flight deck. And I was actually the last guy off, and I was ... just screaming there in the cockpit, just out of frustration. I was afraid I was going to miss it."

He wasn't the only one who thought it was really cool. American TV audiences were treated to images taken on the videorecorders of strike aircraft dropping laser-guided bombs, with targets in the crosshairs going up in huge blasts. The Libyans claimed that 37 people were killed and 93 injured. The strikes missed Qaddafi, but damaged his house. As far as anyone can tell, he was in a state of shock over the raid, and immediately became more careful in his activities.

It was another publicity coup for the Reagan Administration. There were complaints that the Americans had singled out Qaddafi simply because he was an easy target, his erratic actions having left him with few friends who might care if somebody bombed him. On the other hand, with terrorists taking potshots at Americans for years, it was perfectly logical that when the US actually caught someone doing it and had a clear shot back at him, they weren't going to hesitate to take it.

Not everything went so neatly in the matter. On 16 April, three employees of the American University in Beirut, including two Americans and a Briton, were murdered by the Arab Revolutionary Cells, a Libyan-backed Palestinian group associated with the terrorist Abu Nidal. The group announced the murders were in retaliation for EL DORADO CANYON.

The wheels of justice for the La Belle disco bombing turned very slowly. Five suspects were eventually arrested and put on trial in a German court. Verdicts were delivered in 2001, sentencing two of the defendants to 14 years in prison, two to 12 years, and acquitting the fifth.

* Although EL DORADO CANYON had been a flag-waving triumph for the Reagan Administration, policy on terrorism remained basically confused, and soon presented Reagan and his people with their greatest political crisis. On 3 November 1986, Al-Shiraa, a Lebanese Arab-language newspaper, published a report on the secret arms deals between the US and Iran. The ripples from that event spread outward and grew, eventually rocking the Reagan Administration in a massive political scandal. Not only had the government violated its own public statements on refusing to deal with terrorists, but it had used the opportunity to secretly fund a war in Central America.

One of the agendas of the Reagan Administration was the overthrow of the Leftist Sandinista government in Nicaragua. The Sandinistas had overthrown the thuggish Somoza dictatorship during the Carter Administration and set up a revolutionary government. Sandinista leaders had visited with Carter and, in a demonstration of enthusiasm over sensibility, personally abused him at length for past American misdeeds in Nicaragua. Whatever the facts of past history were, Carter wasn't fond of foreign interventions and almost certainly wanted to at least coexist with the Sandinistas, or even come to a mutual understanding with them.

When Reagan came to office, the Sandinistas found themselves confronted with a new president who saw them as puppets of Fidel Castro, the president's other major bogeyman. The Reagan Administration helped fund a loose confederation of Nicaraguan counter-revolutionaries named the "Contras", who began to conduct raids and raise hell in Nicaragua. However, they were not popular in the Democratic-controlled US Congress, with many seeing the Contras as little more than reactionary thugs and bandits. In 1982, Congress passed the Boland Act, restricting funds for the Contras, and in 1984 the act was amended to cut off funds for the Contras almost completely. Since agents of the Reagan Administration were engaged in trading arms with Iran on a covert basis, the logical thing to do was to funnel the payments provided by the Iranians to the Contras, in defiance of Congress.

This was the sort of spook trick that might have been winked at in the 1950s, but in the 1980s everyone involved knew it was subject to the law: DON'T GET CAUGHT. Given a world-spanning conspiracy, organized with a fundamental approach resembling that of a chimpanzee piling boxes on top of each other to reach a bunch of bananas, getting caught was highly probable. People might debate whether the scheme was unethical, but nobody could deny it was stupid. One way or another, the Reagan Administration was highly embarrassed.

The focus came down on National Security Adviser Admiral John Poindexter, who had overseen the funneling of money from the arms deal to the Contras, and his aide, the gung-ho Marine Lieutenant Colonel Oliver North, who had carried it out. They were the target of extended interrogations by an independent prosecutor, Lawrence Walsh, assigned by Congress to investigate the case. The investigation would go on for eight years. Poindexter resigned and North was fired. Former National Security Adviser Robert McFarlane was convicted on obstruction of justice charges, but President George H.W. Bush later pardoned him and several other high officials.

How high the conspiracy went still remains unclear. Reagan was a hands-off manager who delegated a high degree of responsibility to people he trusted, and it was nothing unusual for other presidents to tell subordinates who might well go out of bounds: "Don't bother me with the details."

North claimed that Reagan had full knowledge of the matter, but North had been proven to be a liar, and had no credibility. Reagan insisted that he had not known, and when questioned on suspicious events, replied that he could not clearly recall the details. That response was treated with great skepticism, but would become very plausible within a few years. [TO BE CONTINUED]

* SCANNING BARCODES WITH CELLPHONES: When the cellphone started to make it big in the 1990s, few had any idea of how far the technology would actually go. Using a cellphone as a pocket calculator or pocket computer certainly was an option at the time, but phones capable of being used as a digital music player or digital camera were something of a surprise. A recent ECONOMIST article ("Phones With Eyes", 12 March 2005) suggests that these new capabilities have unexpected benefits as well. The main idea of a camera phone, for example, was to be able to show something to listeners on the other end instead of just tell them about it, but camera phones can do much more. Samsung of Korea has introduced a new phone-camera that can be used as a business-card scanner: the user takes a picture of a business card, then optical character recognition (OCR) software in the phone reads the card and puts the data into an address book. Sanyo of Japan has similarly designed a phone-camera that can read English text and more or less translate it into Japanese.

Such supersmart phones are only following up innovative applications generated by phone-camera users. In Japan, commuters often take pictures of train schedules so they can call up a picture later as a reminder. The Amazon arm in Japan has come up with another clever trick: phone-camera users take a picture of the barcode on a music CD or book, send the picture to Amazon, and automatically get back Amazon's price quote on the item. Japanese consumers can similarly scan the barcode on a package of fish and get back when it was caught, and even get the name of the boat and fisherman.

Japan has pioneered the use of two-dimensional barcodes, which replace the classic one-dimensional striped barcodes with a square grid of dark and light squares to provide more data, such as an Internet address. Japanese business cards may have 2D codes to provide all the relevant information for a smart phone-camera or other scanning device. A poster for a concert may have a 2D code that allows a user to buy tickets, while a music CD might have a 2D code to allow the user to ring up a sample song.
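The basic idea of packing data into a square grid of dark and light cells can be illustrated with a toy sketch. This is not how any real 2D code is laid out: real symbologies add finder patterns, error correction, and masking, none of which are attempted here. The function names are invented for the example, and the Internet address uses a reserved example domain.

```python
import math

def encode_grid(text):
    """Pack the UTF-8 bits of `text` into a square grid (1 = dark, 0 = light)."""
    bits = []
    for byte in text.encode("utf-8"):
        for shift in range(7, -1, -1):
            bits.append((byte >> shift) & 1)
    # Smallest square that holds all the bits; pad the remainder with light cells.
    side = math.isqrt(len(bits) - 1) + 1 if bits else 0
    bits.extend([0] * (side * side - len(bits)))
    return [bits[row * side:(row + 1) * side] for row in range(side)]

def decode_grid(grid):
    """Read the grid row by row and rebuild the original text."""
    bits = [cell for row in grid for cell in row]
    data = bytearray()
    for start in range(0, len(bits) - 7, 8):
        value = 0
        for bit in bits[start:start + 8]:
            value = (value << 1) | bit
        data.append(value)
    # Trailing zero bytes are padding in this toy scheme.
    return bytes(data).rstrip(b"\x00").decode("utf-8")

def render(grid):
    """Draw dark cells as '#' and light cells as '.'."""
    return "\n".join("".join("#" if cell else "." for cell in row) for row in grid)

grid = encode_grid("http://example.com")
print(render(grid))
print(decode_grid(grid))
```

Even this crude scheme shows why the 2D layout carries more: an 18-character address fits in a grid only 12 cells on a side, while a striped code would have to stretch the same bits out along a single line.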

2D barcodes are starting to catch on elsewhere. Semacode, a startup company in Ontario, Canada, has created the "Semacode" system for bus stops in California. Users scan the 2D codes on a bus stop sign, and then get back the time of the next bus arrival. Semacode has also worked with cellphone vendors to conduct promotional "treasure hunts", in which kids hunt down 2D codes posted in various places in order to find a prize. Other possibilities are 2D codes in art galleries or the like to give narratives, or 2D codes on fliers to get more information, and so on.

* CELLPHONE MALWARE: Smart, reprogrammable cellphones are becoming increasingly widespread. Of course, given the malicious ingenuity of hackers and cybercriminals, it is obvious that such reprogrammability leads to security concerns for cellphones. The issue is quickly ceasing to be theoretical. A WASHINGTON POST article from late 2004 revealed that cellphones have now become the latest frontier for designers of malicious software. Gullible cellphone users who downloaded freebie software to provide ringtones and screensavers found out that it disabled their phones, and put little skull-and-crossbones images in place of menu icons.

"Skulls", as it was known, was one of five malicious programs attacking cellphones released in 2004. More are certain to be on the way, now that phones are becoming much more reprogrammable. They are very vulnerable at present, since they have little inherent security and their users are not familiar with attacks. In June 2004, a European gang of hackers who call themselves "29A" released a virus that spread through the Bluetooth short-range wireless network, used by some cellphones to communicate with computer devices. Once it had passed on to other devices, the "Caribe" virus then shut down the Bluetooth interface and cycled the device to run the batteries down.

Some of these attacks appear to be less simple malice than attempts to sound an alarm that much more unpleasant things could happen. One potentially nasty trick that is already making the rounds is falsifying caller ID to phones, a dodge that works with fixed phones as well as cellphones, incidentally. It's not hard to do, and there are actually legitimate reasons to do it -- for example, a salesman on the road may want to prompt a customer to call the home office and not a motel room. However, it can also be used to crack into somebody's voicemail, and a cybercriminal might pose as a bank official to try to con somebody into giving up account information.

Says an official at a Finnish cellphone company: "The nightmare scenario with cell phones is a virus that would delete the contents of your phone, or start calling [a toll number] on its own from the phone or recording every single one of your conversations and sending the recorded conversation somewhere." Lack of standardization in cellphone software does create a barrier to the spread of viruses and the like, and companies are now starting to implement defenses, but it may take a major fiasco to put cellphone security on the map.

* WOLFPACK IN TEST: As discussed in an article in AVIATION WEEK ("Pack Mentality" by Robert Wall & David A. Fulghum, 25 October 2004), during the Vietnam War, the US military implemented a network of ground sensors under the IGLOO WHITE program in order to stop infiltration of supplies and troops from North Vietnam into South Vietnam. The effort wasn't completely successful, but the idea hasn't gone away. Now British Aerospace (BAE) Systems and the US Defense Advanced Research Projects Agency (DARPA) are working on elements of a new battlefield networked distributed-sensor system under the "Wolfpack" research program.

The focus of the Wolfpack is on electronic warfare (EW). In Iraq, insurgents use small short-range handheld radios, relaying messages over long distances. The low-power radios are hard to spot and hard to nail down. The Wolfpack consists of a set of coffee-can sized sensor modules that can be set up by troops from helicopters, dropped by unmanned aerial vehicles (UAVs), or shot from artillery. The network of sensor modules will be able to locate low-power transmitters, eavesdrop on their communications and if necessary jam them, even inserting viruses into enemy computers.

Concepts being investigated in the Wolfpack program include relaying data up to more powerful EW platforms, such as the Grumman EA-6B Prowler jamming aircraft, and using a special-purpose vertical takeoff and landing (VTOL) UAV named the "AirWolf" to deploy the sensors and recharge their batteries. The BAE Systems AirWolf is a ducted-fan drum about 1.2 meters (4 feet) in diameter and with short pivoting wings. BAE Systems hopes to have a demonstrator flying in 2005 and have the vehicle in production by 2009.

The "wolves" will operate in "packs" of five. All the nodes will be identical, with one dynamically assigned to be the "leader" to relay inputs to the greater battle network. The packs will be able to reorganize themselves if a wolf is lost or if circumstances demand a change in operation. High-level software will integrate and sift through the data provided by the Wolfpacks to give battle commanders a "picture" of what is going on. The system should be able to pinpoint emitters to within 10 meters (33 feet), which, probably not by coincidence, is also the "circular error probability" of a GPS-guided weapon.
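The idea of identical nodes with a dynamically assigned leader can be illustrated with a toy model. The following Python sketch is purely illustrative and is not based on any published Wolfpack design: the "lowest surviving node ID leads" rule, the Pack class, and its method names are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

PACK_SIZE = 5  # the article says the wolves operate in packs of five


@dataclass
class Pack:
    """A pack of identical sensor nodes, one of which acts as leader."""
    nodes: Set[int] = field(default_factory=set)
    leader: Optional[int] = None

    def elect_leader(self) -> Optional[int]:
        # Hypothetical election rule: the lowest surviving node ID leads.
        self.leader = min(self.nodes) if self.nodes else None
        return self.leader

    def lose_node(self, node_id: int) -> None:
        # Drop the lost node; re-elect only if the lost node was the leader.
        self.nodes.discard(node_id)
        if node_id == self.leader:
            self.elect_leader()


pack = Pack(nodes=set(range(PACK_SIZE)))
pack.elect_leader()   # node 0 becomes the leader
pack.lose_node(0)     # leader is lost, so the pack picks a new one
pack.lose_node(3)     # an ordinary node is lost, leader is unchanged
print("leader:", pack.leader, "survivors:", sorted(pack.nodes))
```

Because every node is identical, any survivor can take over the relay job, which is what lets the pack keep working after losses.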

As is typical with DARPA efforts, Wolfpack is strictly a research project and there is no commitment to fielding the system. Wolfpacks will participate in a number of military exercises to validate the technology. If it works as planned, the armed services will pick up the technology for fielding.

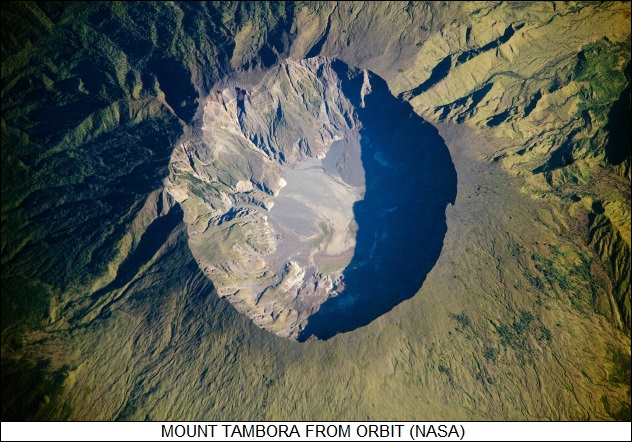

* APOCALYPSE 1815: As discussed in an article from SMITHSONIAN magazine a while back ("Blast From The Past" by Robert Evans, July 2002), in early April 1815, Mount Tambora, a volcano about 4,000 meters (over 13,000 feet) tall on the island of Sumbawa in the island chain now known as Indonesia, began to rumble loudly. Soldiers on Java, hundreds of kilometers to the north, thought there was a battle in progress nearby and sent out scouts to find out what was going on.

On 10 April 1815, Tambora blew its top, blasting up three columns of fire and a plume of smoke, gas, and ash that reached up 40 kilometers (25 miles) into the sky. Blast winds uprooted trees and pyroclastic flows -- burning floods of incandescent ash -- ran down the slopes of the mountain at more than 160 KPH (100 MPH), rolling and hissing into the sea 40 kilometers away. Huge floating rafts of pumice blocked ships in harbors. When the volcano went quiet again in mid-July 1815, Tambora had lost about 1,200 meters (4,000 feet) of its top. The explosion itself killed an estimated 10,000 people almost immediately. The volcano's vomitings killed plant life and fouled drinkable water supplies, and 80,000 more people on Sumbawa and neighboring Lombok died over the next few months.

That wasn't the end of the matter. The explosion had tossed cubic kilometers of material into the stratosphere, creating a haze of sulfuric acid droplets, dust, and ash that gradually circled around the world. The result was that 1816 became the "year without a summer". There were crop failures all around the globe, and a ghastly famine in Ireland, followed by a typhus epidemic. In the United States, there were snowfalls and freezes in July as far south as Virginia. The hardships of the harsh year led many American Easterners to decide to migrate to the West. To be sure, a cooling trend had been underway for several years before the eruption of Tambora, and the weather in the Southern Hemisphere was not particularly unusual in the years following the eruption.

The Tambora "event" was the greatest volcanic eruption in recorded human history. It has been overshadowed by the great eruption of Krakatoa, also in Indonesia, in 1883, but only because modern communications were available at the time in the form of the intercontinental telegraph. Climate researchers now find a record of the eruption of Tambora in ice cores taken on the polar icecaps that show high sulfur content in the layers for 1815 and 1816. There is also a universally famous, if oblique, reference to the disaster in popular literature. In 1816, the poet Percy Bysshe Shelley, his future wife Mary Wollstonecraft, Lord Byron, and others in their circle were vacationing on Lake Geneva. Given the dampness and gloominess of the year, the conversation drifted towards talk of gothic and horror literature. The result was Mary Shelley's FRANKENSTEIN, which says nothing about volcanic eruptions but carries its images of darkness and gloom to the present day.

* A SURVEY OF OIL (1): As discussed in a survey in THE ECONOMIST ("Oil In Troubled Waters: A Survey Of Oil" by Vijay Vaitheeswaran, 30 April 2005), in 1998 oil prices were at $10 USD a barrel; by the middle of 2005, they had cleared $60 USD a barrel. The recent surge in oil prices has of course created a lot of anxiety, since it brought back memories of the 1970s, when the "energy crisis" sent economies into nosedives, creating a bizarre situation in which growth was slow or negative while inflation soared.

Americans who can remember those years of steady price increases must feel a bit perplexed, if relieved, that it hasn't really happened this time around. Things are a bit different now. First, in modern dollars, oil peaked at $80 USD a barrel in the 1970s; $60 USD a barrel is not good but not as severe. Central banks are more skillful now at bottling up inflation without stifling growth. Furthermore, although the current social climate in the US seems indifferent to conservation, appearances are a bit deceiving: the US uses only half as much oil per unit of GDP as 30 years ago, though this is in large part due to the economic shift from manufacturing to services.

However, nobody really questions the idea that high oil prices are a dead weight on economic growth. That leads to two questions: first, how did the prices get so high? Second, what happens next?

* The rise in oil prices seems to be due to several factors. One is that OPEC likes to cut production when stocks are flush to prevent prices from going too soft. There is a trade-off in raising prices, in that the OPEC nations in general and the Saudis in particular do realize that raising economic hell in consumer nations does not do anyone any good; it would also encourage the consumers over the long term to find alternate energy sources. OPEC nations keep a keen eye on inflation, and the fact that the central banks have managed to keep a lid on rising prices has made OPEC confident enough to scale back production.

Another factor in the mix is political nervousness -- most notably over troubles in Iraq, but also Russian government interference in the operation of the Russian oil firm Yukos, and civil unrest in other producer states such as Nigeria and Venezuela. Finally, there has been a global surge in demand, and there hasn't been enough investment in the machinery to pump, produce, move, store, and deliver oil to keep pace.

There are those who think oil has now achieved a price "floor" of about $30 USD a barrel, and that it's unlikely to get cheaper than that. However, despite the interest of producer nations in getting the most from their oil, they can't completely eliminate competition, and the demand surge seems to be fading off.

* For the moment, the high prices have made the non-national "major" oil firms, like Exxon Mobil, Royal Dutch / Shell, and Chevron Texaco, stock-market darlings. Company executives trumpet their strength, but a little analysis shows this confidence to be somewhat hollow. The majors have access to a limited fraction of the world's oil reserves, and they are depleting it rapidly. Any new oil that they find will be more difficult and expensive to exploit. That challenge has led to the consolidation of the majors, with the companies pooling their assets to take on harder tasks.

The bulk of the world's oil reserves remain in the hands of national oil companies (NOCs), like ARAMCO of Saudi Arabia. In the interests of "energy security", NOCs are also becoming bigger players in hunting for oil outside their own borders, competing with the majors. This is not necessarily good news for anyone except those associated with the NOCs. Anti-globalism activists may have good reasons to be suspicious of the majors, but the NOCs aren't saints, either. Although there are some well-run NOCs, they are often clumsy state bureaucracies, and in the worst cases, Nigeria being the most visibly worst, they are corrupt, with the oil money lining the pockets of the people in charge while ordinary citizens go hungry. Oil, instead of being a benefit to the people, turns out to be a curse. Activists also find out that it is much harder to get leverage against NOCs than the majors.

The majors do retain an edge in being more efficient, energetic, and technically smarter than the NOCs, which means that if the majors can survive over the short term they may be in the lead over the longer term. The majors are now investing major cash into finding fuel in tar sands, oil shales, coal-bed methane, and other resources that most NOCs don't have the long view or skill to exploit. The majors are also big on exploiting natural gas, which used to be regarded as a nuisance that had to be flared off to get at oil, but which is now seen as valuable in itself and more environmentally benign than oil. Natural gas is technology intensive, since it has to be cooled and pressurized into a liquefied ("LNG") form for transport -- not a trivial task. The majors are in some cases collaborating with the NOCs to provide skills, such as natural gas exploitation, that the NOCs can't handle by themselves. [TO BE CONTINUED]

* REAGAN'S WAR ON TERROR (3): The bombing of the US Marine barracks in Lebanon was followed by further terrorist attacks. On 12 December 1983, a suicide truck bomber attacked the US embassy in Kuwait, killing himself and five others, and injuring more than 80 others. The attack was part of a series of bombings, with targets ranging from the French embassy to the airport control tower. The group behind the attacks was thought to be backed by Iran. Kuwaiti authorities arrested 17 people, and the liberation of the "Kuwait 17" would be the objective of a series of further terrorist attacks.

In the meantime, Westerners in Lebanon were being kidnapped as hostages. The kidnappings had begun in 1982 and would go on for a decade, with a total of 30 men eventually seized. The whole matter was a terrible nuisance for the Reagan Administration, but it took a big step up on 16 March 1984, when the replacement CIA station chief in Lebanon, William Buckley, was seized, apparently by Hezbollah militants. Snatching a high-ranking US CIA official was a major coup, and another humiliation for the Reagan Administration. Buckley would eventually die in captivity, apparently from health problems aggravated by mistreatment.

Then, on 20 September 1984, yet another truck bomb destroyed the US embassy annex in Aukar, north of Beirut, killing 24, including two US military personnel. Hezbollah was suspected again. There was no official retaliation, but William Casey, director of the CIA, apparently decided to fight fire with fire. It is believed he had lunch with Prince Bandar, the powerful Saudi ambassador to the US, and the two men talked about taking secret measures to deal with the Hezbollah. The prime target was Sheik Mohammed Hussein Fadlallah, a Shiite Muslim cleric who was the spiritual inspiration for, and probably the secret leader of, the Hezbollah. A chain of actions was set in motion that ended with the detonation of a car bomb on 8 March 1985 in a public square outside a mosque in Beirut. 80 people were killed, but its target, Sheik Fadlallah, was not harmed, since he had stayed late after the prayer service.

The exact details of this incident remain unclear. It is difficult to believe that the CIA would have authorized such an indiscriminate attack, with all its attendant bad publicity. It was apparently done by overenthusiastic Lebanese agents of the CIA who had slipped off the leash. A grim joke went around that it had to have been done by the CIA, since the attack killed everyone but the target. It doesn't appear that the people at the top of the Reagan Administration had any more knowledge of the matter than anyone else, except for Bill Casey, who played his cards very close to his chest. He would die in 1987 of a brain tumor, taking his secrets to the grave with him like a good little spook. In any case, the word came down from the top to knock off the dirty tricks.

* The Reagan Administration hadn't really found any sensible way to deal with terrorism, and the incidents continued. On 3 December 1984, Kuwait Airways Flight 221, on its way from Kuwait to Pakistan, was seized by hijackers, apparently linked to Hezbollah, and forced to land in Tehran. The hijackers demanded the release of the Kuwait 17, and when their demand wasn't met, murdered two American officials of the US Agency for International Development. On 9 December, Iranian security forces stormed the plane, freed the hostages, and arrested the hijackers. The Iranians said the hijackers would go to trial, but they were allowed to leave the country.

That was just a warmup. On 15 June 1985, TWA Flight 847, with over 150 on board, was hijacked while en route from Athens to Rome, and forced to land at Beirut Airport. Most of the passengers were Americans, including six military personnel. The theatrical crisis lasted for 17 days in full view of the world's news media.

The hijackers, believed to be associated with the Hezbollah, wanted to obtain the release of the Kuwait 17, as well as the release of 700 Shiite Muslim prisoners being held by the Israelis and the Israeli-backed South Lebanon Army. As a gesture, the hijackers released 17 women and two children, sending them down the emergency exit chutes. When the hijackers didn't get their way, they tied up a US Navy diver named Dean Stethem, beat him bloody, stood him up in the open door of the airliner, shot him in the head in full view of news cameras, and dumped the corpse onto the tarmac.

Reporters conducted an interview with the plane's captain while a hijacker stood there pressing a gun to the captain's head. The hostage crisis finally ended when Israel started freeing some of its prisoners. There never was an official "deal", and US officials said the Israelis had been planning to release the prisoners anyway. In any case, the hijackers gave up the airliner. In 1987, the Americans secretly indicted four men for the TWA 847 hijacking, including Imad Mughniyah, a senior officer of the Hezbollah. One of the four was captured in Frankfurt, and in 1989 the German courts sentenced him to life in prison. Imad Mughniyah was never captured.

* The TWA 847 incident involved a certain implied negotiation with terrorists. By the summer of 1985, the US was in fact actively negotiating with terrorists. Although the Reagan Administration repeatedly stated in public that there would be no negotiation, in practice the US was dealing with the Iranians to obtain the release of Western hostages in Lebanon. Iran, under an international arms embargo, was running out of spares and munitions to fight its protracted, bloody war with Iraq, and since the Iranian military was largely equipped with American weapons, that gave the US a lever.

Reagan was a person of conviction who didn't like saying one thing and doing another, but he felt he had a personal obligation to help rescue the hostages in Lebanon, and gave the go-ahead for the deal. The first clandestine arms shipment, a batch of a hundred antitank missiles sent by the Israelis, arrived in August 1985, and more shipments followed. Three American hostages were released up to November 1986 as a result of the deal, but three more hostages were seized in their place. However, by that time stability was beginning to return to Lebanon, and would eventually result in the release of all surviving Western hostages.

The US was also starting to become more assertive in dealing with terrorist attacks. On 7 October 1985, the Italian cruise liner ACHILLE LAURO, then off the coast of Egypt, was hijacked by four gunmen, apparently associated with the extreme Palestinian Liberation Front. The hijackers demanded the release of Palestinian prisoners in Egypt, Italy, and other countries. When their demands weren't met, they murdered a disabled 69-year-old American tourist named Leon Klinghoffer. The terrorists gave up the ship on 10 October to accept safe passage on an Egyptian airliner, as part of a deal with the Egyptians. The Americans were listening in to communications on the deal, and US Navy F-14 Tomcat fighters intercepted the airliner and forced it to land in Italy, where the four hijackers were arrested. The action was a great surprise and very popular with the American public. [TO BE CONTINUED]

* DRESS SMARTLY: As discussed in an article from a while back on SCIENCEMAG.org ("Electronic Textiles Charge Ahead" by Robert F. Service, 15 August 2003), the idea of integrating electronics into clothing may seem a bit gimmicky, and in fact to the extent that such "smart clothes" are now on the shelves, gimmickry is the selling point. It is now possible to buy a ski jacket with a built-in MP3 digital audio player, and a British company is manufacturing a foldable, touch-sensitive fabric keypad and cursor-pointing device that can be used to control a pocket computer and cell phone. There are dresses wired up with light-emitting diodes and even tablecloths that can be used to play board games.

The potential of such items is of course mixed. Some such gimmicks will catch on, others will end up in THE TRAILING EDGE or THE DULLER IMAGE catalogs. However, smart textile researchers believe that the field may soon provide significant solutions for serious problems.

The first big step forward in this direction was in 1996, when two Georgia Institute of Technology researchers, Park Sung-mee and Sundaresan Jayaraman, created fabrics with electrical connections to power and read electronic sensors to monitor a wearer's breathing, temperature, and heartbeat. The "wired shirt" can be plugged into ordinary medical monitors, and then unplugged and washed. In 2000, Georgia Tech licensed the scheme to a company named Sensatex, which is developing the "SmartShirt" for commercial sale. SmartShirts would not only be useful for medical work, they might be used by athletes in training or for keeping track of the well-being of firemen and other emergency workers.

The military, not surprisingly, is very interested in electronic textiles and is considering some seemingly far-fetched applications. One idea is to develop an acoustic sensor that could be incorporated into tents to locate enemy vehicles and the like. The tents might also incorporate solar cells to power the systems. The military is interested in less exotic applications as well, such as wearable keypads, SmartShirts for infantry, and radio antennas that are woven into a soldier's combat vest, eliminating the whip antenna that makes a radio operator such a prime target in an ambush.

The military believes that commercial applications will pave the way for military applications. Researchers at International Fashion Machines, which produces the LED-wired dress mentioned earlier, have developed fabrics that change their color patterns, laced with a grid of conductive wires and using yarns coated with dyes that change color when heated. Such schemes might also be used for military camouflage that can adjust itself to its surroundings. German chipmaker Infineon Technologies is working on carpets that contain intruder and fire detection sensors, a concept that has military applications as well.

There are of course challenges. Researchers at the US Army Natick Soldier Center in Massachusetts point out one of the most obvious problems: wear and tear. Clothing has to be more or less flexible, and that's hard on wires and electrical connections. Some researchers favor wiring with multiple strands to reduce breakage, while others are looking at flexible conductive polymers, though these have the drawback that they can carry less current than metals. Connections are another problem. Clothing for combat or outdoor sports will get wet and muddy, and conventional connectors won't tolerate that sort of treatment. Natick researchers have developed a plastic buckle that not only hooks up straps, it hooks up electrical connections.

Power is a really serious issue. Carrying around a battery pack is a nuisance. Some battery manufacturers are developing lithium batteries based on layers of foils, with such batteries incorporated into the lining of a jacket. Other companies are going further, developing batteries in the form of threads with concentric layers that can be woven into textiles.

Researchers are optimistic about the technical possibilities of smart fabrics, but for now the technological pieces are being developed on a custom basis, or for limited production. What smart clothing advocates are looking for right now is a "killer application" that will put the technology on the map, leading to the manufacture of standardized components in volumes. Once all the components can be selected from catalogs, the field is likely to explode, and there will come a day when people will take smart garments for granted.

* FARMING FOR FISH: As discussed in an article from THE ECONOMIST ("The Promise Of A Blue Revolution", 9 August 2003), it has been said that the world's fisheries are the last domain of the hunter-gatherer. On land, all significant food production has been taken over by the garden, the farm, the ranch. At sea, people are still hunting for their meals, with such improved technology that environmentalists fear the mass extermination of many fish populations. At the same time, people are eating more fish than ever. Prices for seafood have risen in general over the past few decades, while prices for land-based animal products have fallen.

The solution to this quandary would seem obvious: raise fish in farms instead of going to sea to catch them. In fact, fish farming is booming. Production volumes have been growing at an average rate of 10% a year since 1990, with a production of 36 million tonnes of fish and shellfish in 2000, with China the world leader. Half the seafood now eaten by Americans is farmed. The dark side of this shiny picture, critics charge, is that fish farming is an environmental disaster and its products are not healthy to eat.
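To get a feel for what 10% annual growth means, the quoted figures can be run backwards. The short Python sketch below assumes steady compound growth over the whole decade, which is a simplification since the article only gives an average rate; the function and variable names are invented for the example.

```python
import math


def back_project(final_volume, annual_growth, years):
    """Volume implied 'years' earlier, assuming steady compound growth."""
    return final_volume / (1.0 + annual_growth) ** years


def doubling_time(annual_growth):
    """Years needed to double at a steady compound growth rate."""
    return math.log(2.0) / math.log(1.0 + annual_growth)


PRODUCTION_2000_MT = 36.0   # million tonnes in 2000, from the article
GROWTH_RATE = 0.10          # average 10% a year, from the article

implied_1990 = back_project(PRODUCTION_2000_MT, GROWTH_RATE, 10)
years_to_double = doubling_time(GROWTH_RATE)

print(f"implied 1990 production: {implied_1990:.1f} million tonnes")
print(f"doubling time: {years_to_double:.1f} years")
```

Under that assumption, production would have been a little under 14 million tonnes in 1990, with output doubling roughly every seven years.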

* Advocates of fish farming point out that modern mass aquaculture is in its infancy and there is much room for solution of such problems as exist. In addition, there is nothing absolutely new about aquaculture; China's had it for thousands of years -- all that is required is a pond, stocked with species of fish like carp that can put up with fairly brackish water and live happily on scraps of rotten vegetables and fruits. Nobody objects to such schemes, as these fish provide a useful nutritional supplement in poor rural areas and provide a valuable resource to developing nations.

However, modern industrial aquaculture is, forgive the expression, a different kettle of fish. It began about three decades ago with the cultivation of salmon, and then went on to sea bass, flounder, halibut, sole, hake, haddock and sea bream. These species are much harder to domesticate than carp. Raising them demands a thorough knowledge of their lifecycles and careful attention to their living conditions, involving such factors as stocking densities, water quality, breeding conditions, health monitoring and maintenance, and nutrition. Domesticating a fish species takes years of work and a great deal of research. There is substantial interest in farming cod in Northern Europe, for example, but it is a tricky fish to domesticate, since unlike many other fish, cod fry don't hatch with any reserves of nutrients and have to be cared for from the start.

Of course, simply creating an environment where captive fish can thrive is only half the challenge. The other half is truly domesticating the fish to tailor them to desired specifications, such as increased growth rates and fertility; more efficient conversion of food into body meat; resistance to disease; and tolerance of cold or poor-quality water. Selective breeding of tilapia, a freshwater herbivorous fish popular in the US, has resulted in a domesticated strain that grows 60% faster and is hardier than its wild cousins. There is considerable interest in genetically modified (GM) fish, but this work is in its infancy and nobody is farming GM fish just yet.

Fish farming has a double commercial impact. Not only does it increase supplies of fish, it also allows fish to be supplied to consumers on a consistent basis, which has had a great positive effect on consumer demand. Fish farming, then, has a strong appeal to the consumer, but it is also an irritant to activists. Fish are usually farmed in pens connected to large open bodies of water, and waste from fish farms, such as body wastes, dead fish, and uneaten food, can pollute the sea. Shrimp farming has a particularly bad reputation for destroying wetlands and mangrove swamps.

There are concerns that overdosages of antibiotics can have subtle long-term health effects, not only on the fish but on the people who eat them. Diseases may travel rapidly through the confines of fish pens, and fish often escape due to storms and other accidents, where they can infect wild fish stocks. In addition, if the fish that have escaped have been selectively bred or, eventually, genetically modified, they may overwhelm wild populations or dilute their genetics with domesticated genes, with unpredictable effects.

The bad environmental reputation of fish farming does seem to have a basis in fact, but steps can be, and in many places have been, taken to improve matters. Fish farmers have been developing new feed formulas that are more digestible and produce less waste, and have been trying to emphasize vaccines over expensive and troublesome antibiotics. Work is under way to breed more environmentally-friendly fish. Finally, in much the same way that an experienced aquarium keeper knows how to balance different species to keep the aquarium bubbling along smoothly, different species can be raised at fish farms to keep things clean, for example using tilapia to tidy up after shrimp.

To the extent that the critics acknowledge that better management can make fish farming more environmentally friendly, they point out that the whole concept still has a fatal underlying flaw. To raise carnivorous fish like salmon requires fish feed based on fishmeal, and the fishmeal is obtained by catching "industrial" fish from the wild. These are fish that would otherwise not be regarded as commercially useful, and so, the critics conclude, fish farming is not slowing down the depletion of wild fish stocks -- it's accelerating it by literally expanding the dragnet. This depletion also has the effect of taking away fish regarded as valuable in undeveloped countries, where people are hungrier and less fussy about what they eat, to help provide high-value fish steaks for the tables of consumers in wealthier countries.

In fact, the catch of industrial fish has been stable for decades. The reason is that fishmeal used to be included in animal feed, but that use has been steadily cut back, while use by fish farms has increased. This is only relatively good news, since the catch is still substantial, and all other things being equal an increase in aquaculture will result in a greater need for fishmeal. Advocates point out that all other things are not necessarily equal, and that the fishmeal content of fish food has been more than cut in half since 1972. It is now only about 30% of the content. Other fishmeal substitutes, based on soya, rapeseed oil, corn gluten, and yeasts, are under investigation. Furthermore, more fishmeal could be produced by further exploitation of "bycatch", the incidental but substantial unwanted fish picked up by the nets of marine fisheries.

Advocates also point out that farming and ranching back on land have environmental impacts as well, and nobody fundamentally challenges such activities. Farming and ranching are, however, subject to regulation, and few would claim that fish farming should be exempt from regulation. Governments can provide oversight for the industry, and in fact they can do a better job of it than they can for marine fisheries, which do their business out at sea. Few like having to submit to regulations, but ironically they may be the key to wider success for fish farming.

* CELLPHONES FOR THE DEVELOPING WORLD: As discussed in an article in THE ECONOMIST ("Calling An End To Poverty", 9 July 2005), cellphone technology has become universal in developed countries, and in the developing world as well: few are too startled today to see a picture of an African shepherd chatting away on his cellphone. Cellphones, it turns out, are in many ways a more appropriate technology for poor countries than a traditional telephone system based on landlines, since it is much easier and cheaper to build the infrastructure.

The use of cellphones in third-world countries illustrates how critical communication is for society. Fishermen and farmers can get a better idea of common prices before trying to sell their product; villagers can figure out where to go to find work; help can be called when emergencies strike. An entire village can share a cellphone, using prepaid calling schemes.

However, although 80% of the world's population is within the reach of cellphone towers, only about 5% of the people in India or sub-Saharan Africa own cellphones. An official with a Middle Eastern / African cellphone company asserts that usage among the poor, who nominally make about a dollar a day, would double if handsets came down from their current cheapest price of about $60 USD (before sales plans) to half that. Manufacturers of course see a better margin in selling fancy phones to rich nations for about $200 USD apiece, but as sales in rich nations have saturated, third-world markets are becoming more attractive -- the margins are relatively small, but the potential sales volume is very big.

Most mobile phone systems around the world, including those of poor countries, are based on the GSM standard, and in early 2005 a group of mobile phone operators from developing countries in the GSM Association put out a request for bids on a contract for 6 million phones that could be sold for less than $40 USD each. Motorola won the contract, with deliveries beginning in the early spring of 2005. Motorola officials insist this isn't a charity deal in any way, one saying: "We do make a margin -- a much smaller margin, but still a margin." The GSMA operators' group is now negotiating for more handsets, with initial deliveries to begin in early 2006. The group's motivation in the deal was not only to come up with a product that could improve sales, but also to point out to big cellphone technology vendors that the developing world was worth their time and effort.

Motorola's cellphone is not only cheap, it was designed to fit the needs of the customer. It has a long battery life and is rugged, and has localizations for particular end-users. For example, cellphones sold in Africa include a football (soccer) game, while those sold in India include a cricket game. Low cost did not mean dispensing with all bells and whistles; indeed, since poor folk cannot afford such fripperies as digital music players, digital cameras, electronic games, and portable PCs, providing such features on a cellphone, even in a limited fashion, provides a great increase in value. Style is also a concern: in a society where people own so very little, ownership of a cellphone can be a major status symbol, and it should play the part appropriately.

Motorola's phone is only a start. In the summer of 2005, the Dutch electronics giant Philips announced a chipset that would cut the price of a cellphone to about $20 USD. Competition will drive the vendors to offer the most functionality at the lowest price.

As the price of phones falls, however, it raises the visibility of one of the major obstacles to wider use of cellphones in developing countries: governments, more specifically government taxes. With cheaper handsets, the tariffs and taxes that are slapped on phones start to approach a high percentage of the actual price of the phone itself. Says a World Bank economist: "It does seem strange for countries to say that telephone access is a public-policy goal, and then put special or punitive taxes on telecoms operators and users. It's a case of sin taxes on a blessed product."

In many sub-Saharan African countries, operators don't provide phones, since customers find it much cheaper to obtain them on the black market instead of being forced to hand over money to the government. The GSMA is conducting a 50-nation study that shows how cellphone use increases the net wealth of a country, making happier citizens with cellphones and more overall government tax revenues. Some nations are beginning to see that inhibiting cellphone use is counterproductive: India cut its import duty on cellphones to a minimal 5% in 2004 and plans to cut it to zero. Other nations may well follow.

* THE CURTA CALCULATOR (2): Curt Herzstark's work on his mechanical pocket calculator was interrupted when Adolf Hitler annexed Austria in 1938. Herzstark's father had been Jewish and that didn't put him in good graces with the occupying power. He was, however, allowed to continue to run the family factory, which was ordered to build gauges for Nazi tanks.

In 1943, circumstances finally led Herzstark to be arrested. He was thrown into prison, then shipped off to the Buchenwald concentration camp. It was a hell on earth, where the prisoners were brutally abused and executed on minor pretexts, but he was able to use his engineering expertise to make himself useful in a slave-labor factory run as a satellite to the camp. After surviving this nightmare for some time, Herzstark ran into Franz Walther, designer and manufacturer of the famous Walther PP, PPK, and P-38 automatic pistols, who was making use of the slave labor system.

Walther had also produced calculating machines for a time, and so he knew the Herzstark family. Walther told the SS officers running the camp that Herzstark was a real prize. When they learned Herzstark was working on a pocket-sized calculator, they suggested that he might find circumstances more favorable for him if he managed to get the thing to work. They didn't adjust Herzstark's normal workload, but he spent every spare moment he could scrape up working on plans for the gadget.

The plans were effectively done when American troops liberated Buchenwald on 11 April 1945. Herzstark went to Weimar in Germany and found a shop where machinists managed to cut the parts for three prototypes of his hand calculator. Then the Red Army arrived and Herzstark decided prudently to go west, breaking down the prototypes into parts so they wouldn't attract attention. He made his way to Vienna, where he found the family factory in ruins. Attempts to interest others in production of his pocket calculator went nowhere: Europe was a wreck and people had more important priorities to worry about. He finally chatted with the prince of Liechtenstein, the little country nestled in the Alps. The country had been spared the destruction of war, and the prince was interested in establishing industry there. The pocket calculator seemed to fit the bill. The first Curta pocket calculator hit the streets in 1948.

* The Curta had about 600 parts. A detailed discussion of its operation would be difficult, but the core was a stack of 37 plates, called the "step drum", which had a carrying system called a "tens bell" on top. The drum assembly was ringed by shafts that linked to the output digit indicators on top through pinion gears. Another ring of shafts was arranged around that, linked to the input sliders. Moving an input slider up and down spun a digit indicator on top of the input shaft around, and also moved an arm up the output shaft.

Numeric values were added by spinning the step drum around, interfacing with the output shafts through gears, with the tens bell working through gears on the output shafts to handle carries. The step drum was designed so that when it was yanked up by pulling up the crank, it would automatically provide the nine's complement of a numeric value, allowing subtraction to be performed by addition.
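
As a rough illustration of why a nine's complement is handy, the sketch below shows subtraction carried out using nothing but addition on a fixed-width decimal register. It is only an arithmetic sketch, not a model of the Curta's gearing: the function names and the six-digit register width are assumptions made for the example, and the way the real machine supplies the extra "plus one" is not covered here.

```python
def nines_complement(value: int, digits: int) -> int:
    """Replace every decimal digit d of value with 9 - d, at a fixed width."""
    if not 0 <= value < 10 ** digits:
        raise ValueError("value does not fit in the register")
    # A register of all nines minus the value flips each digit to 9 - d.
    return (10 ** digits - 1) - value


def subtract_by_adding(register: int, value: int, digits: int) -> int:
    """Subtract value from register using only addition (hypothetical helper)."""
    modulus = 10 ** digits
    # Nine's complement plus one equals the ten's complement of the value.
    total = register + nines_complement(value, digits) + 1
    # The carry out of the top digit falls off the end of the register.
    return total % modulus


if __name__ == "__main__":
    print(nines_complement(123, 6))         # 999876
    print(subtract_by_adding(500, 123, 6))  # 377
```

Adding 999876 and then 1 to a register holding 500 gives 1000377; the leading 1 does not fit in six digits, leaving 377, which is 500 minus 123.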

The Curta was a marvel of design and engineering, but it would never be more than a curiosity as far as the general public was concerned. It sold through mail order and specialty stores. Herzstark never got rich off of it. The company management even tried to sidetrack him, but they had also not bothered to acquire the patent rights, since they feared patent infringement litigation and wanted him to bear the costs of any court cases. Since the company didn't own the patents, Herzstark was able to come to terms.

The Curta remained in production into the early 1970s, with Herzstark increasing its numeric resolution to 11 input digits and 15 output digits. Curta users were very pleased with the machine, which was beautifully built and provided a capability not available in any other form at the time. Rallye racers were particular fans, since they could perform calculations by feel, rarely taking their eyes off the road.

About 150,000 Curtas were built in all. Of course, the invention of the modern electronic pocket calculator doomed the Curta. Herzstark died in 1988 in relative obscurity. His fascinating little gadget still remains a triumph not merely of ingenuity but of a person's will to survive under the worst circumstances. [END OF SERIES]

* REAGAN'S WAR ON TERROR (2): While the Reagan Administration was dealing with Libya's Qaddafi, at the same time the US was becoming involved in a tangled chain of events in the Middle East that began with Israel's invasion of Lebanon on 6 June 1982. Entire villages of Shiite Muslims were wiped out in the fighting. Reagan felt that American military power could be used to limit the damage, and on 6 July he publicly agreed in principle to send troops to keep order. A contingent of US Marines arrived in Lebanon on 25 August. The Marines were simply there on a short-term basis to ensure that Palestine Liberation Organization (PLO) fighters could withdraw without being massacred. The families of the PLO fighters were left behind in Beirut, where the Americans believed they would be safe. However, once the Marines withdrew, in mid-September 1982 Christian Falangist forces entered the Sabra and Shatila refugee camps and murdered about 800 unarmed women and children.

Reagan was a person who based his decisions on heartfelt emotion more than cold calculation. He was deeply shocked by the massacre and ordered the Marines to return to Beirut. 1,800 of them set up camp at the Beirut Airport, between the warring parties. Not everybody in the Reagan Administration was happy with this arrangement. Defense Secretary Caspar Weinberger, publicly regarded as an ultra-hawk, was nervous about putting the Marines in a vulnerable position in such a dangerous environment. Weinberger's chief military adviser, Lieutenant General Colin Powell, was uneasy about the "ready fire aim" approach to the action. Powell was a military professional and wanted to know what the objectives were; what the hazards were; what measures should be planned to ensure that American goals were achieved. However, Secretary of State George Shultz felt that the US needed to be assertive, and the president agreed.

* Powell had good reason to be apprehensive, since the Marines were now stuck right in the middle of a violent factional feud. One of the most dangerous of the factions at war was the "Hezbollah", a militant Islamic group that took inspiration from the Iranian revolution and support from the Iranian government. Hezbollah fighters were particularly dangerous because they were willing to commit suicide to achieve their ends.

On 18 April 1983, a Hezbollah operative drove a pickup truck loaded with explosives into the US Embassy in Beirut and blew it up. The blast killed 63 people, including 17 Americans. Eight of the dead Americans were US Central Intelligence Agency (CIA) personnel, including station chief Kenneth Haas and the agency's chief Mideast analyst, Robert C. Ames. The successful attack not only humiliated the US, it effectively decapitated US intelligence activities in Lebanon for the time being, leaving the Americans in the dark about what was going on there. Some factions in the US government wanted to retaliate, but the problem was targeting the guilty parties.

Since there were no real intelligence assets on the ground in Lebanon, a special team was put together to go secretly to Beirut and finger who was responsible. However, the first thing the team realized when they got there was that the Marines were dangerously exposed to a terrorist attack. The Marines were already taking casualties, and there was little to protect them from a bombing similar to that used to wipe out the embassy. Despite the warning, the Marines stayed where they were. On 23 October 1983, another truck bomber drove into the Marine barracks in Beirut, detonating tonnes of explosives. The blast killed 241 American servicemen, most of them Marines, and injured another 100. Nobody was sure who had performed the bombing, but Hezbollah was the prime suspect.

Hezbollah was believed to be receiving training and support from Iranian operatives who had an encampment in Lebanon's Bekaa Valley. An airstrike was planned to hit that encampment, but it was called off at the last moment by Defense Secretary Weinberger. Nobody in the Reagan Administration could agree on a plan that made any sense. As a gesture, Reagan ordered the battleship USS NEW JERSEY to shell the hills near Beirut, but the bombardment did little damage.

* The US continued to be embarrassed in Lebanon. On 3 December, Syrian antiaircraft guns fired on US Navy F-14 Tomcats, and so the next day, 4 December, an airstrike was conducted from two Navy carriers. The strike was a fiasco, with two US aircraft shot down and one pilot taken prisoner. As far as the Marines went, the US finally cut its losses, withdrawing them in early 1984. There were those in the Reagan Administration who felt that the US had suffered a major humiliation in Lebanon, since the US had demonstrated it would cave in quickly to terrorism, and there is no doubt that terrorist groups learned that lesson. The facts remain that the Americans had few clear targets to retaliate against, and that the Marines shouldn't have been set up in such a vulnerable position to begin with.

However, the bombing of the Marine barracks did not seriously damage the credibility of the Reagan Administration. Two days after the bombing, on 25 October 1983, Reagan sent US forces to the Caribbean island of Grenada to evict Cuban military advisers and perform a rescue mission for American students stranded there by a revolution. The intervention was performed in haste and marked by major botches, and afterwards there would be doubts that it had been really called for to begin with. Politically, it was still a great triumph for the Reagan Administration. The US had demonstrated it was willing to use its power, and most of the American public found it felt good.

In hindsight, it still illustrated the "ready fire aim" flavor of many of the Reagan Administration's military ventures: an inclination to resort to military force, sometimes without a clear target, with ambiguous practical results. The irony was that these actions still often proved effective in terms of domestic and, on occasion, international politics. Reagan proved repeatedly that he could take long chances and pull them off, embarrassing his critics. The fact that long-term issues were not always addressed in a sensible or consistent fashion didn't really become obvious until later. The confusion of the Reagan Administration over how to deal with terrorism continued. [TO BE CONTINUED]

* WINDMILLS ON THE PRAIRIE: As discussed in an article in INVENTION & TECHNOLOGY magazine from some years back ("Reaping The Wind" by Bill George, Winter 1993), windmills tend to have a futuristic "green" fashionableness to them, but of course they were an old technology in the 17th Century, when Cervantes had his Don Quixote taking them on. They were supposedly invented in Afghanistan a thousand years earlier, with the concept then eventually migrating to Europe.