* 23 entries including: food & farming infrastructure, a history of PET, enduring skyscrapers, potential resurrection of bacteriophage therapy, an Arctic seed repository, Indian call centers, corporate nurses, 747 fire tanker, Pluto demoted, sleazy adware companies, inside-out surgery, electronic ICUs, pachislots, war in Lebanon, nano-rolls, reformed Veterans Administration, intestinal bacteria, web-based microfinancing, and greenery in China.

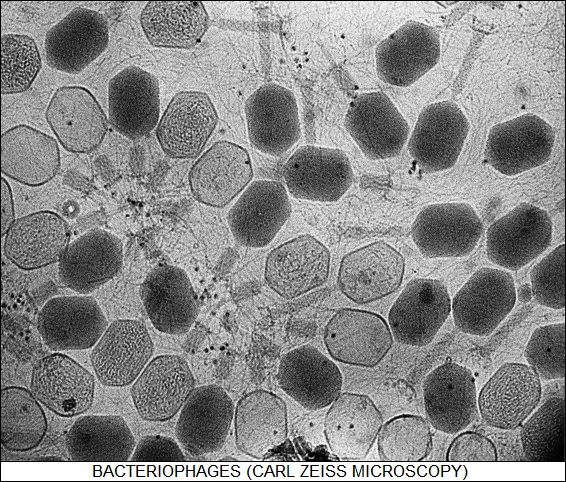

* RETURN OF THE PHAGE? As discussed in an article from a few years back in AAAS SCIENCE ("Stalin's Forgotten Cure" by Richard Stone, 25 October 2002), the discovery of viruses that infect bacteria, or "bacteriophages", late in the First World War led some researchers to believe that they had found a "magic bullet" that could selectively attack bacterial infections and wipe them out, without side effects on patients. It didn't work out that way. Research into "phage therapy" meandered back and forth in the interwar years, then all but disappeared in the West after the Second World War, when miraculous new antibiotics like penicillin became common.

Now antibiotics are running up against new strains of pathogens that shrug them off, and Western researchers are getting interested in phage therapy again. In fact, phage therapy remains alive and well in the former Eastern Bloc, which never gave up on it in the first place.

In December 2001, three woodsmen were wandering through the mountains of the former Soviet state of Georgia when they found two canisters that were strangely warm to the touch. The night was cold, and they used the canisters to keep themselves warm. As it turned out, the canisters contained radioactive isotopes, having once been used in power generators for remote installations, and two of the men were hideously burned. The two were rushed to a hospital in Tbilisi, the capital of Georgia. They came down with a staphylococcus infection that resisted antibiotics and seemed headed for lethal septic shock, but the doctors were able to use a new product, phage-impregnated patches sold under the trade name "PhageBioDerm", that got the infection under control and saved their lives.

The phage patches were the product of the Eliava Institute in Tbilisi, which had kept phage therapy alive in the East for decades. Now work on phage therapy is reviving in the West, with dozens of startup companies trying to introduce phage-based products. Exponential Biotherapies Inc. (EBI) of Port Washington, New York, is now conducting clinical trials of phages designed to treat vancomycin-resistant enterococci (VRE).

Nobody expects phage therapy to be an instant success in the West. Few think it will replace conventional drugs, and the US Food & Drug Administration (FDA) is still considering regulatory mechanisms for phage products. One particularly sticky question is whether phage products will have to be completely re-qualified if the phage strains are modified to deal with bacteria that have learned how to resist phages. Some believe that phage products will not initially be used to treat humans; more likely early targets will be livestock -- where pressure is mounting against mass use of antibiotics, since the practice breeds antibiotic resistance -- and pathogens such as salmonella that can contaminate foods and cause food poisoning.

* Phages are everywhere, with millions in a drop of seawater or sewage, and they carpet our skin and our digestive tracts. The first clue to the existence of phages was provided by a British chemist named E.H. Hankin, who reported in 1896 that water taken from the Ganges and Jumna rivers could kill the cholera pathogen.

There matters stood until World War I. In 1915, British bacteriologist Frederick W. Twort reported the existence of a mysterious virus that killed bacteria in solution. Two years later, in 1917, the well-known biologist Felix d'Herelle of the Pasteur Institute in Paris reported that he had identified a microbe from the feces of patients with dysentery that could destroy the Shigella bacterium, the cause of the disease. D'Herelle and his wife called the microbe a "bacteriophage". While the nature of viruses wasn't really understood at the time, that didn't prevent d'Herelle from trying to exploit his discovery. In 1919, he and his colleagues gave a 12-year-old boy suffering from severe dysentery a broth of bacteriophages, after the researchers had drunk large doses of it themselves to ensure that it had no side effects. The boy recovered.

D'Herelle worked very hard to promote phage therapy, and even pharmaceutical giants like Eli Lilly became enthusiastic. However, in 1934 the American Medical Association published a critique of phage therapy that described it as ineffective or even dangerous. Modern phage researchers believe that the concept behind phage therapy was sound, and bacteriophages in themselves are certainly harmless to humans; the failure of phage research seems to have been due to sloppy work, with researchers administering strains that hadn't been adequately checked for effectiveness and failing to purify toxins and pathogens out of test preparations.

That was the beginning of the end of phage therapy in the West, but it was still going strong in the USSR. In 1923, Giorgi Eliava returned to Georgia after five years of work with d'Herelle and managed to persuade the powers that be to allow him to set up an organization in Tbilisi to work on phage therapy. That organization became the Eliava Institute. D'Herelle was impressed by Eliava's work, and in 1933 d'Herelle left his post at Yale University to work at the Eliava Institute. D'Herelle left a few years later, after Eliava was arrested during Stalin's purges and executed.

* Despite these setbacks, the institute survived; in fact, it prospered. In the 1940s, the institute developed phages to treat infections by anaerobic bacteria, such as those that cause gangrene. The Soviets found phages effective and much cheaper than antibiotics, and the Soviet military was an enthusiastic user. The institute got whatever it needed, and phage production centers were set up both in other regions of the Soviet Union and in Eastern Europe.

Interest in phages didn't completely die out in the West, but for decades they were seen more as ideal test subjects for genetic studies, being simple in arrangement and elegant in operation. Researchers tinkering with these viruses did wonder why phage therapy had gone so poorly, some suggesting that the host's antibodies neutralized them. In the 1970s, experiments on mice by Carl Merrill and his colleagues at the US National Institutes of Health showed that phages injected into the test subjects were cleared out by the spleen even before antibodies could be produced. The group didn't give up their studies, believing that there might be strains of phages that could evade being cleared out and persist long enough to target bacterial pathogens. They found such strains, which proved effective in saving mice from otherwise lethal infections. A report published by the group in the scientific press in 1996 began the revival of phage therapy in the West, since by that time medical researchers were increasingly desperate to find tools to deal with antibiotic-resistant pathogens.

Despite all the genetic studies performed on phages, there are still major gaps in our understanding of how they actually operate. Phages fall into two broad classes: "lytic" phages, which infect a bacterium, replicate wildly, and then break out and destroy their host; and "temperate" phages, which integrate their genomes into that of the host bacterium and quietly "hibernate" through generations of bacteria, even blocking infections by other phages.

Lytic phages are ideal for phage therapy, since they completely destroy their hosts. Temperate phages are not only much less destructive to bacteria, but they also have some worrying features. When a temperate phage starts replicating again, it can carry chunks of the bacterial genome with it. When the progeny phages infect other bacteria, they can splice these chunks of the old bacterium into their new hosts. These genes may improve the resistance of the new bacteria to antibiotics and phages, or may give the bacteria new genes for the production of toxins. That is why phage therapy requires lytic phages. Complicating this issue is the fact that nobody is sure that lytic phages cannot become temperate phages.

Another issue is phage resistance. Bacteria do develop resistance to phages, though it seems to happen more slowly than resistance to antibiotics. Since that resistance arises gradually, phage advocates say that it is easy to modify phages to track such a moving target, simply by cultivating phages in a culture of the resistant bacteria and selecting the phages that are still effective.

This does raise the sticky issue of whether the new phage strain needs to be completely requalified, at great time and expense. Companies involved in phage therapy are promoting a scheme where a "master" strain is given full qualification, while new strains derived from that master strain will only require streamlined qualification. Some researchers are taking a middle path between antibiotic therapy and phage therapy. They are investigating phages to see if their mechanisms lead to useful antibiotics, or are using phages to deliver antibiotics.

* The staff of the Eliava Institute in Georgia must be pleased with the resurgence of phage therapy in the West, but that pleasure has to be tempered by the fact that the revival was driven entirely by Western studies. The work of the Eliava Institute and other organizations working on phage therapy in Eastern Europe has been largely ignored. Worse, organizations such as the FDA have refused to consider Eastern data as part of the approval process, though some officials at Western startups say that some of the studies performed in the East can easily stand up to critical inspection.

The FDA is still trying to figure out how to approach phage therapy. EBI's VRE phage is leading the way, and the phage therapy startups are nervously waiting for the FDA to make its judgment in the case. The big pharmaceutical companies are also keeping an eye on the proceedings, and if EBI is successful, that will encourage "big pharma" to get back into the field.

Another issue that phage therapy advocates have to address is that of public reaction. Many patients may not be happy with the idea of being administered a virus to treat a bacterial infection. Officials at some startups try to avoid the word "virus" and emphasize the word "phage" instead, describing it as a "natural delivery system". In other words, they're playing the "green" angle. Other researchers find such word games unconstructive, or at least irritating, and suggest that it would be better to educate the public that phages are everywhere, both in the outside world and inside the body.

Phage therapy advocates say the devil of antibiotic resistance greatly increases their own chances for success. One points out that a half-century of fighting bacteria with antibiotics demonstrates that the enemy will never be wiped out, but that "at least you can try to shift the ecological balance in our favor."

* SEEDS ON ICE: There is a conflict in human planning between thinking over the short term and thinking over the long term, with the pressures of the short term likely to win out much of the time. According to an article in THE ECONOMIST ("Seeds Of Hope", 24 June 2006), the Norwegian government, working with a non-governmental organization named the Global Crop Diversity Fund (GCDF), is backing up its commitment to long-term planning by spending $3 million USD to set up a seed bank on the island of Svalbard, well north of the Arctic Circle.

The GCDF estimates that there are about 1,400 seed banks for crops scattered around the globe. The organization wanted to create a central master seed bank that could survive for centuries or millennia even if it were abandoned. The Svalbard International Seed Vault will store its legions of seeds in an underground bunker buried 70 meters (230 feet) deep, protected by meter-thick concrete walls and a massive door. The high-north location will ensure that the vault stays frozen for at least a century, and will also protect the seeds against any disease that wipes out the corresponding crops in the places where they are grown.

Not all crops can be preserved as seeds, however. Bananas, estimated to be the world's fourth most important food crop, are propagated by cuttings that can't be stored indefinitely. Researchers are trying to figure out some way of preserving such crops.

* CALL SOMEWHERE ELSE: The vision of an online support operation in India has become something of a modern stereotype, but according to a BUSINESS WEEK article ("Call Center? That's So 2004" by Manjeet Kripalani, 7 August 2006), it's somewhat out of date. While customers using Indian call centers are not always happy with the service, neither are the Indians running the centers: the work is aggravating, and more to the point it doesn't pay well, since client companies do everything they can to squeeze down the support money they pay out.

The Indian business process outsourcing industry is now getting out of the call center game. In 2000, 85% of the industry's business was call center work, but now it's down to 35% and continuing to shrink. The companies are now focusing on higher-value activities, such as processing mortgages, handling insurance claims, overseeing payrolls, and so on. The work is more pleasant, personnel turnover is much lower, and best of all it pays much better, both for companies and employees. The call center business is now starting to migrate elsewhere, such as Eastern Europe, or even back to the home countries where the customers are.

[ED: The bit about unhappy experiences with call centers rings a bell to me. I spent a good portion of my life in online support, and though I have had some problems with online help folk, I am generally very patient with them. This is because I know when I'm getting poor support, it's not the person on the other end of the line who's usually the problem. The real difficulty is rooted in company managers whose determination to cut costs gets so blinkered and blindered that they under-invest in support, resulting in a service whose sole effect is to antagonize customers. As the saying goes: You can do something or you can do nothing -- but doing only enough to botch the job is just plain stupid.]

* THE CORPORATE NURSE PITCH: The big pharmaceutical companies, or "Big Pharma", get their fair share of public abuse, being accused of such actions as overselling drugs and overprotecting their rights to critical drugs. However, as a BUSINESS WEEK article discusses ("Big Pharma's Nurse Will See You Now" by Michael Arndt, 12 June 2006), they have taken some actions that can be seen, at least in part, as praiseworthy.

For example, take Dr. Victor Rivera. When he diagnoses a patient with multiple sclerosis, he prescribes a drug named Rebif -- co-marketed by two Big Pharma companies, Serono and Pfizer -- and then sets up an appointment with Alecia Parks, a registered nurse, who instructs the patient on the proper use of the drug. Parks keeps in touch with her patients, ensuring that they stay on the drug regimen. The interesting thing is that Parks actually works for Serono and Pfizer, operating through an intermediary company named MS Lifelines. The service is free to both the patient and the doctor. Eli Lilly similarly has nurses on the payroll to help diabetics, and Hoffmann-La Roche does the same to help HIV patients.

It all seems like too good a deal to be true, but as far as Big Pharma is concerned it's a case of enlightened self-interest. The companies already have large sales forces, but doctors have little time to talk to sales reps, so the companies have had to come up with better ways to pitch their products to the doctors. The Big Pharma nurses are a help to the doctors, who want patients to stay on their drug regimens. Big Pharma also wants patients to stay on their drug regimens, since that means much more consistent sales of drugs, and also patients who keep on living to buy more drugs.

Some might call that cynical, and certainly the nurses are agents for their companies, promoting the drugs they are helping administer; Big Pharma's nurses are also paid more than most hospital nurses, leading some to fear a drain of nurses away from hospitals. However, the nurses do not conceal their affiliations, and they have absolutely no authority to write prescriptions or make diagnoses. The reality is that both doctors and patients appreciate the scheme, with some patients claiming that the nurses provide the most attentive care they receive.

* ED: I was amused by the picture with the article since it had a pretty young blonde nurse who was simply but stylishly dressed in a black pantsuit pitching a product to a patient. I had to think: "This would make medical treatment something to look forward to." Alas, the shot was obviously posed and I had little doubt the woman was a model. Reminds me of the story comedian Rodney Dangerfield told of the time he went to shoot a TV commercial on a beach, and commented on how good-looking the crowd around him was. The reply was: "Idiot! They're all actors!"

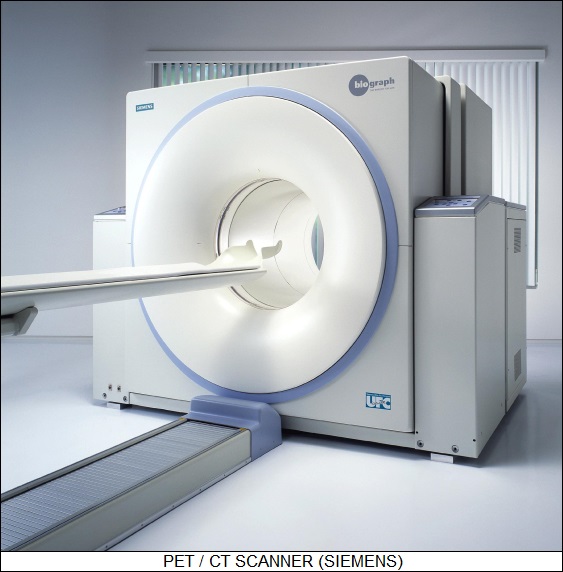

* A HISTORY OF PET (2): Although CTI scored a coup by buying up PET technology from EG&G ORTEC, a fortune wasn't simply going to fall into the owners' laps. Just having a whizzy new imaging device wasn't enough to make PET take off; other elements of the system had to come into place. One was to use radioactive fluorine-18 as a component of a sugar named "fluorodeoxyglucose (FDG)" and then use the sugar as a tracer. FDG was a very useful tracer, because fluorine-18 has a relatively long half-life -- about 109 minutes, more than five times that of carbon-11 -- making it easier to obtain and handle. Even more usefully, cells absorb glucose at a rate roughly proportional to their metabolic activity, and cancer cells are generally very active. A PET scan of a patient dosed with radioactive FDG would provide a map of cancer hot spots in the patient's body.
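Why a roughly 109-minute half-life matters so much becomes clearer with a little decay arithmetic. The sketch below is only an illustration, assuming simple exponential decay and standard reference half-lives (about 109.8 minutes for fluorine-18 and about 20.4 minutes for carbon-11); the helper name `fraction_remaining` and the two-hour delay between synthesis and injection are hypothetical choices for the example, not figures from the PET story.

```python
# Half-lives in minutes: standard reference values for fluorine-18 and carbon-11.
HALF_LIFE_MIN = {"F-18": 109.8, "C-11": 20.4}


def fraction_remaining(isotope: str, minutes: float) -> float:
    """Hypothetical helper: fraction of the original activity left after
    `minutes`, using exponential decay A(t)/A0 = 2 ** (-t / t_half)."""
    return 2.0 ** (-minutes / HALF_LIFE_MIN[isotope])


if __name__ == "__main__":
    # Hypothetical delay between making a dose and injecting it,
    # e.g. shipping it from a regional production site to a hospital.
    delay = 120.0
    for iso in HALF_LIFE_MIN:
        print(f"{iso}: {fraction_remaining(iso, delay):.1%} left after {delay:.0f} min")
```

After two hours, a fluorine-18 dose still retains a bit under half its activity, while a carbon-11 dose is down to less than 2%. That gap is what makes it practical to produce FDG at one site and deliver it to hospitals elsewhere.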

It took some time to recognize the usefulness of FDG. A team at Brookhaven National Laboratory on Long Island, New York, synthesized an FDG radiotracer in 1976, but it took several years for their report to be published in a scientific journal. With the emergence of PET, FDG began to come of age.

However, another major system component had to be put into place to make PET a major success: a cyclotron to synthesize radioactive isotopes. In 1985, CTI bought Cyclotron Corporation, a bankrupt cyclotron maker, and adapted the technology for hospital use. By 1986, CTI could offer a complete system with PET scanner and cyclotron for $2 million USD.

In the meantime, the firm's management was looking around for a major corporate partner to help sell PET scanners worldwide. In 1985, Siemens of Germany bought a stake in CTI for $2.5 million USD, and then a few years later bought a 49.9% stake in the company for $30 million USD. (Siemens would buy out CTI completely in 2005 for about $1 billion USD.) In 1990, General Electric bought out Scanditronix, a Swedish competitor to CTI. That same year, the industry set up a non-profit trade association, the Institute for Clinical PET, to educate the public and promote the technology.

By 1991, the medical press was praising the usefulness of PET, with research showing how useful the technology was for brain, heart, and cancer imaging. However, regulatory issues then began to make trouble for PET. The difficulty was not really the PET scanner in itself, since it was a passive device, but the radioactive tracers, which required US Food & Drug Administration (FDA) approval. The tracers could be and were used, but without FDA approval Medicare would not pay for PET scans. The problem was that the FDA required clinical trials for the tracers that were just as thorough as if the tracers were a drug. Unfortunately, the Brookhaven team that came up with the FDG tracer hadn't bothered to patent it, meaning it was "open source", and so no company wanted to pay for the qualification.

Finally, Phelps pitched the benefits of PET to his friend, Senator Ted Stevens of Alaska, and Senator Stevens decided to go to bat for PET. In 1997, he added a provision to the FDA Modernization Act that instructed the FDA to streamline the agency's procedures for qualification of radioactive tracers, and to allow use of the tracers before qualification was obtained. Medicare soon began to reimburse PET scans. CTI was advancing along other fronts at the time, having set up the "PETNET", a network of pharmacies that could provide radioactive tracers to hospitals, and once Medicare gave the go-ahead, PETNET was available to support expanded use of PET scanners. PET technology began to take off rapidly.

PET was poised for greater things. In 1994, David Townsend, then an assistant professor of radiology at the University of Pittsburgh, and Ronald Nutt, one of the founders of CTI, applied to the US National Institutes of Health for a grant to develop a combined PET / X-ray computed tomography scanner. A prototype was installed at the University of Pittsburgh in 1998, with the first commercial PET/CT scanners going on the market in 2001. Now PET scanners have been largely replaced in the market by the combined PET/CT scanner. According to Townsend, a PET/CT scanner provides imagery that is easier to interpret and reduces scanning time.

PET has proven extremely valuable in helping to diagnose cancers and Parkinson's disease, and in helping to treat epileptics. It provides a sensitive tool for tracking the effect of drugs to treat tumors, since it can detect if a tumor has been "shut down" even before it starts to shrink. Although use of PET is still much lower than use of CT, PET is a growth market, with the biomedical community still figuring out everything they can do with it. [END OF SERIES]

* INFRASTRUCTURE -- FOOD & FARMING (4): Of course, there are many other crops besides wheat and corn, with each featuring its own distinctive technology.

Soybeans were almost unknown in the West before 1900, but in the second half of the 20th century they became a staple crop in the US and elsewhere. This was not because an enormous market for tofu emerged, but because soybeans are an excellent source of vegetable oil and protein, used in everything from margarine to cookies to animal feed. Soybeans also neatly complement the growing of corn. Both crops tend to use much the same equipment, and soybeans, being "legumes" like peas, return nitrogen to the soil, making soybeans a good "rotation" crop to cycle with corn, which depletes the nitrogen. Rotating crops also helps control pests, because pests that infest corn usually don't like soybeans, and vice versa.

Of course, orchards for raising apples, oranges, walnuts, and so on are common. Tending an orchard is much different from growing corn or wheat; considerable care must be given to individual trees in the orchard, since a tree can take years to come to maturity, and will produce fruit for years as well. Orange growers live in fear of snap frosts and keep an eye on the weather channel. Once the growers made bonfires to keep their oranges warm in a frost, but now they use big fans, which don't warm the air much but keep the frost from settling. Trees in an orchard are arranged in nice neat rows to maintain spacing and to allow access for machinery. A honeycomb (hexgrid) pattern would be more space-efficient, but it's more troublesome to lay out.
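The claim that a honeycomb layout is more space-efficient can be checked with a little geometry. This is a minimal sketch, assuming every tree needs the same minimum distance to its nearest neighbors and ignoring losses at the edges of the field; the function name `trees_per_hectare` and the 6-meter spacing in the usage note are hypothetical. In a square grid each tree owns an s-by-s square; in a hexagonal (triangular) grid each tree owns a hexagon of area (√3/2)s².

```python
import math


def trees_per_hectare(spacing_m: float, layout: str) -> float:
    """Hypothetical helper: approximate trees per hectare (10,000 m^2) for a
    given minimum neighbor spacing, ignoring edge effects."""
    if layout == "square":
        # Each tree sits at the corner of an s x s cell.
        area_per_tree = spacing_m ** 2
    elif layout == "hex":
        # In a triangular lattice each tree owns a hexagon of area (sqrt(3)/2) * s^2.
        area_per_tree = (math.sqrt(3) / 2) * spacing_m ** 2
    else:
        raise ValueError(f"unknown layout: {layout!r}")
    return 10_000 / area_per_tree


if __name__ == "__main__":
    for layout in ("square", "hex"):
        print(layout, round(trees_per_hectare(6.0, layout)))
```

At 6-meter spacing, the square layout fits about 278 trees per hectare and the hexagonal layout about 321. The ratio is always 2/√3, or roughly 15% more trees for the same spacing, which growers trade away for rows that are simpler to lay out and to drive machinery down.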

Vineyards are one of the last agricultural holdouts against automation. They consist of rows of trellises -- stakes with lines run between them -- on which the vines grow, spaced widely enough to permit pickers to move down the rows. There are grape harvesting machines, which straddle the row and run down it, knocking off the grapes, but they tend to be more the exception than the rule. Incidentally, a single grape vine may support several different grape varieties, with segments of vines grafted onto a single root; plants, unlike us, are not constrained by graft rejection.

Wood is a crop too. Much logging is performed on natural-growth timber, a practice that can be very politically controversial, but tree farming is done as well. Tree farming is particularly important for production of paper, which uses relatively small, fast-growing trees for "pulp". The trees are stripped of bark (which is then burned for fuel) and broken into chips, which are "pulped" into a soggy mush. The pulp is spread on a moving porous screen, where the water drains off; felt cloths and steam-heated rollers complete the paper production process, except of course for finishing, cutting into sheets, packaging, and distribution to end users.

Vegetables -- peas, carrots, cabbage, beans, tomatoes, squash, cucumbers, lettuce -- all have their own particular procedures and technology, but they share one consideration: there's a narrow window between unripe and rotten, and so they have to be harvested and processed quickly. A farmer may have to confront the unpleasant choice of buying an expensive machine that he only uses once every now and then, or trying to line up labor on the spot to do the job. There's also the problem that the packing plant can't absorb all the produce of all the farmers if, say, all the peas are picked at the same time. The result is that vegetable production is often done on a contract basis, with the supermarket chains or packing companies supplying the necessary harvesting equipment and coordinating its use.

Agrotech vegetables can have their limitations, the most despised example being the tomato. Advocates for organic farming can sometimes sound like religious fanatics, but they do have a point in that a typical grocery store tomato would hardly be described as very tasty. However, it is also obvious that the tastier home-grown tomatoes go bad a lot faster. Work has been performed to use genetic modification (GM) to produce a tomato that tastes good and lasts a long time. However, GM has encountered consumer resistance. GM technology does need to be treated with care, but some have suggested that the reaction against GM crops is so extreme that it has become the Left's answer to fluoridation of water supplies.

Cotton is a traditional crop in the US Southeast. It is notoriously labor-intensive and a picky crop on top of that, vulnerable to diseases and parasites. Its emergence as a crop did much to reinforce American slavery early in the 19th century, reversing the belief of late 18th-century Americans that slavery was on the decline. Now the labor is done mostly by machinery and chemicals. Since nobody eats cotton -- fans of Joseph Heller's novel CATCH-22 will remember Lieutenant Milo Minderbinder's futile attempt to dump a load of Egyptian cotton by coating it with chocolate and trying to pass it off as candy -- herbicides and pesticides are applied with little restraint, and there's less resistance to GM technology.

The cotton is harvested by first spraying it with defoliant, which strips off the leaves, making the bolls easier to strip off by machine. The machine packs the bolls into dirty white bales, like loaves of bread the size of a truck. The bales are hauled off to the local cotton gin, which separates the "lint", the white fibers that go into cloth, from the seeds, which are pressed for oil and so are valuable as well.

There are many more crops, some of them by no means obvious until they are pointed out. My original hometown of Spokane, Washington, in the US Northwest, is a major production center for Kentucky bluegrass -- somebody has to grow the seed for lawns, after all. When I was working in Corvallis, Oregon, the factory I spent my days at was next to fields of mint, which could generate a strong but not unpleasant odor downwind when the crop was ready to harvest. [TO BE CONTINUED]

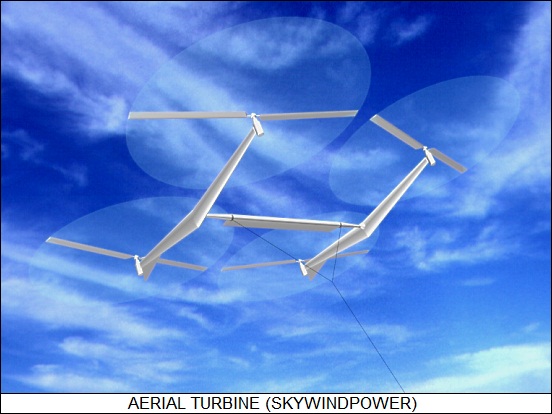

* GIMMICKS & GADGETS: POPULAR SCIENCE ran a series on alternative energy technologies in the July 2006 issue, which mostly "arrested the usual suspects" but did have a few new items of interest. One was a scheme by a California outfit named SkyWindPower to build wind turbines in the form of kites flown up into the jet stream, sending power back down their tethers. Each kite looks like an "H" character with four twin-bladed rotors, one at each corner of the "H". The idea seems a bit dodgy, but it does have its appeal: the jet stream is strong and constant, and the kites wouldn't be as hard on birds as ground-based turbines.
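The appeal of a strong, steady wind comes from how wind power scales: the power flowing through an area facing the wind is P/A = ½ρv³, where ρ is air density and v is wind speed, so doubling the wind speed gives eight times the power. The sketch below simply evaluates that textbook formula. The function name and the sample inputs (8 m/s near the ground, 30 m/s aloft, and an air density of roughly 0.41 kg/m³ near 10 km in the standard atmosphere) are hypothetical illustration values, not SkyWindPower figures.

```python
def wind_power_density(air_density_kg_m3: float, wind_speed_m_s: float) -> float:
    """Hypothetical helper: kinetic power flowing through 1 m^2 facing the
    wind, in W/m^2, from P/A = 0.5 * rho * v**3.

    A real rotor captures only a fraction of this; the Betz limit caps it
    at 16/27, about 59%.
    """
    return 0.5 * air_density_kg_m3 * wind_speed_m_s ** 3


if __name__ == "__main__":
    # Illustrative inputs only: sea-level air at a modest ground wind,
    # versus thinner air at jet-stream altitude with a much faster wind.
    ground = wind_power_density(1.225, 8.0)
    aloft = wind_power_density(0.41, 30.0)
    print(f"ground: {ground:.0f} W/m^2, aloft: {aloft:.0f} W/m^2")
```

With these inputs the ground case gives roughly 310 W/m² and the high-altitude case roughly 5,500 W/m². The thinner air at altitude costs about a factor of three, but the cube of the wind speed more than makes up for it.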

Of course, the article mentioned ethanol power, pointing out that work on "cellulosic" ethanol, obtained from waste biomass, is getting a leg up from termites, of all things. Termites can digest wood and the like with the help of bacteria colonizing their guts that break cellulose down into sugars. Efforts are now being made to sequence the genomes of such bacteria so they can be put to use in producing ethanol from cornstalks, sawdust, and grass clippings.

* In more "energy gimmick" news, WIRED.com reports on a new twist: A British firm named Facility Architects is working on the "Pacesetters" project, in which the tramp of pedestrians on the floors and walkways of high-traffic areas, such as subway stations, is collected by a hydraulic system and used to drive lighting. Each pedestrian can provide five watts or more. The project started out as an attempt to put electric generators into combat boots to drive a soldier's electronic gear, but boots get wet and muddy, which is hard on generating gear. There's a complementary effort to use piezoelectric generating systems to harvest power from passing road or rail traffic.

* An article on the MIT TECHNOLOGY REVIEW website considered yet another energy gimmick: the potential of "thermoelectric" materials -- which convert heat directly into electricity -- for vehicles.

Most of the energy released by burning fuel in an automobile engine is simply discarded as heat, with the remainder used to keep the car moving and to drive its alternator, which provides electric power to car systems. It would be elegant to get rid of the alternator and use the waste heat to generate electric power instead, increasing efficiency and in principle reducing complexity. New thermoelectric materials that could be wrapped around the exhaust pipe would do the trick.

At first, the thermoelectric system might be used just as a booster for a conventional automotive electrical system. Once efficiency rose enough, it could replace the alternator -- but that wouldn't be the end of the matter. A car's engine also drives oil and cooling fluid pumps, and decoupling those pumps from the engine by driving them with electric motors powered by a thermoelectric system would further boost efficiency.

Thermoelectric materials are often made of semiconductors. Such materials must have high electrical conductivities, but in general that means they have high thermal conductivities as well -- which reduces efficiency, since heat leaks straight through the material instead of being converted into electricity. New nanoscale materials may provide a solution, with crystal lattices that conduct electricity well but scatter the lattice vibrations that carry heat.
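The trade-off described above is usually summarized by a dimensionless figure of merit, ZT = S²σT/κ, where S is the Seebeck coefficient, σ the electrical conductivity, T the temperature, and κ the thermal conductivity. In metals and heavily doped semiconductors, the part of κ carried by electrons tracks σ (the Wiedemann-Franz law, κ_e = LσT), so only the part carried by lattice vibrations (phonons), κ_L, can be cut independently. That is the part nanostructuring targets. The sketch below uses these standard textbook formulas plus the usual expression for the best-case efficiency of a thermoelectric generator. The function names and every material and temperature value are hypothetical, chosen only to show the trend.

```python
import math

LORENZ = 2.44e-8  # W*ohm/K^2, the Sommerfeld value of the Lorenz number


def figure_of_merit(seebeck_v_per_k, elec_cond_s_per_m, lattice_k_w_per_mk, temp_k):
    """ZT = S^2 * sigma * T / (kappa_e + kappa_L), with the electronic
    thermal conductivity estimated by Wiedemann-Franz: kappa_e = L * sigma * T."""
    kappa_e = LORENZ * elec_cond_s_per_m * temp_k
    kappa = kappa_e + lattice_k_w_per_mk
    return seebeck_v_per_k ** 2 * elec_cond_s_per_m * temp_k / kappa


def max_efficiency(zt, t_hot_k, t_cold_k):
    """Best-case conversion efficiency for an average figure of merit zt:
    Carnot efficiency times (sqrt(1+ZT) - 1) / (sqrt(1+ZT) + Tc/Th)."""
    carnot = (t_hot_k - t_cold_k) / t_hot_k
    root = math.sqrt(1.0 + zt)
    return carnot * (root - 1.0) / (root + t_cold_k / t_hot_k)


if __name__ == "__main__":
    # Hypothetical material parameters, for illustration only.
    S, sigma = 200e-6, 1e5          # Seebeck coefficient (V/K), conductivity (S/m)
    t_hot, t_cold = 600.0, 350.0    # hot side near the exhaust, cold side (K)
    t_mean = (t_hot + t_cold) / 2   # evaluate ZT at the mean temperature
    for label, k_lattice in [("bulk", 1.5), ("nanostructured", 0.5)]:
        zt = figure_of_merit(S, sigma, k_lattice, t_mean)
        eta = max_efficiency(zt, t_hot, t_cold)
        print(f"{label}: ZT = {zt:.2f}, max efficiency = {eta:.1%}")
```

Cutting only the lattice contribution raises ZT and the efficiency without touching electrical conductivity. Note also that with κ_L driven all the way to zero, ZT can be no higher than S²/L. Raising σ alone can't escape the trade-off, because κ_e rises along with it.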

* Finally, an article on the "SuperGrid" in the July issue of SCIENTIFIC AMERICAN outlined a grand scheme for a new power grid, featuring underground superconducting power trunk lines cooled by liquid hydrogen flowing through their core, with the hydrogen also delivered as fuel at the ends of the lines.

I recall seeing grand schemes in the pages of SCIENTIFIC AMERICAN ever since I was a teenager and have to laugh a bit, since they rarely come to pass. I should keep an open mind, however; many of the things we take for granted nowadays started out as somebody's wild scheme. Every now and then one of them actually works. One of the things that I noticed in my career in industry is that only a small proportion of products are hits. Most are flops or just modestly profitable -- it's the few big hits that keep things going. It would be nice to be able to figure out the big hits in advance and not waste time on the rest, but if we could do that, we'd all be rich.

* SUPERTANKER: Each issue of AVIATION WEEK magazine usually has a "flight report" in which a magazine editor takes a ride, sometimes in the pilot's seat, in a particular type of aircraft. In the 31 July 2006 issue, William B. Scott reported on his ride as an observer in a huge, sleek four-engined jet as it maneuvered for a precision approach on a target. At the precise moment, the pilot punched the "pickle" button on his control yoke, plastering his payload over the target area and then zooming away.

The target was actually the airport at San Bernardino, California, and the payload was tens of thousands of gallons of water that created an artificial cloudburst. The aircraft, as described in the article ("Air Tankers Go Big Time"), was a Boeing 747-200 jumbo jet, modified by Evergreen Aviation to fight wildfires as the "747 Supertanker", and was on a tour to demonstrate just how powerful a tool the aircraft was.

The Supertanker began life as a "convertible" aircraft that was capable of being switched between cargo and passenger carriage. It was given a set of modifications:

The Supertanker was designed as a system that could be operated at any airport capable of handling a 747. Ground support equipment, aside from that normally required for a large commercial aircraft, consists of a fork lift and a large bladder tank that is filled from a fire hydrant. The Supertanker is rapidly reloaded from the bladder tank using a hose-and-reel system fitted into the aircraft.

Evergreen Aviation hasn't found it simple to sell the 747 Supertanker to the US Forest Service (USFS), but has been able to make a case. Obviously the Supertanker is more expensive to operate than existing tankers like the Lockheed P-3 Orion, but one Supertanker can carry the load of almost seven Orions, and it can get to the target area faster. One Supertanker could put a small fire out on its own, save a specific structure from incineration, or protect trapped fire-fighting crews by laying down a protective cover of moisture.

There were concerns that the big 747 isn't maneuverable enough to do the job, but its operational weight is over 90 tonnes (200,000 pounds) less than its maximum takeoff weight, giving it a substantial margin of power even when laden and a very large margin of power once it has dumped its load. In tests, the Supertanker easily followed a small lead aircraft into the target area, performed its run, and then departed like a rocket. There were also concerns that the flood of water would wash away firefighting equipment and crews, but laying down effective drop patterns hasn't proven particularly difficult.

The 747 Supertanker has a rival, the "DC-10 Supertanker" from the 10 Tanker STC group. The DC-10 Supertanker is a converted McDonnell Douglas DC-10 jumbo jet with a load of 45,400 liters (12,000 US gallons). Both aircraft will need to answer serious questions from the USFS and the US Federal Aviation Administration before they will go into the fight against forest fires. Meeting the challenges will take effort, but company officials involved in the supertanker programs don't regard the rules as unjust. Aerial fire-fighting is a difficult, dangerous business, and the regulations often came from bitter experiences that cost lives.

* REDEFINING THE PLANETS: The distant world Pluto was discovered before World War II and immediately designated the "ninth planet". In the decades after the war, continually improving observations of Pluto kept reducing its estimated size until it began to seem like a pretty feeble excuse for a planet, with a mass only about a quarter of a percent of the Earth's. Pluto is very shiny, which is why its size was overestimated.

Proposals to "demote" Pluto met with considerable public resistance -- but recently, astronomers have discovered distant worlds as big as Pluto, and they could no longer ignore the obnoxious issue of whether Pluto was a planet or not. Now, according to a BBC.com article, a draft document submitted to the International Astronomical Union (IAU) has proposed a precise definition of the term "planet". The definition involves two conditions:

The second condition can in general be met by any celestial body with a mass greater than 0.6% of that of our Moon and a diameter greater than 800 kilometers (500 miles). There may be borderline cases that require more observation to see if they belong to the planet "club".

Under the new scheme, the largest asteroid, Ceres, would become a planet, with a few other asteroids being considered as candidates. Pluto plus its comparatively big moon Charon -- the two bodies are close enough in size to be considered a "double planet" -- and a recently discovered distant world temporarily designated "2003 UB313" would now all be regarded as planets. However, the three worlds would be referred to as "plutons", characterized by having noticeably elliptical, high-inclination orbits, out of the plane of the orbits of the eight "classical" planets, and with the orbits taking 200 years or more to complete.

Other distant bodies have been discovered that may end up being classified as planets once they are better characterized. Some astronomers have found the whole "definition" exercise annoying, since no matter what the results are they won't learn a single new thing about the Universe, but it's one of those "paperwork" issues that won't go away until it's finally nailed down.

* ED: A week later, the IAU voted on the matter, and the end result was that a third qualification was added: a planet must clear its orbit of other objects. That rules out the plutons and big asteroids like Ceres, which become "dwarf planets". Now it's official -- there are only eight "real" planets in the Solar System.
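The scheme the IAU settled on can be written as a short decision procedure. The Python sketch below simplifies it under a few assumptions. Roundness is approximated with the draft's rule of thumb quoted above: more than 0.6% of the Moon's mass and more than 800 kilometers across. In practice, roundness is judged from observation. Whether a body has "cleared its orbit" is simply supplied as a flag. The `Body` class is hypothetical, and the masses and diameters are approximate illustrative values, not official figures.

```python
from dataclasses import dataclass

MOON_MASS_KG = 7.342e22  # approximate mass of Earth's Moon


@dataclass
class Body:
    name: str
    mass_kg: float
    diameter_km: float
    orbits_sun_directly: bool  # False for moons such as Charon
    cleared_orbit: bool        # assumed known; has it swept its neighborhood clear?


def probably_round(body: Body) -> bool:
    """Draft rule of thumb standing in for 'massive enough to pull itself round'."""
    return body.mass_kg > 0.006 * MOON_MASS_KG and body.diameter_km > 800.0


def classify(body: Body) -> str:
    """Simplified final 2006 scheme: planet, dwarf planet, or something smaller."""
    if not body.orbits_sun_directly:
        return "satellite"
    if not probably_round(body):
        return "small solar system body"
    return "planet" if body.cleared_orbit else "dwarf planet"


# Approximate, illustrative values.
bodies = [
    Body("Earth", 5.97e24, 12742.0, True, True),
    Body("Pluto", 1.3e22, 2370.0, True, False),
    Body("Charon", 1.5e21, 1200.0, False, False),
    Body("Ceres", 9.4e20, 950.0, True, False),
    Body("Vesta", 2.6e20, 525.0, True, False),
]
examples = {b.name: classify(b) for b in bodies}
for name, kind in examples.items():
    print(f"{name}: {kind}")
```

Under the draft's two conditions, only the roundness test and orbiting the Sun mattered. The final vote added the `cleared_orbit` check, which is what moves Pluto and Ceres into the "dwarf planet" bin.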

* A HISTORY OF PET (1): As reported by a survey in THE ECONOMIST ("The Power Of Positrons", 10 June 2006), modern medical imaging relies on a range of technologies based on different physical principles. One of the most important, "positron emission tomography", provides detailed observations of the internal state of patients from the emissions of radioactive materials injected into them.

The discovery of radioactivity at the end of the 19th century eventually led to the use of radioactive "tracers" in biomedical applications. A patient could be injected with a solution of short-lived radioactive material, and the movement of the material through the patient's body could then be traced. Radioactive tracers were a big step forward, but they left something to be desired. Traditionally, the tracer atoms were isotopes of heavy elements not generally found in the body. The atoms built up in tissues, and their accumulation was revealed on a photographic plate that recorded their gamma-ray emissions. The heavy atoms used could sometimes interfere with the body processes they were supposed to be tracking.

Oxygen, nitrogen, and carbon are common in our bodies, and so their short-lived radioactive isotopes oxygen-15 (with a half-life of two minutes), nitrogen-13 (ten minutes), and carbon-11 (20 minutes) are compatible with our biological processes. These radioactive isotopes emit a positron -- an antimatter electron -- when they decay. If they decay inside the body, the positron quickly encounters an electron, and the two particles annihilate each other, being converted into two gamma-ray photons that fly off in almost exactly opposite directions.
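With half-lives this short, a tracer's activity falls off very quickly. The decay law is N(t) = N0 × 2^(-t/T), where T is the half-life. The small Python sketch below applies it to the rounded half-lives given above. It estimates how much of each isotope would survive a hypothetical one-hour delay between production and use.

```python
# Rounded half-lives from the text, in minutes.
HALF_LIVES_MIN = {"oxygen-15": 2.0, "nitrogen-13": 10.0, "carbon-11": 20.0}


def fraction_remaining(half_life_min: float, elapsed_min: float) -> float:
    """Fraction of the original radioactive atoms left after elapsed_min minutes."""
    return 0.5 ** (elapsed_min / half_life_min)


for isotope, t_half in HALF_LIVES_MIN.items():
    left = fraction_remaining(t_half, 60.0)
    print(f"{isotope}: {left:.2e} of the starting activity left after an hour")
```

After an hour, carbon-11 retains an eighth of its activity. Oxygen-15 has gone through thirty half-lives and is essentially gone, so isotopes like these cannot be shipped far from where they are made.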

Once this decay process was understood, it seemed attractive to use it for biomedical applications. If a patient was injected with a positron emitter, the opposed gamma-ray photons could be picked up by sensors on either side of the patient. Since the two photons arrive at essentially the same moment, a simultaneous hit on two detectors places the site of the electron-positron annihilation on the line joining them. That site is presumably very close to the location of the radioactive isotope that produced the positron. Measuring the difference in the photons' arrival times can, in principle, narrow it down further along that line. In the early 1950s, Gordon Brownell and William Sweet of the Massachusetts General Hospital experimented with such a device, pinning down the location of a brain tumor in a patient by injecting a radioactive tracer into the patient and then rotating an opposed set of detectors around the patient's head.
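The arrival-time idea comes down to one line of arithmetic. Suppose detector A sits at -L/2 and detector B at +L/2, with an annihilation at position x between them. The photons reach A after (x + L/2)/c and B after (L/2 - x)/c, so x = c × (tA - tB) / 2. The Python sketch below, with a hypothetical function name, simply evaluates that formula. Light covers about 30 centimeters per nanosecond, so timing must be good to a few hundred picoseconds just to place an event within several centimeters. In practice, the line between the two detectors that fire together does most of the localizing, and arrival-time differences serve as a refinement.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def offset_from_midpoint_m(t_a_s: float, t_b_s: float) -> float:
    """Position of an annihilation along the line between detectors A and B.

    Detector A is at -L/2 and detector B at +L/2. A negative result means
    the event was closer to A (its photon arrived at A first).
    """
    return SPEED_OF_LIGHT_M_PER_S * (t_a_s - t_b_s) / 2.0


# A 500-picosecond arrival-time difference.
print(f"{offset_from_midpoint_m(500e-12, 0.0) * 100:.1f} cm")  # prints 7.5 cm
```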

One big problem with the scheme at the time was the fact that the appropriate tracer isotopes decayed very quickly, which made them safer for use in patients but also meant that they had to be synthesized in a cyclotron particle accelerator shortly before use. Another problem was that results from a scan were in the form of raw data that had to be deciphered by an expert, and even then with difficulty. However, to some the potential was obvious. In 1966, the late Michel Ter-Pogossian, then head of the division of radiological sciences at Washington University in Saint Louis, and Henry Wagner, professor of radiology and medicine at Johns Hopkins University in Baltimore, published an influential paper advocating use of positron emitters in medical imaging. At the same time, the emerging computer revolution led to the introduction of "computed tomography (CT)", in which computer power was used to render piles of raw X-ray data obtained from axial scans of patients into much more easily inspected imagery.

In late 1972, Ter-Pogossian and Michael Phelps, a young assistant professor who worked in Ter-Pogossian's lab at Washington University, went to a discussion of computed tomography and came back home thinking it would make medical imaging using positron emitters much more useful. Their lab group began work on prototypes. To obtain more funding, in 1973 Phelps got in touch with Terry Douglass, the chief engineer of the life-sciences division of EG&G ORTEC, a scientific instrument company in Oak Ridge, Tennessee. Long-distance conversations between the two men led to Phelps driving from Saint Louis to Oak Ridge in his Volkswagen Beetle, accompanied by his colleagues Nizar Mullani and Edward Hoffman. They sold Douglass on the concept, and he loaned them some equipment needed for the research effort.

An early prototype of what they called the "positron emission transaxial tomograph" machine -- the "transaxial" would soon fall out, giving the acronym PET -- consisted of a table with a hole in it that was ringed by gamma-ray detectors, but by 1974 the group had built a PET scanner that was modern in form, with the patient on a sliding bed and the gamma-ray sensors arranged as a vertical hexagonal ring. By this time, the researchers and EG&G ORTEC had a partnership going, and the first commercial PET scanner, built by EG&G ORTEC, was shipped to the University of California at Los Angeles (UCLA) in 1976; Phelps and Hoffman had moved to UCLA by that time and were able to arrange the purchase of the device.

EG&G ORTEC sold a very small number of PET scanners for research purposes, but the group that had developed the technology felt it had much more potential. And then, in 1983, it all fell into their lap: EG&G ORTEC decided to sell off the company's life sciences division, so Douglass and some of his colleagues managed to obtain $2.5 million USD in funding to buy up the PET technology operation, forming a company named "Computer Technology & Imaging (CTI)". Phelps was an adviser to CTI. [TO BE CONTINUED]

* INFRASTRUCTURE -- FOOD & FARMING (3): In farm country, distributorships of farm gear are common, featuring rows of machines painted green or yellow or orange or red. It may be hard for a novice to even figure out what some of the gear does.

Of course, few have any confusion over the plain old farm tractor, used to pull a wide variety of farm implements over the fields. The adjectives "plain" and "old" are a bit misleading, however, because a modern farm tractor is a fairly high-tech piece of gear. The classic farm tractor had big wheels in the rear to get traction over soft ground and small wheels in front for steering, with the farmer perched on a metal pan seat. Times have changed: now a farm tractor usually has four wheels, or sometimes eight (four duals), often with all-wheel steering for maneuverability and to ensure that the rear wheels follow the tracks of the front wheels, minimizing damage to a field.

The tractor is run by a diesel engine and has a wide selection of both forward and reverse gear ratios. Like its ancestor, the modern tractor has a "power take-off" that links its engine through an external shaft to various sorts of machinery, such as a hay baler or mower. The farmer now rides in an air-conditioned cab with a nice sound system to listen to Randy Travis on CD, sometimes even using a computer control system following a Global Positioning System (GPS) satellite map to work the field according to a predetermined plan, laying down precise amounts of pesticide or fertilizer as needed. Some tractors will follow the plan automatically, with the farmer simply riding along as a supervisor.

* When it comes time to harvest, the tractor gives way to a self-propelled "combine harvester", a direct descendant of Cyrus McCormick's horse-drawn reaper of 1831. The reaper was followed by the threshing machine, which separated the wheat from the chaff. In the early 20th century the two functions were "combined" in one machine -- hence the name.

In the days when harvesting was done manually, the wheat had to be cut with scythes and bound into sheaves for collecting. It was threshed on the barn floor by being beaten to knock out the wheat kernels, and then "winnowed", or tossed into the air, to let the wind carry off the lighter chaff. A combine handles this entire process automatically. A wide cutter head chops off the stalks of wheat, which are then carried by conveyor belts into a spinning drum fitted with "rasp bars" to knock the kernels off the stalks. The kernels fall through a perforated floor, while the straw is carried away on vibrating belts or chains called "straw walkers" that shake loose any remaining grain. The straw is dumped out the rear, either intact so it can be gathered up, or chopped up to act as mulch. The wheat collected in these processes is blown by a high-powered fan to blast away the chaff, with the combine leaving a prominent plume of dust in its wake. Some combines have pressurized cabs to keep the dust out.

The grain collects in an internal bin that holds several tonnes, but the combine is so productive that the bin fills quickly, so it is emptied through a chute into a truck about every half hour. Combines have a low utilization rate, since they are only used at harvest time, but crops won't wait long to be harvested, and having a machine that can do the job in a big hurry pays off. The combine will be run almost continuously until the crop is in. Other types of crops -- cotton, tomatoes, sugar cane -- have their own specialized harvesting machines, each worthy of a detailed discussion by itself, but for brevity they can only be mentioned here.

* Once the farmer has harvested the crop, it has to be stored. Farmers who use grain to feed their own livestock will have a granary on their farms, in the form of a big corrugated galvanized metal drum with a conical roof. Grain is carried to the tip of the cone using a device such as an "auger", a long tube with a rotating screw inside. The granary may be fitted with a fan and an oil- or gas-fired heater to dry the grain, preventing it from getting moldy.

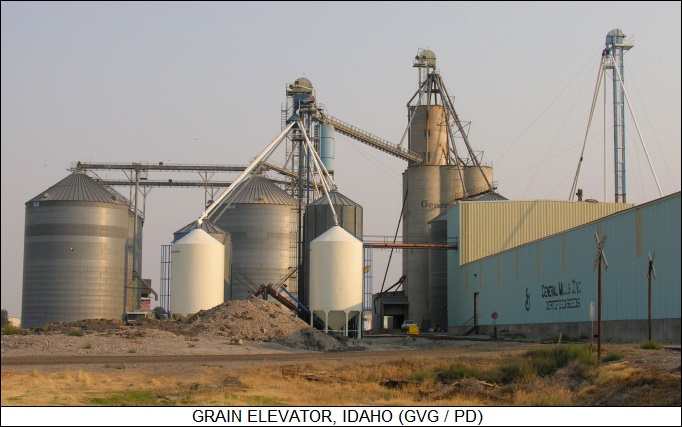

If the grain is going to be sold, farmers deliver it to a centralized "grain elevator", usually sited in the local farming community and often run as a farmers' cooperative. Trains or barges pick up the grain from these "country" elevators and move it to huge "terminal" elevators. The terminal elevators either hold it for sale to bulk buyers at home or abroad, or are sited at food processing plants.

A grain elevator "elevates" the grain using a chain of buckets (called a "leg" for some reason). Traditional grain elevators consist of a row or double row of concrete silos, with a leg at the end of each row feeding a conveyor belt that transfers the grain to the appropriate bin. A conveyor at the bottom transfers the grain back out again when it is needed. These days, a grain elevator usually consists of a set of corrugated metal drums like those used for farm granaries, but bigger. A central bucket elevator feeds an "octopus" system that distributes grain to the appropriate drum through a rotary selector mechanism.

At harvest time, trucks arrive at the elevator to have their contents sampled through a probe called a "trier" that looks something like a concrete pumper, though the flow is in the reverse direction. The samples are tested for moisture and contaminants. Once cleared, a truck dumps its load through a grate into a "boot", where the grain is then hoisted up a leg into the elevator. The grain may need to be dried before it is stored, being dumped through a "tower dryer", a tall metal structure where the grain falls down through a warm column of air.

Handling and storing grain generates a fine dust that can produce a disastrous explosion if ignited by a spark. Vegetable oils may be used to suppress the dust, or it may be filtered out using "socks" attached to the vents. Care is taken to avoid electrical sparks; the operation of a leg tends to build up static electricity, and so legs are placed outside the main elevator structure. The elevator is fitted with explosion vents that direct any blast outward, reducing the damage.

* The first step in processing grains for consumption is, of course, milling. This is another process in agriculture with a long history and a surprising level of refinement. An old-fashioned mill, driven by wind, water, or horse power, ground the grain between two disk-shaped stones, the one on the bottom being fixed and the one on the top rotating. The stones were "dressed" with grooves in a radial or arc pattern. The stones were kept very slightly separated so they wouldn't grind directly against each other and wear themselves out.

In a modern mill, the grain is milled by feeding it through pairs of cylindrical steel rollers, which are also dressed with grooves or ridges in spiral patterns. The two rollers run at slightly different speeds to apply a combination of crushing and shearing forces. The objective, however, is not to directly grind the wheat into a powder. A wheat grain consists of an outer hull, a layer of starchy endosperm or "middling" underneath, and a core or "germ". The hull has to be removed first, becoming bran, and then the middling has to be taken off the germ. The middling, incidentally, is the most important part of the kernel, the basis of ordinary flour. After this selective milling process, the components are separated by sieving or sifting: the mix is spread out over a screen or cloth that is shaken, with the material falling through becoming "unders" and the material left on top becoming "overs".

Corn can be "dry milled" in much the same way, the result being the cornmeal that is used to make cornmeal muffins. Dry-milled corn also ends up in cornflakes and whiskey. However, most corn is "wet milled", the kernels being soaked in warm water for a few days, where they ferment a bit. The end result is then ground up, with bran, endosperm, and germ separated by washing steps. Once again, the starchy endosperm is the most important end product. The bulk of it is treated with acids or enzymes to become corn syrup and fructose, the primary industrial sweeteners, which are hauled off in rail tank cars to snack factories.

The germ is pressed for corn oil, with the residue mixed with bran and leftovers from syrup / fructose production to become high-protein animal feed. Very little of the corn is wasted.

* These days, corn farmers are also making money by brewing ethanol as an additive for automotive fuel. The process is exactly the same as that used for distilling corn whiskey, just scaled up tremendously. Ethanol's a good deal for corn farmers, but about the only real benefit that consumers obtain is that it burns cleaner than gasoline and produces fewer pollutants. It ends up costing about the same as gasoline, and in fact some critics claim it takes more energy to produce corn ethanol than it delivers. That's a minority view, but even advocates admit that the margin between fuel in and fuel out isn't very impressive.

Nobody seriously sees corn ethanol as an answer to fuel shortages, though there is ongoing work in developing "cellulosic" ethanol production processes that could use plant waste, such as corn stalks, as a feedstock, with advocates claiming that in maturity ethanol costs could be cut in half or more. However, so far nobody has been able to produce cellulosic ethanol at prices that are remotely competitive. [TO BE CONTINUED]

* ADWARE WARS: A recent BUSINESS WEEK article ("The Plot To Hijack Your Computer" by Ben Elgin, 17 July 2006) took a microscope to the "adware" industry -- the friendly folks who try to sneak adware onto our computers to pop up ads in our faces, with the focus mostly on an organization named "Direct Revenue". Although the article covered a lot of ground that's very familiar to the computer-literate, it also revealed some interesting new information.

For one, the big Internet advertisers such as Yahoo! and Google AdSense end up with some complicity in adware schemes, if not by intent. Website operators often put Google AdSense banner ads on their websites and get a cut of the revenue the ads generate. The adware companies are basically operating the same sort of business, displaying ads for the big Internet ad service providers and taking their cut. Although the ad service providers do have rules on how the advertising should be performed, it's hard to police exactly what the ad displayers are really doing. Another interesting item in the article was how bitterly the adware companies fought against each other, creating software "torpedoes" that would seek out rival adware on a user's PC and then delete it. Of course, this was likely to do damage to the PC's operating system as well.

That's the worst aspect of adware: beyond the nuisance of the pop-ups, the adware is intrusive and so tends to break things. Some adware programs, including Direct Revenue's Aurora and a similar adware program from competitor CoolWebSearch, are so badly designed that they often cripple PCs without ever displaying a single ad.

Not surprisingly, adware organizations like Direct Revenue often began as legitimate advertising organizations but gradually fell prey to greed. They start out trying to play by the legal rules, only to cut more and more corners over time, for example doing their best to hide or obscure the fine print the law demands they display to unsuspecting PC users before downloading adware. New York Attorney General Eliot Spitzer -- a photo shows a classic sharp-edged New Yorker, along the lines of Rudolph Giuliani -- is now blasting Direct Revenue with a lawsuit for false advertising, computer tampering, and trespassing. Direct Revenue spokesmen insist the company is playing by the rules and the suit has no merit. The company does admit to getting a lot of feedback featuring sharp language and the occasional death threat. One user commented: "If God exists, He hates you."

* ED: According to the article, Direct Revenue staffers saw naive computer users as their prime targets, calling them "trailer cash". I'm a fairly careful PC user and I don't believe I have any active malware on my computer. When I run into a dodgy website that seems to be doing something tricky or my PC begins to act like it is developing a mind of its own, I restore my PC to a configuration from a few weeks back and it starts behaving again.

Usually when a website tries to pull a fast one on me by downloading something, I simply kill the web browser, but even if the download wasn't completed, it can jam up the workings of the PC anyway -- sometimes to the point of making a system restore a seriously anxious exercise. Microsoft has been fairly diligent in working on defenses, but it's an arms race -- I've noticed that an increasing number of websites are able to defy the Internet Explorer pop-up blocker.

I do get the feeling that I have broken bits of inactive adware and spyware on my PC. It reminds me of retroviruses such as HIV, the virus that causes AIDS. Retroviruses operate by inserting their genes into the genomes of the cells they infect. Over the history of our species, a number of different retroviruses inserted their genomes into the egg or sperm cells of our ancestors, and the now-broken DNA patterns of these invaders can still be detected in our own genome. Mother Nature is a spammer.

* SURGERY INSIDE OUT: One of the real advances of modern medicine in recent decades has been the development of laparoscopic surgical techniques, in which complicated surgeries can be performed through a small incision using various sorts of remote-manipulation surgical tools. The approach means much less injury to the patient and usually far shorter hospital stays, or even outpatient treatment.

Now, according to an article in THE ECONOMIST ("Invisible Mending", 10 June 2006), some surgeons are refining the technique to allow them to perform surgeries without any external incisions at all. The dodge is fairly drastic, however: the surgeries are performed by inserting laparoscopic tools down a patient's throat and performing the surgery through the stomach walls instead of the skin.

Promoters of "transgastric surgery" include Dr. Paul Swain of Imperial College, London, who pioneered swallowable wireless camera capsules, and Dr. Dmitri Oleynikov of the University of Nebraska Medical Center in Omaha. Transgastric surgery might sound like gross overkill since performing remote-controlled surgery through the stomach and then stitching up the patient is obviously not easy, and it certainly doesn't sound all that safe. Most of us do not have the sort of high-class body where a small abdominal incision is much of a problem.

However, although advocates admit that the cosmetic benefit is the main appeal of the scheme, they also argue that transgastric surgery gives far better access to organs than external surgery, and Swain believes that gastric incisions are less traumatic and heal faster. As for the clumsiness of the approach, that's certainly a problem, but Oleynikov has been working with University of Nebraska researchers to build tiny cylindrical robots, 1.5 centimeters in diameter and 8.5 centimeters long (0.6 x 3.35 inches), that use twin wheels with a corkscrew thread to maneuver through the interior space of the patient.

The whole concept remains experimental, but it is interesting to consider how far the notion of tiny robots navigating through a patient might be taken. A surgeon might be able to pilot a robot using a virtual environment, in effect taking a real-life "fantastic voyage".

* ELECTRONIC ICU: As reported in an article in BUSINESS WEEK ("The Doctor Is (Plugged) In" by Timothy J. Mullaney, 26 June 2006), in 2000 the nonprofit Sentara Healthcare system in Norfolk, Virginia, had a problem: the intensive care units (ICUs) in the seven hospitals in the system had costs three times higher than the rest of the operation, often got frazzled doctors out of bed in the middle of the night to handle emergencies, and -- worst of all -- had a mortality rate running up to 40%.

Then Sentara's CEO, David L. Bernd, got a cold call from a salesman from Visicu Inc. in Baltimore, Maryland. Almost nobody likes salesmen calling out of the blue, but this one had a pitch that made Bernd sit up and listen: Visicu wanted to sell Sentara an "electronic ICU" system that used modern networking to link ICUs with the resources needed to run them. Now Sentara operates an eICU system at a total of eleven ICUs distributed over six hospitals, with company officials wondering how they ever got along without it. Costs have dropped and survival rates risen dramatically. The Visicu system paid off its $1.6 million USD price tag in only six months. The average ICU stay fell from 4.4 days to 3.6 days.

The Visicu eICU keeps an electronic watch on patients with cameras, sensitive microphones, and sensor systems. Patients also have an emergency button they can press if things get dodgy. There are local staff, but the experts are consolidated into a central control center filled with video displays and computer readouts that's staffed in shifts, 24 hours a day, 7 days a week. The system software gives alarms when a patient undergoes a dangerous change in condition, and prioritizes patients to place those who need the most immediate care at the top of the list. The system also provides database information on patients and treatments.
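The article doesn't describe how Visicu's software actually ranks patients. The Python sketch below only shows one common way to keep a worklist ordered so the most urgent case is always on top: a priority queue built on the standard `heapq` module. The `AlarmQueue` class, the bed names, and the integer severity scores are all hypothetical.

```python
import heapq
import itertools


class AlarmQueue:
    """Hypothetical triage list: highest severity first, ties broken by arrival order."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._counter = itertools.count()  # arrival order for tie-breaking

    def raise_alarm(self, patient_id: str, severity: int) -> None:
        # heapq is a min-heap, so negate severity to pop the worst case first.
        heapq.heappush(self._heap, (-severity, next(self._counter), patient_id))

    def next_patient(self) -> str:
        _, _, patient_id = heapq.heappop(self._heap)
        return patient_id

    def __len__(self) -> int:
        return len(self._heap)


queue = AlarmQueue()
queue.raise_alarm("bed-3", severity=2)
queue.raise_alarm("bed-7", severity=5)
queue.raise_alarm("bed-1", severity=5)
order = [queue.next_patient() for _ in range(len(queue))]
print(order)  # ['bed-7', 'bed-1', 'bed-3']
```

The arrival counter keeps equally severe alarms in first-come order and stops the heap from ever comparing patient names. A real system would also need to re-rank a patient whose condition changes while the patient is still waiting in the queue.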

It all sounds sterile and mechanized, but patients seem to like it, feeling that the new system is keeping a much closer eye on them than the old. The statistics seem to bear that out: many of the ICU fatalities were from opportunistic infections -- blood infections and pneumonia -- and the early signs of infections are now spotted much more quickly and reliably.

The eICU is still far from the norm in US hospitals. The up-front cost is hard for small hospitals to bear, and the cost of maintaining the system is not cheap either -- though Sentara certainly found it much cheaper than what they'd had before. Insurance and Medicare don't cover eICU costs yet, and eICUs also suffer from one of the classic problems of such electronic systems: lack of standardization. The eICU system doesn't neatly link into existing hospital record systems.

Anybody with any knowledge of such system integration issues knows they are a nightmare and can sometimes be all but impossible to fix. However, eICUs are clearly an idea whose time has come. It is estimated that the nation's ICUs require the support of 54,000 specialists to do the job right. With only about 6,000 specialists actually available, technology is just going to have to help carry the weight.

* PACHINKO WORLD: Anyone familiar with Japanese pop culture knows about pachinko, a game that resembles a scaled-down vertical pinball machine and provides a payoff. In Japanese pop stories, pachinko parlors are the place where slacker salarymen hang out when they're goofing off. However, according to an article in THE ECONOMIST ("Rules Of The Game", 29 July 2006), that image is somewhat out of date.

Gambling and pachinko have never been particularly respectable. In Japanese samurai stories, the villains are often gangs of gamblers, much as Wild West stories feature gangs of cattle rustlers. Pachinko parlors are seen as seedy, and the number of pachinko players has dwindled from 29 million a decade ago to 18 million today. Even at that, Japanese still spend 30 trillion yen -- about $260 billion USD -- on pachinko parlors, about as much as is spent on health care in Japan, and pachinko parlors are believed to account for about half of all Japanese consumer borrowing. Every summer, babies die when pachinko-playing parents leave them in hot cars. There are concerns that some pachinko parlor owners rig their machines to give fewer but bigger payouts, and worries that the owners evade taxes or send profits to North Korea -- parlors are often run by Japanese of Korean origin.

Despite the problems, pachinko persists, and the industry has been given a shot in the arm through the introduction of "pachislot", which combines features of pachinko and slot machines. The introduction of a new pachislot, "Hokuto No Ken" -- "Fist Of The North Star", modeled on a popular martial arts manga / anime (comic / cartoon) series -- was a big hit among those who remain faithful. Pachislot arose when the authorities loosened the rules for pachinko parlor game design, and now about two thirds of the machines in a parlor are pachislots.

The pachislot's big appeal lies in the fact that payoffs can accumulate, permitting jackpots of up to a million yen -- about $8,700 USD. This has made the authorities nervous. Casino-type gambling is actually illegal in Japan; payoffs in pachinko parlors are in the form of "prizes", which as it turns out can always be conveniently and legally redeemed for cash at a shop across the street. In 2004, the rules were changed to cut the maximum payoff by 80%, but pachinko and pachislot machines only have to be relicensed once every three years, so the big jackpots will survive into 2007.

After that, so it is hoped, pachinko and pachislot will become simply a form of entertainment rather than gambling, though nobody has any idea what that will mean for the industry. Gambling may be unsavory to Japanese, but lawmakers everywhere like tax revenues, and Japan has a big budget deficit. A committee in the ruling Liberal Democratic Party is considering the possibility of legalizing casino-type gambling in Japan. After the drought, the deluge?
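The dollar figures in this piece imply an exchange rate of roughly 115 yen to the dollar; that rate is inferred here from the article's own conversions, not stated in it. Under that assumption, a minimal sketch of what the 2004 rule change does to the top jackpot:

```python
# Assumed exchange rate, inferred from the article's conversions
# (1,000,000 yen is about $8,700; 30 trillion yen is about $260 billion).
YEN_PER_USD = 115


def yen_to_usd(yen: int) -> float:
    """Convert yen to US dollars at the assumed exchange rate."""
    return yen / YEN_PER_USD


OLD_MAX_JACKPOT_YEN = 1_000_000
CUT_PERCENT = 80  # the 2004 rules cut the maximum payoff by 80%

# Integer arithmetic keeps the yen amount exact.
new_max_jackpot_yen = OLD_MAX_JACKPOT_YEN * (100 - CUT_PERCENT) // 100

for label, yen in [("before the cut", OLD_MAX_JACKPOT_YEN),
                   ("after the cut", new_max_jackpot_yen)]:
    print(f"{label}: {yen:,} yen (about ${yen_to_usd(yen):,.0f})")
```

Once the old machines come up for relicensing, the top payoff drops to 200,000 yen, on the order of $1,700 at the assumed rate.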

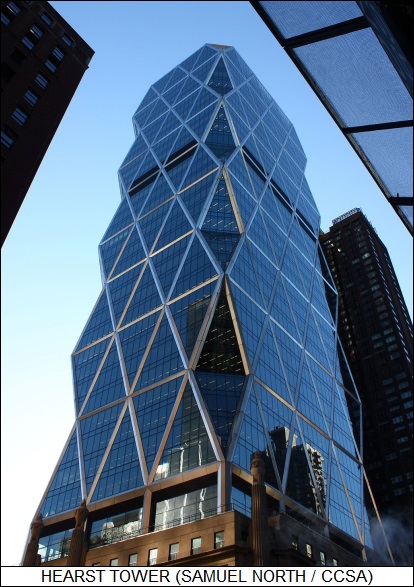

* ENDURING SKYSCRAPERS (2): Modern skyscrapers can aspire to heights and shapes that would have been inconceivable a century ago, with innovation being driven by improvements in materials, lifts (elevators if you prefer), and computing power.

In 1922, the German-American architect Mies van der Rohe produced a design for a skyscraper as a cluster of glass-walled cylindrical towers -- without the heavy-duty "central core" that supported contemporary skyscrapers -- that seemed to defy gravity. It certainly defied practicality, there being no way at the time to build such a structure so it would stand up, and no way to produce glass that was light and flexible enough to implement the glass walls.

Glass technology has advanced dramatically since then, making the vision of van der Rohe's glass towers seem stodgy. A fine modern example is the science-fictional 30 Saint Mary Axe building in London, known as the "Gherkin" and now as much a trademark of the city as Big Ben. The new Hearst Tower in New York City goes beyond van der Rohe's aesthetic vision, incorporating concepts of "green" design that would have been hard to imagine in 1922. Modern glass can be formed into shapes that support such designs, with thin-film coatings that let light in while keeping heat out, and "self-cleaning" glass that reduces the need for hazardous window cleaning. Architects like to use glass for skyscrapers because it not only lets light into the structure, but also helps keep the structure from turning the streets below into dark canyons.

Of course, lighter materials help buildings go higher, with thinner floors and walls taking advantage of such innovations as "slim-line" insulation made of fiberglass and aluminum foil -- an idea borrowed from containers built to transport blood. Lighter floors and walls do pose engineering challenges, with wide, light floors tending to bounce like trampolines.

Modern architects are also working on van der Rohe's concept of getting rid of the central core, or at least distributing it. The central core design tends to run out of steam for buildings more than 200 meters (660 feet) tall. One direct solution is to build "outrigger" structures, which allow the building to grow almost indefinitely. The latest claimant to the title of world's tallest building, the Burj Dubai, uses this approach, resulting in a building that is strongly reminiscent of prewar art deco futurist concepts and wouldn't have looked out of place in old sci-fi epics. The actual height of the Burj Dubai, incidentally, won't be announced until the building is completed, in hopes of trumping rivals.

Another approach is to dispense with the core and construct the building using a web of steel struts, resting on a set of piers. This allows the lifts to be distributed around the building or even around the outside of the building. The result is distinctive and light in appearance; one of the best-known examples is the HSBC building in Hong Kong, which somehow suggests a truss bridge stood on end.

Such innovations have produced the 509-meter (1,670-foot) Taipei 101 tower in Taiwan, for the moment the tallest building in the world. Obviously such tall buildings impose big demands on lift technology, since nobody would want to work in one if it took an hour to get from the lobby to the office. Most tall buildings have two sets of lifts, one for the lower floors and one for the upper. For the really tall buildings, even that isn't enough, so designers have resorted to putting two lifts in each shaft and building in "sky lobbies" where riders can transfer between lifts. Scheduling becomes an issue when lift systems are so complicated: a Finnish lift company named KONE is developing a scheme in which users call on their cellphones to obtain instructions for which lift to take in a building.
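The sky-lobby arrangement amounts to a small routing problem: given a destination floor, tell the rider which lifts to take and where to transfer. The sketch below is a hypothetical illustration only; the floor numbers, bank names, and the `route_to_floor` function are invented for this example and do not describe KONE's actual system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LiftBank:
    """A group of lifts that riders board on one floor and that serve a range of floors."""
    name: str
    boards_at: int  # floor where riders get on this bank
    lowest: int     # lowest destination floor served
    highest: int    # highest destination floor served


# Hypothetical layout: ground lobby is floor 0, one sky lobby at floor 40.
GROUND_LOBBY = 0
BANKS = [
    LiftBank("low-rise bank", boards_at=0, lowest=1, highest=20),
    LiftBank("mid-rise bank", boards_at=0, lowest=21, highest=39),
    LiftBank("express shuttle", boards_at=0, lowest=40, highest=40),
    LiftBank("upper-floor bank", boards_at=40, lowest=41, highest=80),
]


def bank_for(floor: int) -> LiftBank:
    """Find the bank that serves a destination floor."""
    for bank in BANKS:
        if bank.lowest <= floor <= bank.highest:
            return bank
    raise ValueError(f"no lift serves floor {floor}")


def route_to_floor(dest: int) -> list[str]:
    """Return rider instructions from the ground lobby to dest, transferring at sky lobbies."""
    if dest == GROUND_LOBBY:
        return []
    bank = bank_for(dest)
    # First get to the floor where this bank boards (recursively), then ride it.
    return route_to_floor(bank.boards_at) + [f"take the {bank.name} to floor {dest}"]


print(route_to_floor(12))
print(route_to_floor(63))
```

A rider headed for floor 63 is told to take the express shuttle to the sky lobby and then transfer. A real scheduler would also have to pick individual cars and batch riders going to nearby floors, which this sketch ignores.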

Such scheduling requires computing smarts. Such smarts have in general made building super skyscrapers easier. Once upon a time, before a skyscraper was completed it only existed as a set of elaborate blueprints, but now such structures can be simulated on a computer, permitting more complicated forms than previously possible. The simulations can model how the building would tolerate an airliner crashing into it, and how effectively it could be evacuated in an emergency.

The sophistication of modern skyscraper design means that it is easier to build "green" structures, like the Hearst Tower already mentioned, that make use of natural light and ventilation. Advocates claim such structures are healthier places to work, since they don't recycle air that may contain pathogens from sick workers. Companies certainly like to show the public how green they are, but such buildings also pay off by using a third less energy than conventional designs.

* In the end, however, skyscrapers rise on economics, and that remains the biggest obstacle to getting them built. One of the real problems with a skyscraper project is that the construction cycle is very often about as long as the economic cycle, which is why the Empire State Building ended up being completed in 1931, during the Great Depression, and acquired the nickname of the "Empty State Building".

Still, people keep building higher and higher. Skyscrapers have a definite appeal, with publicity for the Empire State Building capturing the exhilaration of looking out from the viewing gallery, saying it was better than flying. It's worth remembering, however, that the gallery was only built because no occupants could be found for the space. [END OF SERIES]

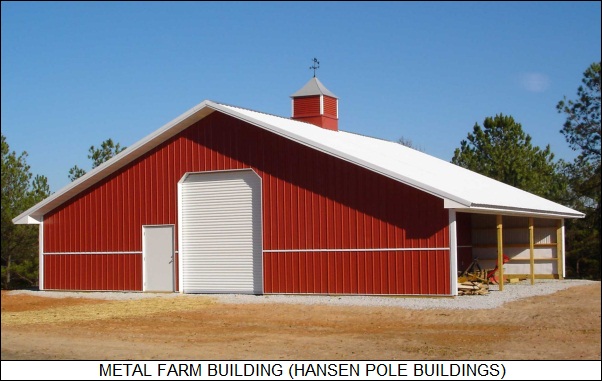

* INFRASTRUCTURE -- FOOD & FARMING (2): One of the most distinctive features of a farm is of course the barn -- or at least it is in the USA; in many other countries, individual buildings are used to stable horses, keep cattle, and store feed and grain, but in the US the tradition has been to consolidate these functions in one big structure.

A "classic" barn had two levels: a lower level where the animals were stabled, plus an upper level where grain was threshed and binned and baled hay was stored. A third level, a hayloft, might be used to store the hay instead. An earthen ramp was piled up to allow "drive up" access to the upper level; barns might also be built on hillsides to allow direct access to the upper level.

There are variations on barn styles. In Appalachia, the "upland crib barn" was common, consisting of what amounted to an overgrown corn crib with additions tacked on. In New England, "connected barns" were built, featuring covered walkways to the other elements of the farm so the farmer didn't have to wade through hip-deep snow in the winter to do his chores. The "Pennsylvania barn", based on Swiss and German farm structures and once common in Virginia and Maryland as well as Pennsylvania, featured an upper level extending well out over the lower level on one side to provide an open-air sheltered space. The "three-bay barn" had a spacious central area flanked by stalls on either side.

The popular image of a barn is the "Dutch barn", with a "gambrel" (double-sloped) roof and doors at the ends, not the sides. The Dutch barn is not actually known in the Netherlands; it may have German origins, since Germans ("Deutsch") were often called "Dutch" in 19th-century America. It is actually a fairly recent invention, not known before the US Civil War, with 20th-century Dutch barns sometimes having curved roofs. There is a smattering of circular barns in the USA, but they're rare.

Traditional barns feature details such as good ventilation for the hayloft; a roof extension known as a "hay hood" from which a pulley can be hung for hauling, say, bales of hay up and down; hooks for hanging the carcasses of livestock for butchering; and "owl holes" where owls are invited to nest so they can patrol the barn for mice, rats, and other varmints. Incidentally, barns are not necessarily painted red.

The traditional barn is still around but seems to be obsolescent, being increasingly replaced by prefabricated metal buildings, which are cheap, sturdy, can be put up in a hurry, and have big open spaces convenient for storing farm machinery. In some cases, cosmetic features are included to give them a more traditional "barn" appearance, if not all that convincingly. Farms also increasingly feature "tent"-type storage buildings using metal frames covered with tough synthetic fabric. In warm climates such as the US southwest, barns, traditional or modern, tend to be uncommon, with hay stored in an open hayrack and cattle taking shelter under what looks like a big carport.

* Livestock have to be fed year round, with their feed stored as hay -- dried grass -- or "silage", which is moist fodder stored without oxygen and allowed to ferment in a particular way. Centuries ago, hay was obtained by handwork, with the grass being cut with a sickle, piled up to dry in the fields, and then hauled up to the hayloft for storage. Reaping machines and then the mechanical baler did much to automate this previously labor-intensive practice. Balers are generally towed by a tractor; traditionally they gathered up hay and wired it into rectangular bales -- small ones about 60 x 60 x 120 centimeters (2 x 2 x 4 feet) in size, and "jumbo" bales with doubled dimensions (and weight multiplied by 8). In the 1970s, balers were introduced that baled up the hay into giant rolls, sometimes wrapping plastic around them to keep them from getting wet in the rain.
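The factor of 8 follows from geometry: doubling each of a bale's three dimensions multiplies its volume by 2 x 2 x 2, and, assuming the hay is packed to the same density, its weight scales the same way. A quick check using the small-bale dimensions given above:

```python
def box_volume_m3(length_cm: float, width_cm: float, height_cm: float) -> float:
    """Volume of a rectangular bale in cubic meters, from dimensions in centimeters."""
    return (length_cm / 100) * (width_cm / 100) * (height_cm / 100)


small = box_volume_m3(60, 60, 120)    # small rectangular bale
jumbo = box_volume_m3(120, 120, 240)  # every dimension doubled

# Assumes equal packing density, so the weight ratio equals the volume ratio.
print(f"small: {small:.3f} m^3, jumbo: {jumbo:.3f} m^3, ratio: {jumbo / small:.1f}")
```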

Hay tends to have a pleasant odor, but silage is more reminiscent of a rotten pile of damp grass. To create silage, grass is packed into an airtight silo. It used to be hauled up to the top of the silo with a rope and pulley, but these days a machine chops up the grass and blows it up through a long duct. Once the grass is packed in, bacteria of the genus Lactobacillus give it a bit of predigestion, producing lactic acid; the acidity suppresses less welcome bacteria, the sort that make the rotten pile of grass mentioned above particularly malodorous and unfit for animal consumption. The silage is doled out as needed by an unloading machine hung from the ceiling of the silo. Since that unloader takes the newest silage off the top first, the oldest and least appetizing silage comes out last, so a more modern scheme uses a chain-type unloader at the bottom of the silo.
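The difference between the two unloader schemes is the difference between a stack and a queue. Silage goes in at the top, so a top unloader takes out the newest layer first (last in, first out), while a bottom unloader takes out the oldest layer first (first in, first out). A toy model, with batches labeled by loading order purely for illustration:

```python
from collections import deque


def unload_order(batches: list[str], from_bottom: bool) -> list[str]:
    """Return the order in which loaded batches come out of the silo.

    batches is listed in loading order: batches[0] went in first and sits at the bottom.
    """
    silo = deque(batches)  # left end = bottom (oldest), right end = top (newest)
    out = []
    while silo:
        # A bottom unloader takes the oldest layer; a top unloader takes the newest.
        out.append(silo.popleft() if from_bottom else silo.pop())
    return out


loaded = ["week 1", "week 2", "week 3"]
print(unload_order(loaded, from_bottom=False))  # ['week 3', 'week 2', 'week 1']
print(unload_order(loaded, from_bottom=True))   # ['week 1', 'week 2', 'week 3']
```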

Silage and silos are a fairly recent invention, about a century old. Early silos were made of wood and strapped with metal bands, but these have given way to concrete or metal silos. It is also possible to get by more cheaply with a "horizontal silo", just a trench or pit covered with plastic, and in fact it seems that's how silage technology began. [TO BE CONTINUED]

* TECHWAR IN LEBANON: On 12 July 2006, fighters of the Lebanese Hezbollah Shiite militia attacked Israeli Defense Force (IDF) soldiers on the Israeli border, killing eight Israelis and taking two prisoner. The attack was the last straw for the Israelis, who within a day began a heavy bombing campaign against Lebanon, which has continued into August and been accompanied by IDF ground assaults.

The broad outlines of the war are getting plenty of play on the nightly news, and discussing them here would be somewhat redundant. What has received less attention is the tactical side of the conflict, with both the IDF and Hezbollah employing advanced weaponry.

A BBC correspondent in Tyre, wearing a flak vest, commented on video that IDF drones are overhead at all times, though the little drones are hard to spot. The buzz of their "chainsaw" engines was audible on the soundtrack since the streets were otherwise quiet and almost empty of inhabitants, the Israelis having dropped leaflets warning the citizenry to get out. The drones keep an eye on everything that moves, and anything deemed suspicious gets an artillery barrage, or an F-15 or F-16 dropping a laser-guided bomb -- with the target "illuminated" by the drone.

Videos released by the IDF have displayed laser-guided bombs zipping into targets -- and in one case, a Hezbollah rocket being launched against Israel from under the cover of a banana grove. Most of Hezbollah's rockets are "Katyushas" -- "Sweet Little Katy", what the Russians called the family of barrage rockets they initially developed during World War II and produced enthusiastically during the Cold War. There are a number of different types of Katyushas, but they are all simple, cheap, unguided weapons that were originally designed to be fired from a truck with a multiple-launch rocket rack. However, during the war with the Americans, the Vietnamese came up with the notion of building a simple rail launcher, often of wood, then using it to fire a single Katyusha, and departing to evade a counterstrike -- a tactic that has become popular with insurgents and militias such as Hezbollah.

Katyushas are easily built by any country with a modest industrial base. The Katyushas used by Hezbollah are the rockets fired by the Soviet-designed "BM-21" launcher: almost featureless spikes with a diameter of 12.2 centimeters (4.8 inches), a length of 3.23 meters (10 feet 7 inches), a warhead with a weight of about 20 kilograms (44 pounds), and a range of over 20 kilometers (12.4 miles). Apparently Hezbollah also has an "extended range" variant with a range of 40 kilometers (25 miles).