* 21 entries including: power plant infrastructure, PC interfaces & networking, global warming surveyed, click fraud details, North Korean nuclear dud, power from trash, high-tech concrete, Second Life virtual environment, cellphone malware, hydraulic hybrid vehicles, US population reaches 300 million, LED lighting for developing countries, the Earth's shifty magnetic field, industrial recycling.

* INFRASTRUCTURE -- POWER PLANTS (2): A close inspection of a coal-fired powerplant reveals a system more technologically refined than might be expected. The core of the powerplant is the "firebox", into which the powdered coal is blown by a huge centrifugal fan, with an even bigger fan supplying the air for combustion. Pulverizing the coal and forcing in air ensure that combustion is as complete as possible, not merely in the interests of efficient use of fuel but also to minimize noxious emissions and leave as little ash as possible -- after all, the ash has to be hauled off and disposed of properly, and the less ash, the less troublesome its disposal.

A coal-fired powerplant burns continuously, except for maintenance downtime. This is not just because of power demand, but also because getting the firebox up to full burn -- an operation called "lighting off" -- is a laborious process. The firebox has to be heated up with kerosene to a high enough temperature to ensure that the pulverized coal burns properly. If the fire goes out, the firebox has to be purged to eliminate unburned fuel that could cause an explosion during lighting off. It can take half a day to get the fires burning properly again.

The hot gases from the roaring furnace follow a serpentine path before they finally go up the flue. They pass first through the boiler to produce steam; then through a "superheater" to make the steam even hotter and more energetic; then through a "reheater" that reheats steam that has already passed partway through the powerplant's electric-generating turbine; then through an "economizer" that preheats the water going into the boiler; and finally through a "preheater" that warms up the air being driven into the firebox. Each stage operates at a lower temperature than the one before, as heat is successively drained out of the gas flow.

* A modern coal-fired powerplant burns reasonably cleanly, but coal is by no means an inherently clean fuel. It contains minerals that won't burn, which end up as ash, as well as sulfur, which becomes sulfur dioxide on combustion and will combine with water in the air to form sulfuric acid and acid rain. As far as the ash goes, the heavy component, known as "bottom ash", falls to a pan at the bottom of the firebox, where it is removed periodically, while the light component, "fly ash", rises along with the hot gas stream.

The fly ash has to be removed, either by a "baghouse" or by an "electrostatic precipitator". A baghouse is conceptually simple, just a set of heavy cloth filters in the form of long tubes open at one end, with the "bags" allowing the gases to pass through while capturing the fly ash. Since the fly ash would eventually clog the bags, the airflow is occasionally reversed to force out the ash, which falls down into a hopper for removal. Some baghouses have a shaker system to help dislodge the ash.

An electrostatic precipitator consists of rows of vertical plates, with arrays of fine wires energized to high voltage arranged between the plates. The gas passes up through the gaps between the plates, with the ash particles acquiring an electric charge and sticking to the plates. A "rapper" system knocks the ash loose into a hopper for collection.

Not surprisingly, neither of these schemes works for sulfur dioxide, which is a gas rather than a particle. A "scrubber" system is used instead, installed "downwind" from the baghouse or electrostatic precipitator. The scrubbers are tall cylinders into which a slurry of lime -- calcium hydroxide, Ca(OH)2 -- is sprayed down from the top. The calcium hydroxide combines with the sulfur dioxide to form calcium sulfate (CaSO4), which in its hydrated form is gypsum; it is collected in a hopper and hauled off.
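The scrubber chemistry can be checked with a little stoichiometry. This sketch assumes the overall reaction Ca(OH)2 + SO2 + 1/2 O2 -> CaSO4 + H2O, one mole of lime per mole of sulfur dioxide, and ignores the excess reagent a real scrubber runs with and the water of hydration that turns calcium sulfate into gypsum; the atomic weights are standard rounded values.

```python
# Standard atomic weights in g/mol, rounded
ATOMIC_WEIGHT = {"Ca": 40.08, "S": 32.06, "O": 16.00, "H": 1.008}


def molar_mass(formula: dict[str, int]) -> float:
    """Molar mass of a formula written as {element: atom count}."""
    return sum(ATOMIC_WEIGHT[element] * count for element, count in formula.items())


SO2 = molar_mass({"S": 1, "O": 2})
CA_OH_2 = molar_mass({"Ca": 1, "O": 2, "H": 2})   # calcium hydroxide, Ca(OH)2
CASO4 = molar_mass({"Ca": 1, "S": 1, "O": 4})     # anhydrous calcium sulfate


def scrubber_mass_ratios() -> tuple[float, float]:
    """Kilograms of lime consumed and calcium sulfate formed per kilogram of SO2.

    Assumes Ca(OH)2 + SO2 + 1/2 O2 -> CaSO4 + H2O (a 1:1 mole ratio),
    with no excess reagent and no water of hydration in the product.
    """
    return CA_OH_2 / SO2, CASO4 / SO2


lime, sulfate = scrubber_mass_ratios()
print(f"{lime:.2f} kg Ca(OH)2 in, {sulfate:.2f} kg CaSO4 out per kg SO2")
```

So each tonne of sulfur dioxide captured calls for a bit over a tonne of lime and yields a bit over two tonnes of calcium sulfate, before the hydration water of gypsum is even counted.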

Not all coal-fired powerplants have scrubbers: plants burning low-sulfur coal, such as some Wyoming coal, can do without one. Incidentally, the stack of a coal-fired powerplant will sometimes emit a visible white plume. The plume isn't smoke -- it's water vapor from the scrubber, condensing as it cools. In cold weather, the plume may appear whether there's a scrubber or not. A number of powerplants also have a "selective catalytic reduction" system to help get rid of toxic nitrogen oxides (NOx), in which the NOx is catalytically reacted with ammonia (NH3) to form nitrogen and water. [TO BE CONTINUED]

* GIMMICKS & GADGETS: One of the latest tricks being examined for the nanotechnology toolkit is the construction of nanostructures using viruses. Angela Belcher and colleagues at the Massachusetts Institute of Technology (MIT) genetically engineered the M13 bacteriophage virus to gather cobalt oxide and gold from a solution onto its coat. The long, thread-like viruses then organize themselves into a uniform thin conductive film. Ultimately, the goal is to use the technology to "grow" a battery.

* One of the difficulties with the unmanned aerial vehicles (UAVs) -- drones -- that have become so popular lately is that flying them in civil airspace is problematic, and national aviation authorities have been trying to figure out how it can be done. Raytheon decided that a better ground control system might help, and so the company worked with computer game designers to create the "Universal Control System (UCS)", a ground-based cockpit. The UCS features three wraparound flat-panel displays to give the pilot a 270-degree field of view, as well as two command-status displays. The pilot has a keyboard, throttle, and joystick, and can switch operational modes easily. The system can be used sitting or standing, in case the pilot gets tired of staying in a chair all day.

* POPULAR SCIENCE had a "plug" article for an interesting "open source" handheld game console, the GamePark Holdings GP2X. It runs Linux and supports emulators to execute popular classic games obtained from an online archive, with tools to allow users to write their own games. (It has a 320x240 pixel color display, which will certainly bring back memories of the days of CGA PC games to old-timers.) It can also play MP3s or DivX video, and can be used as an e-book reader to display text or PDF documents obtained from the web. A "breakout box" is available to allow the unit to be hooked up to a mouse, keyboard, big display, and speakers for use as a nice little Linux computer.

* Text messaging has become popular; as reported by THE ECONOMIST, it's starting to put on a bigger and gaudier face in urban areas. "Digital graffiti" displays are now popping up on the sides of buildings, in cafes, and at sporting events and various celebrations, to display text messages sent to them from mobile phones or computers. The displays can be used to capture the mood of the action, get discussions going, or push a sales message.

The idea began in Europe. In 2001, for example, the Arts Council England promoted a poetry contest, with the winners being circulated on a tickertape-like display on a building in Huddersfield. Text messaging and digital graffiti took a bit more time to catch on in the USA, but now American startups like LocaModa, of Somerville, Massachusetts, are on the leading edge of the wave.

LocaModa has set up a number of "Wiffiti" displays -- the name meaning "wireless graffiti" and cashing in on the "wi-fi" fad -- in cafes in a number of cities. Clients can send text messages to the big displays, with the messages also echoed to a website so anybody can check in from anywhere in the world. Clientele of a cafe who are on the road can check into "wiffiti.com" to see what's happening back home.

Paul Notzold, an arts designer from Brooklyn, uses digital graffiti as public artwork. His system involves a projection display that he uses to cast text "balloons", like those in cartoons, on the sides of buildings after sundown. He then passes out leaflets telling people how to post to the system. He's put on such displays in Brooklyn, Europe, and even Beijing -- where the cops came over to talk to him. Notzold thought they were going to shut him down, but no, they just wanted the phone number so they could send messages, too.

Fun and arts are all very well, but what about making money? Companies are in fact very interested in digital graffiti as an advertising medium. Nike, Procter & Gamble, and Gillette have all run digital-graffiti promotions. The usual trick is to let people send messages and then send messages back with pointers to a website or information on the promotion. An evangelical group in Texas has even used the technology at religious events.

* And now for something different: US NEWS & WORLD REPORT had an interesting short article on the EggFusion company of Deerfield, Illinois, which performs commercial laser-etching of eggs. That might seem to be a limited market, but EggFusion marks tens of millions of eggs a year. The company makes its money by selling advertising space on the eggs, setting up deals with egg farms and grocery chains to split the ad revenue. Advertisers see the egg-marking scheme as a way to get attention through surprise from jaded consumers.

* And now for something really different: WIRED.com had a short photo-essay article on Hyungkoo Lee, a South Korean artist who molded precise skeletons of cartoon creatures out of plastic and mounted them museum-style. The photo gallery included Wile E. Coyote, the Roadrunner, Tom (cat of Tom and Jerry), Bugs Bunny, and various Disney characters. The skeletal structures were meticulous and demonstrated some real knowledge of biological structures.

I was reminded of a Polish artist about ten years back who created a mock Lego series for a concentration camp, complete with guard figures beating inmates, neatly put together Lego boxes with colorful illustrations of Lego crematories, and so on. The Danes were somewhat upset with him, all the more so because the Lego company had given him blocks to work with. As the saying goes, not all our artists are playing a joke on the public -- some are genuinely mad.

* CLICK FRAUD CLOSEUP: The phenomenon of "click fraud", or schemes to defraud internet advertisers, was discussed here earlier this year, but an article in BUSINESS WEEK ("Click Fraud: The Dark Side Of Online Advertising" by Brian Grow & Ben Elgin, 2 October 2006) took a more detailed look at the phenomenon.

Martin Fleischmann, boss of Mostchoice.com, an insurance-advisory site run out of Atlanta, Georgia, is a believer in online advertising, having paid $2 million USD to Yahoo and Google for banner ad services in 2005. To his puzzlement, Fleischmann began to get hits on the ads from places like Botswana, Mongolia, and Syria. Something was clearly wrong, because his service was US-only; why would someone in Mongolia want price quotes on insurance in the US? Fleischmann obtained software to trace the hits and found mysterious sites like "insurance060.com" that seemed to exist only for the purpose of repeatedly clicking on his ads. Fleischmann pays when people click on the ads, and he figures he's been had for $100,000 USD since 2003.

Anybody who runs a website knows how the banner ad system works. The website owner signs up with Google Adsense or some other banner ad service as an "affiliate" and then displays ads provided by the ad service, receiving a payment for each "click-through" on the ad. The website owner gets a payment from the ad service, but of course the money actually comes from the advertisers. In some cases, the ads go through a middleman operation, known as a "domain parking service", which lines up small websites to run ads.

The system can be exploited with what is called a "parked website", which consists of pages of ads and nothing else, where visitors simply come in and click away from ad to ad. The parked website owner gets money for the ad clicks, pays off a domain parking service that may very well be in on the scam, and passes on a cut to the "clickers", a scheme known as "pay-to-read (PTR)". David and Renee Struck, a couple in Minnesota, set up a parked website in 2005, picking up $5,000 USD in four months, until the shadiness of what they were doing finally sank in and led them to pack it up.
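To make the flow of money concrete, here is a toy model of pay-per-click payments passing down a PTR chain. Every percentage in it is a made-up assumption for illustration, not a figure from the BUSINESS WEEK article; the only point is that each party's cut comes out of the advertiser's payment.

```python
def split_click_revenue(
    cost_per_click: float,
    clicks: int,
    network_share: float = 0.32,   # hypothetical cut kept by the ad network
    parking_share: float = 0.20,   # hypothetical cut kept by the parking service
    clicker_share: float = 0.30,   # hypothetical cut passed on to PTR clickers
) -> dict[str, float]:
    """Toy split of an advertiser's pay-per-click spend along a PTR chain."""
    advertiser_pays = cost_per_click * clicks
    ad_network = advertiser_pays * network_share
    to_parking = advertiser_pays - ad_network      # paid out to the parking service
    parking_service = to_parking * parking_share
    to_site = to_parking - parking_service         # paid to the parked-site owner
    clickers = to_site * clicker_share
    site_owner = to_site - clickers
    return {
        "advertiser_pays": advertiser_pays,
        "ad_network": ad_network,
        "parking_service": parking_service,
        "site_owner": site_owner,
        "clickers": clickers,
    }


for party, amount in split_click_revenue(0.50, 1000).items():
    print(f"{party:16s} ${amount:8.2f}")
```

Whatever the real percentages, every dollar any party takes out of the chain was paid by an advertiser for clicks that were never genuine.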

The clickers lower down on the pyramid don't make much money, but it's not exactly hard work to click on banner ads. It is even possible to bypass the clickers, using "clickbot" software to perform the clicks automatically. The clickbots can be run on vast "botnets" of computers that have been enslaved by viruses, with those controlling the botnets renting them out. The behavior of clickbots is more predictable than that of human clickers, however, and so clickbots are relatively easy to spot.
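One way to exploit that predictability is to look at how regular the gaps between clicks are. The sketch below is an illustration under stated assumptions, not any ad service's actual method (they don't disclose theirs): it computes the coefficient of variation (standard deviation divided by mean) of the intervals between clicks from one source and flags sources whose timing is suspiciously uniform. The 0.1 threshold is a hypothetical value.

```python
from statistics import mean, pstdev


def interval_cv(timestamps: list[float]) -> float:
    """Coefficient of variation (std dev / mean) of the gaps between clicks."""
    ts = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    if len(gaps) < 2:
        raise ValueError("need at least three clicks to judge regularity")
    avg = mean(gaps)
    # All clicks at the same instant counts as perfectly mechanical
    return pstdev(gaps) / avg if avg > 0 else 0.0


def looks_automated(timestamps: list[float], cv_threshold: float = 0.1) -> bool:
    """Flag a click source whose timing is suspiciously uniform (threshold is hypothetical)."""
    return interval_cv(timestamps) < cv_threshold


bot = [0, 30, 60, 90, 120]        # a click every 30 seconds, like clockwork
human = [0, 12, 95, 110, 300]     # irregular, as people tend to be
print(looks_automated(bot), looks_automated(human))  # True False
```

A clickbot that adds random jitter to its timing would slip past this single test, which is why a real screening system would have to combine many such signals.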

Google and Yahoo say they do try to screen out click fraud and reimburse advertisers who get bit by it. A Yahoo official calls the problem "serious but manageable". David Struck is not impressed, saying that from his experience, the defensive actions of the online advertising brokers are "not having much of an effect." Google, Yahoo, and others in the online advertising business will not discuss the techniques they use to catch click fraud, saying it would help scammers penetrate their security systems.

The click fraud scammers are not necessarily the grungy hackers one might expect. The BUSINESS WEEK reporters ran down an operator, a 36-year-old disabled Kentuckian named Michele Ballard who lives with her mother and pet cat. She runs a network of five parked websites that she calls "Owl-Post", after the magic postal service in the Harry Potter books, and feels her operation is a service to a lot of people on the bottom end of the economic pyramid. She doesn't believe that what she's doing is wrong.

To be sure, Owl-Post is clearly a pocket-money operation, but it's not the only click fraud operation out there, and some of them, sourced out of Eastern Europe or China, are bringing in big money. When the BUSINESS WEEK reporters contacted some suspected click-fraud operations, they then began to receive floods of spam asking them to sign up with PTR rings. Those who responded to the reporters often insisted, with logic a bit difficult to follow, that their PTR operations were "legitimate".

Small-time or big-time, the click fraud scammers are skimming from an enormous market. Online advertising spending in the US ran to $12.5 billion USD in 2005 and is expected to reach $29 billion USD by 2010, with about half the amount going to pay-per-click advertising. Estimates of the level of click fraud run from 10% to 15%, which means that the take is hundreds of millions of dollars right now and will be billions soon.
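The arithmetic behind that conclusion is simple. Assuming the 10% to 15% fraud estimate applies to the pay-per-click half of the market (the figures leave this open), it works out to roughly $0.6 to $0.9 billion for 2005 and about $1.5 to $2.2 billion for 2010.

```python
def click_fraud_take(total_spend: float, ppc_fraction: float = 0.5,
                     fraud_low: float = 0.10, fraud_high: float = 0.15) -> tuple[float, float]:
    """Low and high estimates of money lost to click fraud.

    Assumes the fraud rate applies only to the pay-per-click share of spending.
    """
    ppc_spend = total_spend * ppc_fraction
    return ppc_spend * fraud_low, ppc_spend * fraud_high


for year, spend in ((2005, 12.5e9), (2010, 29e9)):
    low, high = click_fraud_take(spend)
    print(f"{year}: ${low / 1e9:.2f} to ${high / 1e9:.2f} billion")
```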

Michele Ballard has a bit of justification or at least rationale in feeling okay about what she's doing, because the law hasn't paid much attention to click fraud yet. The FBI and US Postal Service recently set up a small group to address click fraud as part of a joint cybercrime operation, and a few tentative actions have been taken so far. People like Martin Fleischmann are not okay with it, and in fact major online advertisers are forming groups to share information on click fraud, for use as a lever against the likes of Google and Yahoo, who have already been hit by class-action suits over click fraud. While the ad service providers try to downplay the issue, customers are getting hotter, and some believe that if more isn't done to shut down click fraud, it will all but wreck online advertising.

* Incidentally, according to a sidebar to this article, click fraud is not a completely new concept. Newspapers have sometimes exaggerated their circulation to overcharge advertisers -- a practice so long-standing that advertisers and publishers in the US set up the Audit Bureau of Circulations in 1914 to stop it. It still pops up every now and then, however. TV viewership ratings have also been manipulated, with TV stations often running special events and promotions to pump up the viewer base during the quarterly "sweeps" that determine the number of viewers. Advertisers are unsurprisingly not happy about such practices.

[ED: I have to add a personal note of amusement at the notion that some of these click-fraud operators don't think they are doing anything wrong. To be sure, some of that is equivalent to a gangster in a pinstriped suit with wide lapels claiming that he is "just a legitimate businessman", but I am sure that in other cases it is sincere. Spammers, at least in the early days, would often defend their work as legitimate, and I recall an article on virus-writers who thought there was no reason for concern with their activities. One did change his mind after somebody else's virus trashed his own PC.

I also recall a tale of a young couple in court over eBay fraud, who made no effort to conceal from the court that their ripoffs didn't bother them in the slightest. The infuriated judge threw the book at them, giving them an angry chewing-out along with the harshest sentences allowed. As the saying goes, a man on a horse may not give much thought to the fairness of the arrangement, but the horse is going to think about it a great deal. There seems to be something about online transactions that enhances such a failure of consideration -- possibly because it looks just like another sort of game being played on a computer, and there's no face to attach it to.]

* ATOMIC DUD: North Korean dictator Kim Jong-il enjoys rattling the cages of his neighbors and the US, but given the poverty of his "hermit kingdom" it's no great surprise that there may be less to his theatrics than meets the eye. On 9 October 2006, North Korea set off a nuclear weapon, raising considerable international alarm; however, as reported by SCIENTIFIC AMERICAN Online ("Kim's Big Fizzle -- The Physics Behind A Nuclear Dud" by Graham P. Collins), the test was clearly a failure.

The very first nuclear test, the TRINITY shot performed in New Mexico on 16 July 1945, had an explosive yield the equivalent of about 20 kilotons of TNT. The North Korean test, in contrast, was only about half a kiloton. That was so feeble that there were those who suspected the Koreans had simply set off a big stockpile of conventional explosives as a con, but air samples picked up a few days after the test included radioactive traces that had leaked into the air after the underground detonation.

Designing and building a nuclear weapon is not trivial. The air samples hinted that the North Korean device was a plutonium weapon, like the US TRINITY and Nagasaki bombs, not a uranium weapon like the Hiroshima bomb. A plutonium device operates by "implosion", using an arrangement of explosive "lenses" to squeeze a subcritical sphere of plutonium into a supercritical mass, with a neutron-emitting "initiator" at the center to start the chain reaction. Getting the implosion just right is tricky -- like "crushing a beer can and keeping all the beer inside" -- and any asymmetry in the process will result in a low explosive yield.

A plutonium bomb relies on the plutonium 239 isotope. Plutonium is produced by irradiating uranium 238 in a nuclear reactor, with most of the product being plutonium 239 but some being plutonium 240. It's hard to separate the two, since their atomic weights are so close and they are chemically identical. Plutonium 240 undergoes spontaneous fission at a high rate, releasing stray neutrons, so too high a concentration of it can cause "predetonation", in which the chain reaction starts before the plutonium is fully compressed -- also resulting in a low explosive yield.

What exactly happened only the North Koreans know, and nothing they say is trustworthy: whether they tell the truth or tell a lie, it's certain to deceive. In any case, it is likely that the country's nuclear scientists are being given strong encouragement to make sure that a second test goes better. What is certain is that the test was performed as a political demonstration, another act of rattling the cages of North Korea's opponents -- and as far as that went, it worked precisely as planned.

* PC INTERFACES & NETWORKING (1): In my corporate life, I spent a lot of time fussing around with PC plug-in cards. While poking through some materials on the new "wireless USB" interface specification, I ran across a mention of the "ExpressCard" specification. Huh? News to me. After poking around on Wikipedia, I realized that I had been falling behind in my PC interface technologies, and needed to figure out what was going on.

Most of my corporate career was based on support of various plug-in interface cards using the old "Industry Standard Architecture (ISA)" bus. There were ISA cards with 8-bit ("XT" 4.77 MHz bus for 8086/8088 machines) and 16-bit ("AT" 8 MHz bus for 80286 machines) buses, and they were generally a nightmare to deal with because they put the entire responsibility for configuration on the user, who had to set jumpers or switches to place each card in I/O or (in some painful cases) memory space in such a way as to avoid conflicts with other cards. The operating system (OS) was usually little help in this regard, and the drivers for cards had a nasty tendency to conflict with other drivers.
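The bookkeeping that ISA left to the user amounts to checking that no two cards claim overlapping I/O port windows. A minimal sketch of that check follows; the card set is hypothetical, though 0x3F8 and 0x220 are the customary addresses for COM1 and a Sound Blaster and 0x300 was a common network-card setting, while the data-acquisition board is invented for the example.

```python
from itertools import combinations


def io_conflicts(cards: dict[str, tuple[int, int]]) -> list[tuple[str, str]]:
    """Return pairs of cards whose I/O port windows overlap.

    Each card is given as name -> (base port, number of ports), the window
    being the half-open range [base, base + length).
    """
    clashes = []
    for (name_a, (base_a, len_a)), (name_b, (base_b, len_b)) in combinations(cards.items(), 2):
        # Two windows overlap if each one starts before the other one ends
        if base_a < base_b + len_b and base_b < base_a + len_a:
            clashes.append((name_a, name_b))
    return clashes


cards = {
    "serial_com1": (0x3F8, 8),
    "network_card": (0x300, 0x20),
    "sound_card": (0x220, 0x10),
    "data_acq_card": (0x310, 0x10),  # hypothetical board jumpered into the NIC's window
}
print(io_conflicts(cards))  # [('network_card', 'data_acq_card')]
```

Under ISA the user had to do this check in his head, from card manuals, before even powering the machine up.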

There was an attempt to develop a "Plug and Play (PnP)" scheme for ISA cards, but it was hopeless. A number of attempts were made to develop improved card schemes, including the IBM "Micro Channel Architecture (MCA)", the "Extended ISA (EISA)", and the "VESA Local Bus (VLB)". These schemes were put to some use in niches; for a time MCA seemed as though it would become a new standard, since in principle it fixed most of the problems with the ISA bus, but IBM tried to protect it as a proprietary specification and ended up dooming it to irrelevance over the long run.

The Intel "Peripheral Component Interconnect (PCI)" plug-in cards came into use in the mid-1990s and effectively killed off the rivals. PCI offered PnP capability, in that the PC's firmware or OS could interrogate each of the PCI card slots, determine what was there, and assign each card its address ranges so that there were no conflicts. This was obviously a better deal than the "plug and pray" operation of an ISA card -- that is, plug it in and pray that it works. PCI cards didn't work that much better at first, however, since driver conflicts could make them almost as troublesome to install as ISA cards, but in time that issue was generally resolved.
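PCI's automatic configuration rests on a simple trick: each card exposes "base address registers" (BARs) in its configuration space, and software writes all ones to a BAR and reads it back to learn how big an address window the card needs. The sketch below models only that arithmetic for 32-bit memory BARs, plus a naive allocator that packs the windows, largest first, on naturally aligned boundaries. Real firmware and operating systems also deal with I/O BARs, 64-bit BARs, bridges, and many quirks ignored here, and the readback values and base address are hypothetical.

```python
def bar_size(readback: int) -> int:
    """Window size of a 32-bit memory BAR, from the value read back
    after software writes 0xFFFFFFFF to it (0 means the BAR is unused)."""
    if readback == 0:
        return 0
    address_bits = readback & 0xFFFFFFF0  # bits 0-3 of a memory BAR are flag bits
    return ((~address_bits) & 0xFFFFFFFF) + 1


def allocate(sizes: dict[str, int], base: int) -> dict[str, int]:
    """Assign non-overlapping, naturally aligned addresses, biggest window first.

    BAR sizes are always powers of two, which the alignment step relies on.
    """
    placement = {}
    addr = base
    for name, size in sorted(sizes.items(), key=lambda item: item[1], reverse=True):
        if size == 0:
            continue
        addr = (addr + size - 1) & ~(size - 1)  # round up to a multiple of size
        placement[name] = addr
        addr += size
    return placement


# Hypothetical readbacks: 16 MB prefetchable video window, 4 KB NIC, 16 KB sound
readbacks = {"video": 0xFF000008, "nic": 0xFFFFF000, "sound": 0xFFFFC000}
sizes = {name: bar_size(value) for name, value in readbacks.items()}
for name, addr in allocate(sizes, 0xE0000000).items():
    print(f"{name:6s} {addr:#010x} size {sizes[name]:#x}")
```

Because the card reports its own needs and software picks the addresses, the user never touches a jumper, which is the essential difference from ISA.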

PCI cards still used card-edge connectors like ISA cards, but with finer contacts and with keyed slots that prevent a card from being plugged into a slot with the wrong signaling voltage. ISA edge connectors had a tendency to make unreliable connections when they got dirty -- rubbing an eraser across the contacts could help -- and could damage the slot contacts if the card wasn't built to proper specification or if the user muscled it in. The PCI connector wasn't perfect, but it was a definite improvement.

Standard PCI transferred 32-bit words at 33 MHz, for a peak of about 133 megabytes per second, though there were extensions to 64-bit words at 66 MHz. In the late 1990s PCI led to a faster "PCI-X" specification that could handle 64-bit words at 133 MHz. PCI-X was a superset of PCI, and a PCI card could be plugged into a PCI-X slot and work. A "PCI-X 2.0", a "super-superset", was introduced in 2003 that provided 266 and 533 megatransfers per second by moving data two or four times per clock cycle.
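The peak figures follow from bus width times transfer rate. This sketch uses the nominal rates quoted above; the actual PCI clock is 33.33 MHz, which is why the usual figure for plain PCI is 133 rather than 132 megabytes per second. Since PCI and PCI-X are shared buses, the peak is divided among all the cards on the bus.

```python
def peak_mb_per_s(width_bits: int, megatransfers_per_s: float) -> float:
    """Peak bus throughput: bytes per transfer times transfers per second."""
    return width_bits / 8 * megatransfers_per_s


BUSES = [
    ("PCI", 32, 33),
    ("PCI, 64-bit/66 MHz", 64, 66),
    ("PCI-X", 64, 133),
    ("PCI-X 2.0 (266)", 64, 266),
    ("PCI-X 2.0 (533)", 64, 533),
]

for name, width, rate in BUSES:
    print(f"{name:20s} {peak_mb_per_s(width, rate):6.0f} MB/s")
```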

However, it appears that the momentum has now shifted to an entirely different specification, the Intel-designed "PCI Express" or "PCI-E", which despite the name has almost nothing to do with the mechanical and electrical specification of the original PCI scheme, though it does share the PCI software interface scheme -- meaning that a PCI-E card could be exchanged for a PCI card without having to rewrite all the application software.

PCI-E is intriguing because it abandons the parallel bus scheme of PCI, instead using point-to-point serial connections or "lanes" as the basis for communications, with the organization of the scheme similar to that of a twisted-pair local area network (LAN) built around a central switch -- though PCI-E is strictly an internal communications scheme. The serial scheme was adopted because at high clock rates, it's hard to make sure all the bits on a parallel bus arrive at the same time, a problem known as "timing skew".

Each lane actually consists of two wires in and two wires out, since each pair of wires carries data in only one direction. Each pair uses "differential signaling" -- that is, instead of a signal pulsing from ground to a high voltage level and back down again, the signal pulses by changing polarity, from "plus-minus" to "minus-plus". Differential signaling is more immune to noise and can support higher transfer rates. The transfer rate of a lane is 250 megabytes per second in each direction. All control data is sent over the lane, not on separate wires.
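The 250 megabytes per second figure can be derived from the first-generation PCI-E signaling rate of 2.5 gigatransfers per second in each direction. Each 8-bit byte is sent as a 10-bit symbol ("8b/10b" encoding, which keeps the line balanced and gives the receiver enough transitions to recover the clock), so only 80% of the raw bit rate carries data:

```python
def lane_mb_per_s(gigatransfers_per_s: float = 2.5,
                  data_bits: int = 8, line_bits: int = 10) -> float:
    """Usable bandwidth of one PCI-E lane in one direction, in megabytes per second."""
    raw_bits_per_s = gigatransfers_per_s * 1e9                 # one bit per transfer on a serial lane
    data_bits_per_s = raw_bits_per_s * data_bits / line_bits   # strip the 8b/10b overhead
    return data_bits_per_s / 8 / 1e6                           # bits -> bytes -> megabytes


print(lane_mb_per_s())  # 250.0
```

Doubling the signaling rate to 5 gigatransfers per second, with the same encoding, gives 500 megabytes per second per lane.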

A PCI-E card may support 1, 2, 4, 8, 12, 16, or 32 lanes, multiplying the total throughput accordingly, up to a maximum of 8 gigabytes per second in each direction. The assembly of lanes on a card is called a "link". Not surprisingly, backplane slots are sized according to the number of lanes provided, for example as "x1", "x4", and "x16". A small card, say an x1 card, will fit into a big x4 or x16 slot, but a big card, say an x16 card, won't fit into a small x1 or x4 slot. A PCI-E 2.0 spec is in the works, with doubled throughput. [TO BE CONTINUED]
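Link throughput and the slot rule can be summed up in a few lines. This sketch assumes first-generation lanes at 250 megabytes per second each way and encodes only the physical fit rule stated above; whether a given motherboard actually runs a card at full width in a larger slot is a separate matter.

```python
LANE_MB_PER_S = 250  # first-generation PCI-E, per lane, per direction
LINK_WIDTHS = (1, 2, 4, 8, 12, 16, 32)


def link_gb_per_s(lanes: int) -> float:
    """Peak one-way throughput of a link in gigabytes per second."""
    if lanes not in LINK_WIDTHS:
        raise ValueError(f"x{lanes} is not a PCI-E link width")
    return lanes * LANE_MB_PER_S / 1000


def card_fits(card_lanes: int, slot_lanes: int) -> bool:
    """A card fits any slot at least as wide as itself, never a narrower one."""
    return card_lanes <= slot_lanes


print(link_gb_per_s(16), link_gb_per_s(32))  # 4.0 8.0
print(card_fits(1, 16), card_fits(16, 4))    # True False
```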

* INFRASTRUCTURE -- POWER PLANTS (1): Chapter 5 of Brian Hayes' INFRASTRUCTURE discusses electric power plants. There are three main sources of electrical power in the US: fossil fuel plants burning coal, oil, or natural gas; nuclear power plants; and hydroelectric plants.

Fossil fuel plants provide the lion's share of electrical power, about two-thirds of the national total. Coal is the primary fuel. A big coal-burning plant will burn about 13,600 kilograms (30,000 pounds) of coal every minute and produce a gigawatt of electrical power. A coal-fired power plant is very distinctive, marked by a tall smokestack and cooling towers, with a trainload of coal often being unloaded into stockpiles, and an electrical switchyard sending electrical power off over the horizon on a string of power towers.
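How much coal a gigawatt takes depends on the coal's heating value and the plant's thermal efficiency, neither of which is given here. The sketch below uses assumed round numbers (a 30% to 33% efficient plant; about 24 MJ/kg for good bituminous coal and 15 MJ/kg for low-grade coal) to show how much the feed rate can vary.

```python
def coal_feed_kg_per_min(electric_watts: float, efficiency: float,
                         heating_value_j_per_kg: float) -> float:
    """Coal burned per minute to sustain a given electrical output."""
    thermal_watts = electric_watts / efficiency        # heat that must be released
    kg_per_s = thermal_watts / heating_value_j_per_kg  # fuel needed to release it
    return kg_per_s * 60


GIGAWATT = 1e9
print(round(coal_feed_kg_per_min(GIGAWATT, 0.33, 24e6)))  # bituminous coal, assumed values
print(round(coal_feed_kg_per_min(GIGAWATT, 0.30, 15e6)))  # low-grade coal, assumed values
```

The first case comes out near 7,600 kilograms per minute and the second near 13,300, so the feed rate can easily differ by a factor of two; the 13,600 kilogram figure above would be consistent with lower-grade coal or a less efficient plant.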

A "stacker" unloads the coal cars into a stockpile, which is then drawn down by an "unstacker" that sends the coal to the plant over a conveyor system. The stacker and unstacker feature long pivoting booms that make them look like dinosaurs grazing at the coal pile. The stockpiles have to be monitored since coal is combustible and "weathers", or oxidizes slowly, a process that can turn into an outright fire if it is allowed to run out of control. The sensor system is very simple: a worker shoves a steel rod into the stockpile and if it comes back out hot, something's wrong.

The conveyor from the stockpile dumps the coal into a bunker or silo for use. The coal is pulverized into a powder before being fed into the furnace to make sure it burns as efficiently as possible, with the pulverization process being much like that used in ore milling. The big lumps are broken down in a spinning drum or "crusher", and then run through another rotating drum containing steel balls or bars hinged to the interior of the drum, with the output as fine as beach sand.

While coal is the most common fossil fuel for electric-power generation, oil is used to a considerable extent in the US Northeast. The oil is not like the light fuel oil used in residential heaters, instead being the nasty, thick, tarry "bottom" material obtained from the base of the refinery's distillation column. Since the thick oil doesn't flow well and won't flow at all in cold weather, the fuel lines have to be heated. Natural gas is a much cleaner fuel than oil and easier to handle, but it's expensive and only used in locales where environmental regulations make coal or oil plants unacceptable. Power plants fired by natural gas need a high fuel feed rate and so are fed by a specialized high-pressure pipe system, not the low-pressure municipal system. [TO BE CONTINUED]

* POWER FROM TRASH? According to an AP article from September 2006 ("County To Vaporize Trash -- Poof!"), Saint Lucie County in Florida is now sinking $425 million USD into an unusual scheme for generating power -- by vaporizing trash in plasma arcs. After the trash power plant comes online in 2008, thousands of tons of trash will be converted every day to generate an expected average of 120 megawatts of electricity. Trash will no longer go to a landfill; in fact, the existing county landfill is to be mined and eventually cleaned out.

The hot gas from the vaporized trash will run power turbines, with about a third of the power output used to run the plant itself and the rest sold on the power grid. Steam produced by the process will be piped to the neighboring Tropicana Products plant to support orange juice production. The plasma system will also be able to vaporize sludge from a nearby wastewater treatment plant. The residue from the process will be a hard, inert slag that will be used in road construction and other projects.

At the present time, there are only two such plants operating in the world, both of them in Japan, and they are much smaller than the planned Saint Lucie County facility. The new facility will feature eight incineration vessels, each supporting a single plasma-arc system, with trash dumped into the vessels via a conveyor system. Geoplasma, the company behind the technology, claims the process is cleaner than burning coal or natural gas and produces very low levels of toxins.

Critics are suspicious, saying the data on the operation of trash vaporization plants is too sketchy to make such glowing claims credible, and also say that attempts to run similar facilities in Germany and Australia failed because their emissions were too high. Geoplasma officials point to the two Japanese plants in response, saying that they meet Japan's very strict environmental standards.

* According to a related article in THE ECONOMIST ("A Rubbish Business Model", 23 September 2006), a startup named Startech Environmental out of Wilton, Connecticut, is claiming that they can use plasma vaporization of trash to generate "synthesis gas" -- mostly carbon monoxide and hydrogen -- which can then be converted into ethanol or diesel using a catalytic process known as "Fischer-Tropsch synthesis". According to Startech officials, a single scrap tire can produce several gallons of ethanol.
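For the diesel side, the overall Fischer-Tropsch reaction builds straight-chain alkanes from the two syngas components, n CO + (2n+1) H2 -> CnH(2n+2) + n H2O, so the hydrogen-to-carbon-monoxide ratio the syngas must supply depends on chain length. This sketch computes that ratio; it ignores side products and catalyst behavior, and does not cover the route to ethanol.

```python
from fractions import Fraction


def ft_h2_co_ratio(carbon_atoms: int) -> Fraction:
    """Moles of H2 per mole of CO to make the alkane C(n)H(2n+2) by Fischer-Tropsch."""
    if carbon_atoms < 1:
        raise ValueError("need at least one carbon atom")
    n = carbon_atoms
    return Fraction(2 * n + 1, n)


for n in (1, 8, 16):   # methane, octane, cetane (a diesel-range alkane)
    print(n, ft_h2_co_ratio(n), float(ft_h2_co_ratio(n)))
```

For long chains the ratio approaches 2, which is why syngas intended for Fischer-Tropsch is generally adjusted toward roughly two parts hydrogen to one part carbon monoxide.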

An official at the US Natural Resources Defense Council (NRDC) mocked the idea, saying that there's no cost-effective way to sort the trash stream to get rid of materials that cannot or should not be vaporized, and also claiming that the concerns over landfills are a red herring: there's plenty of landfill space in the USA for a long time to come. Startech officials reply that the scheme is workable and profitable, though all the company has in operation right now is a small pilot plant. Several other companies are promoting similar schemes, but they're not even as far down the road as Startech, with their plans existing entirely on paper for the moment.

A company named Changing World Technologies (CWT) is now running a full-scale plant to process the wastes from turkey and pig meat-processing plants. The wastes are subjected to "thermal conversion", using heat and agitation to break them down, mostly to produce liquid and solid fertilizer. The process also produces gas that can be used to power an industrial plant, and the gas could be used as a feedstock to produce biodiesel. CWT officials do say that their effort hasn't been a magic carpet ride by any means, the company having been forced to deal with the foul smells produced by the plant, and admit that at present they can't produce biodiesel at less than $80 USD a barrel.

CWT officials remain optimistic, but the issues they have had to deal with suggest that the dreams of a new world order of alternative fuels should be taken with a grain of salt. Anybody who recalls some of the froth associated with the similar rush of alternative-energy enthusiasm in the 1970s hardly needs the reminder.

* SUPER CONCRETE: Concrete was in widespread use in Roman times and is a well-established technology, but as reported by an article in THE ECONOMIST ("Concrete Possibilities", 23 September 2006), there's still plenty of room for improvements.

Concrete consists of cement (made by heating clay and limestone in a kiln), sand, and an "aggregate" in the form of gravel of various grades. Mix it all with water, pour it into a form, and it hardens into a rocklike floor or wall; strictly speaking, the concrete doesn't dry out but cures, as the cement reacts chemically with the water. Materials scientists also know how to tweak the mix to obtain a range of properties; for example, encouraging the distribution of tiny air bubbles in the concrete makes it more durable, since cracks won't propagate as far.

More intriguingly, in the late 1990s materials scientists figured out the virtues of adding steel or carbon fibers to the concrete. The proportion of fibers is very small, only about 1%, but they make the concrete electrically conductive. Passing a current through the concrete will cause it to heat up, melting snowfall on the roadway. A traffic bridge near Lincoln, Nebraska, has been paved with conductive concrete, and researchers have been monitoring how well it works in real-world conditions.
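The de-icing trick is ordinary resistive (Joule) heating. A minimal sketch of the arithmetic follows, assuming a slab of uniform resistivity with current flowing along its length; the resistivity, slab dimensions, and voltage are made-up illustrative values, since the article gives no figures.

```python
# Illustrative only: the resistivity, dimensions, and voltage below are
# hypothetical values, not figures from the Nebraska bridge project.

def slab_resistance_ohm(resistivity_ohm_m: float, length_m: float,
                        width_m: float, thickness_m: float) -> float:
    """R = rho * L / A for current flowing along the slab's length."""
    cross_section_m2 = width_m * thickness_m
    return resistivity_ohm_m * length_m / cross_section_m2


def heating_power_w(voltage_v: float, resistance_ohm: float) -> float:
    """Joule heating: P = V**2 / R."""
    return voltage_v ** 2 / resistance_ohm


if __name__ == "__main__":
    # Hypothetical 1 m x 1 m x 0.1 m slab with an assumed resistivity of 10 ohm-m.
    r = slab_resistance_ohm(10.0, 1.0, 1.0, 0.1)
    p = heating_power_w(120.0, r)
    print(f"resistance {r:.0f} ohm, heating power {p:.0f} W")
    # prints: resistance 100 ohm, heating power 144 W
```

Because power scales with the square of the voltage, doubling the supply voltage quadruples the heat delivered to the slab.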

The conductive concrete costs about four and a half times more than ordinary concrete, but that doesn't factor in life-cycle costs, such as the use of salt to de-ice roads and the damage caused to roads and cars by salt. Conductive concrete might not be practical for long stretches of road, but it could be very well suited for major bridges and airport runways.

Another property of conductive concrete is that when a vehicle passes over it, the load compresses the concrete and squeezes the fibers closer together, increasing its conductivity; measuring that change allows the concrete to monitor and weigh traffic. Conductive concrete could also be used in the floors of buildings to sense burglars or provide heating, as well as to detect damage from earthquakes or a creeping structural failure. Conductive concrete is also opaque to radio waves, and so can block out electronic snoops.
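The traffic-weighing idea amounts to reading the slab as a strain sensor. The sketch below assumes, purely for illustration, a linear calibration in which resistance drops by a fixed fraction per kilonewton of load; real conductive concrete would have to be calibrated empirically, and the function names and constants are hypothetical.

```python
# Hypothetical linear calibration: R = R0 * (1 - k * F), with load F in kN.

def estimate_load_kn(resistance_ohm: float, baseline_ohm: float,
                     sensitivity_per_kn: float) -> float:
    """Invert the assumed calibration to estimate the load on the slab."""
    fractional_drop = (baseline_ohm - resistance_ohm) / baseline_ohm
    return max(fractional_drop, 0.0) / sensitivity_per_kn


def count_axles(readings_ohm: list[float], baseline_ohm: float,
                threshold_frac: float) -> int:
    """Count each dip below baseline * (1 - threshold) as one passing axle."""
    limit = baseline_ohm * (1.0 - threshold_frac)
    axles, was_below = 0, False
    for r in readings_ohm:
        is_below = r < limit
        if is_below and not was_below:  # a new dip has started
            axles += 1
        was_below = is_below
    return axles


if __name__ == "__main__":
    trace = [100.0, 100.0, 97.0, 96.0, 100.0, 100.0, 96.0, 100.0]
    print(count_axles(trace, baseline_ohm=100.0, threshold_frac=0.02))  # 2
    print(estimate_load_kn(96.0, 100.0, sensitivity_per_kn=0.001))
```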

For now, building applications remain a prospect for the future, though companies are working on wireless sensor systems to interface with conductive concrete building structures. The US Army is interested in conductive concrete for use in military structures and bunkers, as well as for roads at border crossings. The Canadian Institute for Research in Construction (IRC), an arm of the National Research Council Canada, is now working toward funding a few demonstration projects.

A startup named Grancrete, based out of Mechanicsville, Virginia, has developed its own take on concrete, made from sand and a proprietary binding agent, which to no surprise it calls "grancrete". It started life as a material to encapsulate radioactive waste, but the inventors realized that it could be sprayed on a form to build a cheap house. It will easily bind to most surfaces, and the form can be made of wood, metal, polystyrene, or even woven matting. It takes 20 minutes to harden after being applied, resulting in a material as strong as ordinary concrete, as well as fireproof, waterproof, non-toxic, and durable. Grancrete officials say a team of two can put up a simple house in two days with the material. The company has performed demonstrations in Latin America and now has a full-scale production plant in operation. It should be remembered, however, that back in 1906, the endlessly energetic Thomas Alva Edison came up with a broadly similar scheme for concrete housing for the poor, but the effort only produced eleven demonstration homes: nobody wanted them.

Other ideas for advanced concrete are more speculative. Bill Price, a professor of architecture at the University of Houston in Texas, came up with the idea of a concrete mix incorporating glass or plastic, resulting in translucent concrete. Slabs made of the material have been exhibited at museums in the US and Europe, but so far it hasn't been used in a practical construction project. Price is working with contractors to give the idea a shot in a few Houston construction projects.

There's also an environmental angle for new thinking about concrete. The magnitude of concrete use can be appreciated by the fact that about a cubic meter of the material is produced every year for every human on Earth. In itself, concrete is generally environmentally benign, but the kilns that fabricate the cement for concrete produce large amounts of carbon dioxide. It's hard to believe, but the contribution of cement kilns to carbon dioxide production is estimated at 5% to 10% of all global carbon emissions. One idea to get around this problem is to use waste materials from various industrial processes as a supplement or partial replacement for cement. Fly ash trapped in the emissions systems of coal-fired power plants, slag from steelmaking plants, and condensed silica from semiconductor plants have all been considered as alternatives. The Canadian IRC is interested in the idea, though IRC officials warn that cost and quality issues have to be addressed.

Somewhat along the same lines, concrete can be used to help dispose of waste materials. It has been used for some time to encapsulate the toxic ash from municipal incinerators or the sludge dredged up from harbors. A few years ago Christian Meyer, a professor of engineering at Columbia University, figured out a process to allow waste glass to be incorporated into concrete. This requires a special mix, since glass mixed with normal concrete would react with the cement, causing the concrete to swell and crack. Meyer's glass-concrete composite, in contrast, is superior to ordinary concrete in many ways: it absorbs less water, is more durable, better resists chemical attack, and is attractive to look at. Wausau Tile of Wausau, Wisconsin, has licensed the process to manufacture decorative tiles and planters. Sales of these products have been gradually increasing over the past few years, and Meyer believes there's much more room for growth: "We're still learning how to use recycling."

* WELCOME TO YOUR SECOND LIFE: There has been some fuss in the press over the "Second Life" virtual environment recently. An article in THE ECONOMIST ("Living A Second Life", 30 September 2006) took an interesting closeup look at the phenomenon.

There are other virtual environments on the Internet, but most are what are known as "massively multiplayer online role-playing games (MMORPGs)" or "morpegs". At the present time the biggest morpeg is "World of Warcraft", a swords-and-sorcery environment with over 7 million participants. Second Life is different: it is a general environment with no specific game, where participants can make up their own games or do anything else they like. A professor of psychiatry at the University of California, Davis, has set up a simulation on Second Life of what it is like to be a schizophrenic; Mark Warner, once governor of the state of Virginia and now seeking other offices, recently conducted a virtual town hall meeting on Second Life, speaking with 62 other participants, with the session moderated by a participant who works as a reporter in the virtual world. Currently, Second Life has about 750,000 participants.

Second Life is the product of Linden Labs of San Francisco, which launched it in 2003 to make the dreams of the company's founder, Philip Rosedale, a (virtual) reality. The environment is not accessed through a web browser; instead, a user installs a dedicated Second Life client program on a PC, which minimizes the need for network bandwidth by performing much of the digital "grunt work" locally.

Not only does Second Life allow its participants to play any game they choose, it also allows them to create their own props: cars, aircraft, robots, weapons, artworks, and so on. Furthermore, participants have "intellectual property" rights to their "user-generated content" and can sell or trade it for virtual currency -- which can actually be converted to real money, with a handful of Second Life participants making tidy real fortunes off their virtual transactions. In fact, virtual transactions are how Second Life supports itself, mostly through the sale and lease of virtual property plots. Linden Labs is estimated to make about a million real dollars a month at present using this approach.

The usual reaction to the notion of a real business supported by virtual transactions is that it's all a financial bubble, or a pyramid scheme where the money changing hands has nothing to do with the actual value of what is being bought or sold -- the idea in making a purchase being that some "greater fool" will buy it at a markup. Not so fast, say Second Life's defenders: most money is completely virtual, existing only as numbers in bank accounts, and even the relatively small portion that exists as cash takes the form of paper or metal tokens that have little intrinsic value in themselves. Intellectual property gets traded all the time in the real world, sometimes for big money, even when it hasn't yet been turned into real products.

Mitch Kapor, known to computing old-timers as the lad behind the classic Lotus 1-2-3 spreadsheet and now chairman of Linden Labs, is effusive about the potential of the concept, calling it "disruptive", saying that it will eventually become "profoundly normal", and displace normal "desktop computing". He even claims that it will "accelerate the social development of humanity."

Second Life is a hot business in Silicon Valley these days, but it has been accused of being over-hyped. There are only about 9,000 participants online at any one time, generally represented by oversexed digital cartoon avatars, and most of the stuff being traded is clearly junk, virtual or not. Second Life's defenders may try to soften the accusation that the business model is based on a financial bubble, but it's hard to dismiss entirely. Bill Joy of Sun Microsystems likes Second Life but adds that he doesn't see the activities of the participants as having much correlation to a "skill set to succeed in the real world."

Still, almost everybody thinks it's worth a shot, and real-world organizations and businesses are getting involved. Book publishers are now conducting readings and promotions in Second Life, and the BBC is conducting events on an island that Auntie owns in the environment. Coca-Cola, Microsoft, Sun, and MTV also have stakes in Second Life. Toyota is even providing virtual Scion cars, at least initially for free, in Second Life. Giving away virtual product turns out to be a new and potentially very effective way to advertise: if somebody likes their virtual Scion, they may well go to a dealership to see how the real one compares. Since virtual products have a design cost but no real production cost, even small-time players can play the giveaway game to good effect in Second Life.

Those participants who have been with Second Life for a while fear that commercialization may overwhelm their virtual world, but Rosedale says that since it is a virtual world, it can be extended as much as anyone pleases. If one part of the world becomes too commercialized, those unhappy with it can create a new virtual promised land in the environment and relocate. Rosedale feels that for now there's no reason for the management of Second Life to interfere in the transactions in the environment, though he admits that it might be necessary to break up monopolies if they become too overbearing.

There is also the issue that, as Second Life becomes more like a "super Internet", it is likely to attract the same bad players as can be found on the outside web. Mitch Kapor says: "People all bring their karma." The karma of the web includes bigotry, demagoguery, and cybercrime, and Second Life can look forward to the same. That should be no reason to condemn the concept: the telephone can be used for obscene calls and scams, but nobody seriously thinks that makes the telephone bad. Second Life's backers believe that it will be as technologically revolutionary as the telephone, and if that means there will be abuse of the system, that will just have to be dealt with; the harm, they believe, will be small compared with the value that Second Life will provide.

* To illustrate the sights and attractions of Second Life, WIRED magazine ran a heavily illustrated "travel guide" to the virtual land in the October 2006 issue. It starts out with an image of the glittering technopolis of the city of Armorg; then travels through the lovely Lost Gardens (where marriages are performed, with a legitimate minister on retainer); the International Spaceflight Museum, with its array of boosters and spacecraft on display; the island-city of Nakana, which has districts emulating different genres of Japanese anime; Svarga, a nature reserve and experiment in a virtual ecosystem; an amphitheater for conducting events for participants; and many resorts, clubs, and shopping centers.

Participants can buy weapons, tools, property, homes, artwork, clothes, and even sex organs (for their avatars) -- adult fare is available on Second Life, though it's zoned into red-light districts. For the less daring, there are online games, including action games (SAMURAI ISLAND); MYST-like exploration games (NUMBAKULLA, which was actually originally made by the creators of MYST as a morpeg, then canceled, with Second Lifers cloning it); and puzzle games (TRINGO, a cross between Tetris and Bingo that is starting to catch on in the game world outside Second Life).

Initially, Linden Labs tried to tax participants for their user-generated content, but ran into a great deal of resistance, finally turning to Lawrence Lessig of Stanford -- well-known for his work to reform the excesses of current intellectual-property law -- to develop the current free-market system. The content is built out of cubic elements, which can be modified in appearance and given different properties. The currency in Second Life is the "Linden dollar" or "L$". The conversion rate of Linden dollars versus US dollars varies over time -- exactly how the conversion rate is figured is an interesting question -- but through 2005 and 2006 the range has been about 240 to 325 L$ to a single US dollar. Baseline accounts on Second Life are free; a premium account subscription costs about $10 USD a month at this time, and the subscriber also gets a stipend of 400 L$ a month.
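As a quick check on what those figures mean, the monthly stipend can be converted at both ends of the quoted exchange-rate range. The function name is just for illustration.

```python
def linden_to_usd(linden_dollars: float, linden_per_usd: float) -> float:
    """Convert L$ to US dollars at a given exchange rate (L$ per USD)."""
    return linden_dollars / linden_per_usd


STIPEND_L = 400  # monthly L$ stipend for premium accounts, per the text

for rate in (240, 325):  # ends of the 2005-2006 range cited above
    print(f"{STIPEND_L} L$ at {rate} L$/USD = ${linden_to_usd(STIPEND_L, rate):.2f}")
# prints:
# 400 L$ at 240 L$/USD = $1.67
# 400 L$ at 325 L$/USD = $1.23
```

In other words, the stipend was worth only about a dollar and a quarter to a dollar and two-thirds a month in real money, a small fraction of the roughly $10 USD subscription.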

[ED: Looking back from 2017, Second Life would end up being an infamous bust. Game virtual worlds would zoom past it.]

* HOTHOUSE WORLD (6): Right now, the only global effort to deal with global warming is under the umbrella of the Kyoto treaty, which attempts to limit greenhouse gas emissions of signatory states. The Kyoto treaty has proven controversial. Although the treaty was set up with American backing, shifting politics meant that the US never ratified it; neither did Australia. Canada did, but doesn't seem to be reaching targets, and Japan is struggling with it as well. The European Union (EU) is taking the treaty more seriously, having set up the European Emissions Trading Scheme (ETS) to allow member states to buy and sell their emissions quotas.

Ironically, the trading scheme was originally proposed by the US, but ended up in the lap of the EU. The ETS has worked surprisingly well, with the "big three" Western European countries -- Germany, France, and the UK -- on track, though other members are having more difficulty. The World Bank has organized an annual trade fair in Cologne, to which Kyoto signatories from all over the world come to set up booths and trade emissions credits.

Progress of the signatory states towards reducing emissions has generally focused on gases, such as methane, that are much worse offenders than carbon dioxide, which is an all but unavoidable end product of combustion processes. There are criticisms that the economics of Kyoto are dodgy:

Most defenders of Kyoto admit that it's far from perfect, but it's the only global game in progress at this time. Unfortunately, it's not entirely global, with the absence of the USA from Kyoto being only too noticeable, helping make the current US administration a target for international criticism. However, even the US federal government is starting to feel pressure from the bottom. Several major American cities have implemented greenhouse gas emission control programs, and California governor Arnold Schwarzenegger is making the emissions issue a major plank in his political platform. California is already leading the US on auto emissions, and what California does is often then imitated by other US states.

Some of the citizenry is also becoming mobilized over the issue -- and not just greens, either, with conservatives worried about energy independence joining in. Both groups like the idea of renewables, and that tends to bring in farmers, who really like the idea of growing crops for ethanol production and leasing sites for wind turbines on their farmlands. Even a group of American evangelicals has come forward to warn of the threat of global warming. Hunters and fishermen are starting to line up as well, as are businesses like GE.

Right now, however, progress of legislation in Washington DC is slow. It may pick up after mid-term elections this year; if not, then after presidential elections in 2008, it being unlikely that the next administration, whether Republican or Democrat, will be as conservative as the current one. The whole world will be watching. The absence of the US from Kyoto and the mainline world politics of greenhouse gas emissions is impossible to ignore; the US, being a major greenhouse gas emitter and global industrial power, is in a position to take a lead in the struggle.

The challenge is serious. Although cars are regarded by the public as one of the biggest offenders, in fact only 13.5% of emissions come from transport -- not just cars, but trains, ships, and airplanes as well. The big offender is power generation, with 24.5% of the total, and this threatens to get worse as high oil and gas prices push a greater emphasis on coal. Deforestation comes in second, at 18%. If the US is the biggest offender in emissions at present, China and India are catching up fast. If these three nations and the EU can get their heads together, they have a fair chance of coming up with a solution that works.

There's no shortage of good ideas. Underground sequestration of CO2 from coal-fired power plants might well put a big dent in emissions. It could raise the price of energy from these plants by about 50%, but coal is really cheap energy and that wouldn't be an extremely painful burden. Renewables like wind power can also help, though their usage is not expected to skyrocket any time soon.

Governments can push by establishing clear-cut economic incentives. The real political issue, however, is international cooperation, and again the matter heavily falls on the US. If the US does establish schemes for reducing emissions, the rest of the world is likely to be strongly influenced. If the US also gets back into the process of exerting world leadership on the issue, others are very likely to follow along -- but not even the most optimistic think that America will be prepared to exercise the diplomatic option before 2008. [END OF SERIES]

* INFRASTRUCTURE -- OIL & GAS (9): We tend to take natural gas somewhat more for granted than gasoline, for the simple reason that we don't normally have to pump natural gas out at a filling station. Instead, it arrives at our homes through the gas mains to be burned in a furnace or water heater, and we get a bill at the end of the month. Obviously, this convenient arrangement demands some extensive infrastructure.

That infrastructure has been around a long time, from the days when municipal gas systems provided not only heating but also lighting. The scheme had its origins in the 19th century, with the gas originally provided by roasting coal in an oxygen-poor environment, producing a mix of hydrogen, methane, and carbon monoxide, along with traces of a wide range of other things. This "coal gas" -- which was also later synthesized by roasting the "bottom of the barrel" resid from an oil refinery -- left something to be desired as a fuel, in particular being very toxic. The "coal tar" left over from the process was extremely nasty, and a good chunk of the work of 19th-century chemists in creating synthetic dyes and the like was driven by the need to figure out how to get rid of it.

Coal gas went away in the 1950s, with natural gas taking its place. Natural gas is mostly methane, which burns clean and isn't anywhere near as toxic as coal gas. A gas leak still isn't welcome, and so traces of mercaptans -- smelly sulfur compounds that underlie halitosis and skunk smell -- are mixed with natural gas to make sure a leak can be detected, methane being otherwise odorless.

The triumph of natural gas over coal gas was due to the construction of long-distance gas lines, since otherwise it's difficult to transport natural gas in a cost-effective fashion. It is possible to ship "liquefied natural gas (LNG)" in tanker vessels, but the tankers must be fitted with expensive, heavily insulated tanks that keep the gas chilled to cryogenic temperatures. Shipping LNG is expensive, and there are also some worries that terrorists might try to use an LNG tanker as a super-bomb to attack port cities. Some countries with natural gas deposits that aren't linked up to customers by pipelines have taken to synthesizing diesel fuel out of the gas. It's a relatively expensive process, but with high fuel prices it's still cost-effective.

Gas shipped through the pipeline system has to be stored in tank farms. Traditionally, the tanks are large drums that feature telescoping segments inside an external frame, with the segments sealed with water that's heated to keep it from freezing. As the tank fills up, the sections rise, and as the tank is drained they fall; the idea is to keep air out to reduce the hazard of fire and explosion. Rigid tanks are also used, with an internal piston that rises and falls with the gas level.

These days, pressure tanks and large LNG tanks are increasingly common. A bank of about a dozen pressure tanks, which are cylinders with rounded ends like a big medicine capsule, is equivalent in storage to one of the older telescoping tanks. The LNG tanks are used for large-scale storage; LNG is 600 times denser than normal natural gas, and so a tank that doesn't seem that much bigger than the old telescoping tanks has vastly more storage capacity. Of course, LNG tanks require a cooling unit, as well as an evaporator to return the LNG to gas form for distribution.
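The storage advantage of LNG follows directly from the 600-to-1 figure. A minimal sketch, treating that ratio as exact (in practice it varies somewhat with gas composition and the reference conditions used); the tank volumes in the example are hypothetical.

```python
LNG_EXPANSION_RATIO = 600.0  # volume of gas per unit volume of LNG, per the text


def gas_equivalent_m3(lng_volume_m3: float,
                      ratio: float = LNG_EXPANSION_RATIO) -> float:
    """Volume of ordinary natural gas represented by a given volume of LNG."""
    return lng_volume_m3 * ratio


def holders_replaced(lng_tank_m3: float, gas_holder_m3: float,
                     ratio: float = LNG_EXPANSION_RATIO) -> float:
    """How many conventional gas holders one LNG tank can stand in for."""
    return gas_equivalent_m3(lng_tank_m3, ratio) / gas_holder_m3


if __name__ == "__main__":
    # Hypothetical: an LNG tank twice the volume of an old telescoping holder.
    print(holders_replaced(lng_tank_m3=20_000, gas_holder_m3=10_000))  # 1200.0
```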

The end-user distribution system hasn't changed much for a century. The gas is pumped at only slightly over atmospheric pressure and flows through pipes at a gentle rate. This means large pipes, with some of the mains being tall enough to walk through, but it improves safety, reducing leakage if a pipe fails. A gas system will have venting units to release gas in case some failure causes overpressure. One thing has changed: although the pipes in the system were traditionally made of iron, these days they're generally bright yellow plastic.
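The link between gentle flow and big pipes is the continuity relation: volumetric flow equals velocity times cross-sectional area. The sketch below, with hypothetical numbers, shows that carrying the same flow at a quarter of the velocity takes a pipe twice as wide. Low pressure compounds the effect, since gas near atmospheric pressure is less dense, so a given amount of gas takes up more volume.

```python
import math


def pipe_diameter_m(flow_m3_per_s: float, velocity_m_per_s: float) -> float:
    """Continuity: Q = v * A with A = pi * D**2 / 4, so D = sqrt(4 * Q / (pi * v))."""
    return math.sqrt(4.0 * flow_m3_per_s / (math.pi * velocity_m_per_s))


if __name__ == "__main__":
    flow = 1.0  # hypothetical flow, cubic meters per second
    fast = pipe_diameter_m(flow, 8.0)
    slow = pipe_diameter_m(flow, 2.0)
    print(f"{fast:.2f} m at 8 m/s vs {slow:.2f} m at 2 m/s")
    # prints: 0.40 m at 8 m/s vs 0.80 m at 2 m/s
```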

* In this era of expensive energy, much effort is being expended on what we'll use for fuels the day the oil and gas pumped from the ground starts becoming too expensive to be usable. There's been a lot of talk about biofuels and hydrogen; those who remember the energy crisis of the 1970s take it all with a grain of salt, but it is still clear that changes are in store, with a new fuel infrastructure arising in the future.

There have been worries that oil production will soon peak or even already has, but this is generally seen as a fringe notion. The general assessment is that the oil economy has several more decades of life left in it. The past evolution of the current fuel infrastructure gives some cause for confidence, if not complacency, that the challenge will not be overwhelming. There was no serious gasoline infrastructure in the US in 1900; by 1950, it was universal and taken for granted. Decades give plenty of time to build a new fuel infrastructure -- and if that seems maybe a bit too optimistic, go back to 1850 and consider a debate over what might happen when whale oil started to give out. There may well be difficulties in store, but on the other hand people may well look back from the year 2050 at the energy insecurity of our time and wonder why we were so worried. [END OF SET 4]

* DIAL M FOR MALWARE: The cellphone revolution has led to ever smarter cellphones that begin to seem more like pocket computers. To no surprise, as discussed in an article in SCIENTIFIC AMERICAN ("Malware Goes Mobile" by Mikko Hypponen, November 2006), they now present a tempting target for hackers trying to penetrate their operating systems, sometimes for profit but sometimes just for the malicious hell of it. The first virus to infect cellphones, a self-replicating "worm" named "Cabir", appeared in June 2004. It seemed to have originated in Spain, with its author simply posting it to a website but not trying to propagate it himself. He didn't need to, since others were more than happy to do it for him.

Researchers at F-Secure, a Finnish computer security firm, began to study Cabir to see what made it tick. This was trickier than probing malware infecting a desktop PC: although a desktop PC can usually be disconnected from a network simply by unplugging a networking cable, it's not that simple to isolate a cellphone from the greater network. Initially, the study team worked in a basement bomb shelter. Later, two special study labs, encased in aluminum and copper, were built to ensure security.

Cabir, it turned out, was a purely experimental virus, designed simply to propagate itself. However, in two years it had been followed by about 200 cellphone viruses, with some disabling phones, others deleting data, and still others ordering the phone to send messages to high-priced phone numbers.

* The first PC virus, named "Brain", was introduced in 1986. Two years after that, computer experts were claiming viruses would never be any more than a minor nuisance, an idle fad that would pass once the virus writers got bored. Now over 200,000 viruses have been identified, and malware writers have used them to assemble huge "zombienets" of compromised PCs whose computing power they lease out. As far as cellphones go, it is now 1988, but this time nobody is being complacent: with over two billion cellphones in operation on the planet, the potential for trouble is enormous.

Until recently, cellphone viruses were also regarded as a curiosity because cellphones weren't highly standardized, with different vendors using different proprietary technologies. A virus written to nail one type of cellphone would not work against another. The new generation of "smartphones" is much more standardized, with many running the Symbian operating system (OS) and a good number (mostly in the Far East) running "pocket" versions of the Linux OS. The smartphone installed base is growing rapidly; a modern smartphone has more computing power than a PC of the mid-1980s, and soon the smartphone will be the most common personal computing device on the planet. The smartphone network makes an irresistible target for virus writers.

At present, smartphone technology is very easy to compromise. Many smartphones have a "Bluetooth" interface, a short-range secondary wireless communications channel allowing a phone or other device to trade data with another Bluetooth node within a distance of about ten meters (33 feet). Normally, a device with a Bluetooth interface operates automatically, linking to any other Bluetooth node in range -- which not only makes a Bluetooth-enabled smartphone easy to infect, but also makes it a virulent source of infections once it has been compromised. Users can set their smartphones to a "nondiscoverable" mode so that they won't hook up automatically with other Bluetooth nodes, but most smartphone users don't know they can turn their Bluetooth connection on and off at will.
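To see why always-discoverable phones matter, here's a toy simulation, not a model of any real worm: phones are scattered at random, an infected phone can reach any discoverable phone within about ten meters, and nondiscoverable phones are assumed to be immune. All names and parameters are hypothetical, and the sketch ignores the fact, noted below, that current phone malware relies on tricking users into accepting it.

```python
import math
import random
from dataclasses import dataclass

BLUETOOTH_RANGE_M = 10.0  # approximate range cited in the text


@dataclass
class Phone:
    x: float
    y: float
    discoverable: bool
    infected: bool = False


def in_range(a: Phone, b: Phone, range_m: float = BLUETOOTH_RANGE_M) -> bool:
    return math.hypot(a.x - b.x, a.y - b.y) <= range_m


def spread(phones: list[Phone], steps: int) -> int:
    """Toy worm model: each step, every discoverable phone within range of an
    infected phone becomes infected. Returns the number of infected phones."""
    for _ in range(steps):
        newly_infected = [
            p for p in phones
            if not p.infected and p.discoverable
            and any(q.infected and in_range(q, p) for q in phones)
        ]
        if not newly_infected:
            break
        for p in newly_infected:  # apply after scanning so each step is one hop
            p.infected = True
    return sum(p.infected for p in phones)


def random_crowd(n: int, area_m: float, discoverable_fraction: float,
                 seed: int = 0) -> list[Phone]:
    """Scatter n phones over a square area; phone 0 starts out infected."""
    rng = random.Random(seed)
    phones = [Phone(rng.uniform(0, area_m), rng.uniform(0, area_m),
                    rng.random() < discoverable_fraction) for _ in range(n)]
    phones[0].infected = True
    return phones


if __name__ == "__main__":
    for fraction in (1.0, 0.5, 0.1):
        crowd = random_crowd(n=300, area_m=200.0, discoverable_fraction=fraction)
        print(f"{fraction:.0%} discoverable -> {spread(crowd, steps=50)} infected")
```

Running it with different discoverable fractions shows how sharply the infection count falls as fewer phones advertise themselves; the exact numbers depend on the random seed.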

For example, consider the operation of the worm "CommWarrior.Q":

Although CommWarrior.Q wasn't designed to make money for its creator, that doesn't mean it won't rip off Bob and Alice. They have to pay a premium for each MMS file they send, and CommWarrior.Q industriously sends them out in volume, running up bills.

The first cellphone virus designed to make money was "RedBrowser", which appeared in early 2006. It was actually a "trojan horse", based out of a website that offered downloadable goodies for cellphones. The website installed a Java program -- which can run on any OS that supports Java -- that then quietly sent messages to a premium-rate phone number that charged a hefty fee for each one.

Smartphones are increasingly being used as "electronic wallets", making the incentive to try to compromise them just that much greater. There's also the threat of "spyware", with one program, "FlexiSpy", reporting a log of the calls and MMS transfers from a compromised phone. New phones have voice recording facilities, and virus writers are sure to try to listen in on the recorded conversations.

* Makers and service providers for smartphones have something of an advantage in that they can see the virus threat coming. As mentioned, it wasn't taken all that seriously on PCs in the early days, but now everybody knows what a nightmare it finally turned into. Smartphone viruses are still fairly primitive: none of the hundreds of malware programs written so far actually exploit holes in the operating system, instead trying to trick users into letting them in.

Some antiviral programs are already available for smartphones. Firewall software that warns users when a program on the smartphone tries to open an internet connection still needs to be developed. Some service providers monitor traffic and block MMS files corrupted by viruses, but all providers need to adopt this as standard practice. The "Trusted Computing Group" has been working on standards for microcircuitry to provide improved security for smartphones, and the latest versions of the Symbian OS also include improved security schemes. Symbian now requires any program installed on a smartphone to have a "digital certificate", which has to be obtained from Symbian and is hard to forge.
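The kind of per-application firewall described here can be sketched in a few lines. This is a hypothetical design, not Symbian's or any vendor's actual API: each program gets an allow, deny, or ask policy, and unknown programs default to asking the user before they can open a connection.

```python
from enum import Enum
from typing import Callable


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    ASK = "ask"


class AppFirewall:
    """Toy per-application firewall consulted before any outbound connection."""

    def __init__(self, ask_user: Callable[[str, str], bool]):
        self.policy: dict[str, Decision] = {}
        self.ask_user = ask_user  # shows a warning; returns True to allow

    def set_policy(self, app: str, decision: Decision) -> None:
        self.policy[app] = decision

    def may_connect(self, app: str, host: str) -> bool:
        decision = self.policy.get(app, Decision.ASK)  # unknown apps: ask
        if decision is Decision.ALLOW:
            return True
        if decision is Decision.DENY:
            return False
        return self.ask_user(app, host)


if __name__ == "__main__":
    def prompt(app: str, host: str) -> bool:
        print(f"{app} wants to connect to {host}; denying by default")
        return False

    fw = AppFirewall(ask_user=prompt)
    fw.set_policy("browser", Decision.ALLOW)
    print(fw.may_connect("browser", "example.com"))         # True
    print(fw.may_connect("free_ringtones", "example.net"))  # prompts, then False
```

The ASK path is only as good as the user's answer, which is exactly where malware that keeps nagging for a YES gets its chance.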

Governments also need to get their act together on dealing with cybercrime, providing penalties and enforcement. However, the ultimate responsibility will rest on users. All the tools in the world won't help if the users don't take advantage of them, and go on answering YES just because malware won't take NO for an answer.

* HYDRAULIC HYBRIDS? An article run here in October mentioned the concept of "hydraulic hybrid" automobiles, a scheme in which fluids are stored under pressure by braking and then released to provide acceleration. According to backers, hydraulic hybrid systems are potentially lighter, cheaper, and more effective than electric hybrid systems. According to an article in BUSINESS WEEK ("Gas Saver Or Tailpipe Dream?" by David Welch, 18 September 2006), not everyone is convinced.

Hydraulic hybrids are now being pushed by parts makers Eaton Corporation and BorgWarner Inc., with the leading advocate being an inventor named Tom Kasmer, who was involved with the development program conducted by the US Environmental Protection Agency (EPA) and United Parcel Service mentioned in the earlier article. The EPA-UPS system increased mileage by 50%, and the technology has attracted the attention of heavy-equipment manufacturer Bobcat.

The scheme is conceptually simple. Braking drives hydraulic fluid, usually oil, from a low-pressure reservoir into a high-pressure reservoir. Acceleration vents oil back in the reverse direction, driving a set of vanes connected to the drive axle. Kasmer claims his "hydristor" system is superior to an electric hybrid system in almost every respect. A study by an EPA group not associated with Kasmer, as well as investigations by Eaton and BorgWarner, also give high marks to the scheme. A prototype hydraulic hybrid system that BorgWarner experimentally fitted to a subcompact car increased mileage by 21%.
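How much energy is on offer at each stop? The upper bound is the vehicle's kinetic energy, one half m v squared, and the accumulator returns some fraction of it. The sketch below takes the round-trip efficiency as a parameter; the 70% figure, truck mass, speed, and stop count in the example are hypothetical, not numbers from the article or from EPA testing.

```python
def kinetic_energy_j(mass_kg: float, speed_m_per_s: float) -> float:
    """E = 1/2 * m * v**2."""
    return 0.5 * mass_kg * speed_m_per_s ** 2


def recovered_energy_j(mass_kg: float, speed_m_per_s: float,
                       round_trip_efficiency: float) -> float:
    """Energy returned for reuse after one stop, given an assumed
    braking-to-acceleration round-trip efficiency between 0 and 1."""
    if not 0.0 <= round_trip_efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return round_trip_efficiency * kinetic_energy_j(mass_kg, speed_m_per_s)


if __name__ == "__main__":
    # Hypothetical garbage hauler: 15 tonnes, 30 km/h, 70% round trip, 500 stops.
    per_stop = recovered_energy_j(15_000, 30 / 3.6, 0.70)
    per_route_kwh = per_stop * 500 / 3.6e6
    print(f"{per_stop / 1000:.0f} kJ per stop, about {per_route_kwh:.0f} kWh per route")
    # prints: 365 kJ per stop, about 51 kWh per route
```

Because the recoverable energy scales with mass and every stop offers a fresh helping, heavy vehicles that stop often stand to gain the most.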

Eaton Corporation sees hydraulic hybrid technology as particularly well suited to delivery and service trucks, such as garbage haulers, that move from stop to stop over short intervals. Kasmer is also working with a bicycle manufacturer to design a bike with a hydristor system replacing the conventional gears and chain. In theory, the bike would be simpler than a traditional bike and easier to pedal, since it could store energy that a traditional bike throws away.

What about Detroit? Motor City leadership is wary. A GM official points out that people have been tinkering with hydraulic schemes for a long time, and finds the mileage figures cited by advocates hard to believe. There's also a problem long associated with hydraulic systems of all sorts: they leak. Another drawback is noise, with an Eaton researcher admitting that they "groan like the landing gear on an airplane." For the moment, hydraulic hybrids seem like an interesting possibility whose advocates still have some proving to do.

[ED: They did. As of 2017, the trash trucks in Loveland CO are hydraulic hybrids.]

* THE 300 MILLION: According to an article in THE ECONOMIST ("Now We Are 300,000,000", 14 October 2006), the United States of America passed a milestone in 2006: its population officially reached 300 million. The 200 million mark had been reached in 1967, and the 400 million mark is expected to be reached around 2043. This is a remarkable rate of population growth for a wealthy country; the USA is the world's third most populous nation, after China and India. In contrast, Japan and the EU are expected to lose millions of people over the next few decades.
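The three milestone dates imply average annual growth rates, which can be worked out with the ordinary compound-growth formula. This is a minimal Python sketch; it assumes constant growth between milestones, which real populations never follow exactly, and it treats the 2043 date as given even though it is only a projection.

```python
def annual_growth_rate(start_pop: float, end_pop: float, years: float) -> float:
    """Constant compound annual growth rate r such that
    start_pop * (1 + r) ** years == end_pop."""
    return (end_pop / start_pop) ** (1.0 / years) - 1.0


# Milestones from the article; 2043 is a projection, not a count.
MILESTONES = [(1967, 200e6), (2006, 300e6), (2043, 400e6)]

if __name__ == "__main__":
    # Pair each milestone with the next one and report the implied rate.
    for (y0, p0), (y1, p1) in zip(MILESTONES, MILESTONES[1:]):
        rate = annual_growth_rate(p0, p1, y1 - y0)
        print(f"{y0}-{y1}: {rate:.2%} per year over {y1 - y0} years")
```

Run as written, this gives about 1.05% per year for 1967-2006 and about 0.78% per year for 2006-2043. The projected next 100 million arrives in slightly less time than the last, even though the percentage rate is lower, because the population is growing from a larger base.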

These are all just estimates, and many factors, such as immigration, changing lifestyles, and rising or falling lifespans, can change the final sums. However, it is well established that the US birthrate averages 2.1 children per woman. That is only about the replacement rate, but combined with robust immigration it means a booming population. Contrast this with the EU, where the fertility rate is 1.47 and the population is expected to start falling in 2010. In Spain and Italy, it's 1.28, and without immigration the populations of those countries will fall by half in 42 years.
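The Spain and Italy figure can be sanity-checked with a crude generational model: at a fertility rate of 1.28 against a replacement rate of 2.1, each generation is about 1.28/2.1, or 61%, the size of the one before. The Python sketch below assumes a fixed 30-year generation and ignores age structure, changing mortality, and population momentum. Those simplifications are assumptions made here for illustration, not the method behind the article's figure.

```python
import math

REPLACEMENT_TFR = 2.1  # children per woman that holds a population steady


def years_to_halve(tfr: float, generation_years: float = 30.0) -> float:
    """Years for a closed population (no immigration) to halve if every
    generation is tfr / REPLACEMENT_TFR times the size of the previous one.

    generation_years defaults to an assumed 30 years; age structure and
    population momentum are ignored.
    """
    ratio = tfr / REPLACEMENT_TFR
    if ratio >= 1.0:
        raise ValueError("fertility at or above replacement: no halving")
    # Number of generations n such that ratio ** n == 0.5.
    generations = math.log(0.5) / math.log(ratio)
    return generations * generation_years


if __name__ == "__main__":
    for label, tfr in [("Spain/Italy", 1.28), ("EU average", 1.47)]:
        print(f"{label}: halves in about {years_to_halve(tfr):.0f} years")
```

With those assumptions the model gives about 42 years for Spain and Italy, matching the figure quoted, and about 58 years at the EU-wide rate of 1.47.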

Falling birthrates are generally seen as an indication of prosperity. In poor countries, families try to have more kids to provide extra hands for work, as well as providing an old-age safety net. In rich societies, children can be a very expensive proposition, and with women working more and more, child-rearing means losing a good part of family income while financial demands jump up abruptly. Couples in rich countries end up balancing their desire for the good life with their desire for children.

So why the high US birthrate? It seems that one of the answers is that Americans are more devout than Europeans. There is a tendency overseas to view the US as something like the Western equivalent of Afghanistan, a hotbed of religious fundamentalism, but in reality American religious conservatism is not always extreme, and the vision of fundamentalist families with hot and cold running kids is, as a birthrate of 2.1 kids per woman shows, much more the exception than the rule. However, a comfortable association with a religious faith not only pushes family values but provides resources to help raise families.

There is also the fact that equality of the sexes is good, if not perfect, in the USA; studies seem to show that more male-dominated societies like Japan, where child-rearing falls heavily on women, have lower birthrates. In addition, there are more wide-open spaces in the US for families to grow. The big urban centers are of course crowded and have high living costs, but there's plenty of space left in the heartland and the West.

The changes in population mean changes in demographics. Cities like Houston, Texas, were once white-dominated; now whites share power and influence with Hispanics, blacks, and Asians. Some non-white Houston residents don't feel race is an issue there, one saying: "Everybody's so busy making money they don't have time to worry about race." Others disagree to an extent, but most of the citizenry still feels the multi-ethnic nature of their city is a strength in an age of globalization. As goes Houston, so it seems will go the rest of the USA.

Population growth, in spite of the old fears of a "population doomsday", is generally seen as a good thing here. By 2050, there will be one retired European for every two Europeans in the workforce; in the US the ratio will be a more tolerable one to three. The attitude is that problems of growth are better than problems of decline. Other countries may find the US population boom less welcome. The USA is now widely seen abroad as overbearing, though Americans have been acquiring some humility the hard way, and the prospect of 400 million Americans is likely to cause some nervousness. Still, the more diverse and globalized America of 2043 may not be as big an irritant.

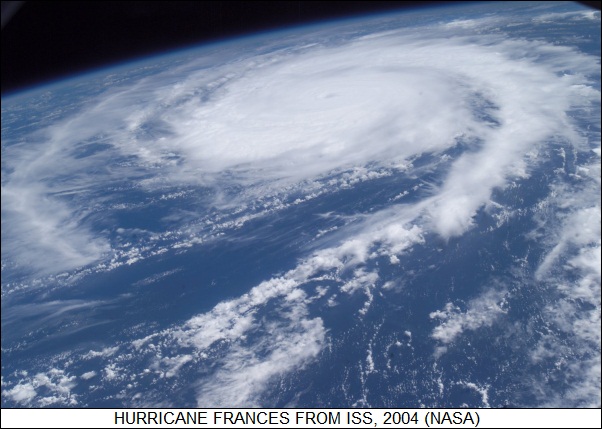

* HOTHOUSE WORLD (5): Concerns about shifting ocean currents, melting glaciers, and nastier hurricanes are only part of the global-warming package of worries. There is the simple impact of hotter and more unbearable summers, and of the spread of insects like mosquitoes that carry disease.

The realization that global warming is good for mosquitoes leads to the question of what effects it will have on other organisms on the planet. The evidence suggests that in many locales, organisms accustomed to warm climates are expanding their ranges at the expense of life-forms accustomed to cool climates. Red foxes are becoming more common -- arctic foxes, less so. Polar bears are obviously hurting as their icepack range gradually melts. Plants ought to do generally better in a CO2-rich world, but overall global warming is a challenge to the Earth's species, in large part because they are already being pressured by human activities. Most biologists think that a goodly number of species are going to die out in the coming century. All in all, global warming doesn't look like any picnic -- so what to do about it?

* One of the first considerations is of course cost, including both the cost of the problem and the cost of fixing it. If the damage caused by global warming is less than the cost of fixing it, there's going to be a disinclination to take action. Economists have been pushing hard to come up with cost models, factoring in not only the science but also the politics and the social implications.

The models suggest that some folks will actually come out ahead on global warming -- a hotter world will make things better for Russia, where a large part of the territory is more or less useless a good part of the year. Some models even suggest the USA will obtain a slight benefit, though others suggest a slight decline. However, the models are unambiguous in stating that the tropics -- particularly South Asia and Africa -- will suffer badly, bringing down the average heavily.

The cost of fixing the problem is heavily dependent on items that are hard to estimate. How much can be done to promote energy conservation, and at what cost? How fast will the cost of renewable energy technologies fall? How fast do emissions need to be brought down? This last issue is a subject of debate. If the job doesn't need to be done in a hurry (and, given that global warming is a long-term issue, there's a fair case for saying there's no rush), then the fixes are much cheaper: instead of scrapping existing infrastructure immediately in favor of "green" replacements, the existing infrastructure can be used to the end of its normal life and then replaced with more environmentally appropriate infrastructure.

What further complicates the equations is the concept of value across different borders. Putting a money value on global warming damage to the UK and India is tricky, because the number of Britons living in serious poverty is much smaller than the number of Indians living under such conditions. Global warming might be an inconvenience for most citizens of prosperous countries, but it could be a real disaster for poor citizens of poor countries. Another complication is the "time value of money": will money spent now have an ultimate effect much greater than money spent later, or the reverse?
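The "time value of money" question, and the cost-benefit test mentioned earlier, are usually handled by discounting: a cost or damage t years in the future is converted to a present-day value by dividing it by (1 + r)^t for an annual discount rate r. The Python sketch below is a minimal illustration of that standard formula; the 1,000 units of avoided damage, the 100-year horizon, the 100-unit cost of the fix, and the three discount rates are all hypothetical, chosen only to show how strongly the verdict depends on r.

```python
def present_value(amount: float, years: float, rate: float) -> float:
    """Value today of `amount` occurring `years` from now at a constant
    annual discount rate: PV = amount / (1 + rate) ** years."""
    return amount / (1.0 + rate) ** years


def worth_acting(cost_now: float, damage_avoided: float,
                 years: float, rate: float) -> bool:
    """Simple cost-benefit test: act if the discounted value of the
    damage avoided exceeds what the fix costs today."""
    return present_value(damage_avoided, years, rate) > cost_now


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    COST_NOW = 100.0         # cost of the fix, paid today
    DAMAGE_AVOIDED = 1000.0  # damage avoided, 100 years from now
    YEARS = 100
    for rate in (0.01, 0.03, 0.05):
        pv = present_value(DAMAGE_AVOIDED, YEARS, rate)
        verdict = "act" if worth_acting(COST_NOW, DAMAGE_AVOIDED, YEARS, rate) else "wait"
        print(f"rate {rate:.0%}: damage worth {pv:.1f} today -> {verdict}")
```

At 1% the future damage is worth about 370 today, at 3% about 52, and at 5% under 8, so whether the hypothetical fix "pays" flips with the choice of rate. That sensitivity is one reason cost estimates for global warming vary so widely.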

Some economists think that such considerations are generally red herrings. Why worry about global warming for a poor country like Bangladesh when such places have so many other problems to fix? Why not spend the money on known problems, strengthening the citizenry to be better able to deal with less certain problems if or when they arise? However, most economists believe that global warming will have a severe impact, that the costs of remediation are not so large, and that things don't have to be fixed in any enormous hurry.

* While not all businesses are sold on the threat of global warming, a surprising number are. For example, Shell Oil in the Netherlands figured out a way to get rid of carbon dioxide from refineries by pumping it into greenhouses, which also helped put food on the table. In the US, giant General Electric (GE) is pushing a strategy called "Ecomagination". Partly businesses are turning green because it helps recruit young blood; partly it's out of a need to conserve energy and other resources as their prices rise; but mostly it's out of fear of the government. Companies faced with the prospect of governments handing down regulations unilaterally find it wiser to set up responsible programs on their own terms.

In many cases, the greenery is something of a sales pitch. GE's Ecomagination product line features energy-efficient products, but for the most part they would have been developed anyway. GE's latest turbofan engines for jetliners are more fuel-efficient and produce lower emissions than the previous generation of turbofans -- but then, they would have had to be in any case, since nobody's going to buy fuel-inefficient jetliner engines these days.

Still, no matter what the motivations of businesses are, the end result is basically the one environmentalists want. A much more aggressive green program wouldn't have been sensible from a business point of view, and so not sustainable. Programs like Ecomagination can be seen as a reasonable compromise between greenery and profits, and from an enlightened point of view, convincing business executives that there really is a correlation between the environment and the bottom line is all for the good. [TO BE CONTINUED]

* INFRASTRUCTURE -- OIL & GAS (8): The neighborhood gas station is a more sophisticated facility than we generally give it credit for being. In the early days, it was simple enough: a general store installed a pump at the curb and sold gasoline along with other goods, with the gas often pumped by the purchaser. By the middle of the 20th century, this arrangement had given way to the filling station, which not only pumped gas but performed automotive maintenance and repairs as well. In the 1980s, the cycle went back to its origins, with the typical gas station incorporating a convenience store and self-service pumps -- which could accept credit cards. Automotive maintenance and repair is now generally performed by more specialized shops.

Early gas pumps were hand-cranked, with the fuel pumped up to a glass vessel with gallon markings at the top, allowing the customer to observe the actual amount of fuel before draining it through a hose into the car's gas tank. Even into the late 1950s, pumps had a little glass globe with a plastic turbine to allow the customer to watch the fuel being pumped. (I vaguely remember this myself.)

In the early days, station owners stored gas in drums, but as fuel use increased, storage tanks were buried to save space and to reduce fire hazard. However, the early steel tanks tended to rust and then leak. Owners didn't always worry too much about the loss of gas from a rusty tank, but once environmental standards became important, a leaky tank could turn a gas station into a hazardous waste site whose cleanup could be ruinously expensive. Nowadays, tanks are made of fiberglass that doesn't corrode, with the tanks surrounded by monitoring wells or, increasingly, electronic sensors to check for leaks. In some places in the US, above-ground storage tanks are coming back into style, particularly for kerosene and diesel fuel, which are far less volatile than gasoline and so don't pose much of a fire hazard.

A typical tank at a US gas station has a capacity of about 45,400 liters (12,000 US gallons). Of course, there are separate tanks for each grade or type of fuel. The tanks are filled from fittings recessed into the concrete pad around the tanks, with the fittings color-coded for the type of fuel:

____________________________________

WHITE     regular unleaded gasoline.
BLUE      "plus" gasoline.
RED       premium gas.
YELLOW    low sulfur diesel.
GREEN     high sulfur diesel.
BROWN     kerosene.
____________________________________

Monitoring wells are capped with alert orange to make sure nobody tries to pump fuel into them.
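The color-coding scheme amounts to a lookup table, and a small check routine shows how it guards against filling the wrong tank. This Python sketch encodes only the colors listed above plus the orange monitoring-well cap; the function `is_correct_fitting` and the idea of doing the check in software are hypothetical illustrations, not a description of any real station or tank-truck system.

```python
# Fill-fitting colors as listed above (US practice per the article).
# ORANGE caps mark monitoring wells, which must never receive fuel.
FILL_COLORS = {
    "WHITE": "regular unleaded gasoline",
    "BLUE": '"plus" gasoline',
    "RED": "premium gas",
    "YELLOW": "low sulfur diesel",
    "GREEN": "high sulfur diesel",
    "BROWN": "kerosene",
}
MONITORING_WELL_COLOR = "ORANGE"


def is_correct_fitting(cap_color: str, product: str) -> bool:
    """Hypothetical check: True only if `product` matches the cap color.

    Monitoring wells and unknown colors always fail.
    """
    color = cap_color.strip().upper()
    if color == MONITORING_WELL_COLOR:
        return False
    # .get() returns None for unknown colors, which never equals a product.
    return FILL_COLORS.get(color) == product


if __name__ == "__main__":
    print(is_correct_fitting("red", "premium gas"))          # True
    print(is_correct_fitting("ORANGE", "kerosene"))          # False: monitoring well
    print(is_correct_fitting("WHITE", "low sulfur diesel"))  # False: wrong grade
```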

In the last decade or so, air pollution standards have led to another innovation, the "vapor recovery" system. This involves a hood around the pump spigot that draws up butane evaporating as the fuel is pumped, reducing air pollution and retaining the butane for use in the remaining fuel.

Other innovations are being introduced as well. Some gas-station chains have RFID systems that allow a customer simply to present a "key" to the pump, fuel up, and drive away, with the purchase billed automatically to a charge account. Pumps with video displays are also becoming available, pitching advertising to customers while they fill up their vehicles, generally to the annoyance of consumers. [TO BE CONTINUED]

* LEDS FOR THE DEVELOPING WORLD: An article in THE ECONOMIST ("Lighting Up The World", 23 September 2006) described efforts to bring the advantages of light-emitting diodes (LEDs) to the developing world.